Pentagon vs Anthropic: “Any Lawful Use”, Claude, and Supply Chain Risk Threat

The Department of War wants “any lawful use” of Claude

The U.S. Department of War and Anthropic are locked in a contract dispute.

It’s a fight over two questions: (1) whether a private frontier lab can impose categorical limits on lawful government use, and (2) whether the government can force compliance by threatening to blacklist that lab from the defense supply chain.

This could affect up to $200M in contracts, and the leverage is significant (Claude already sits inside national security workflows).

The Pentagon’s written AI strategy signals this isn’t a one-off negotiation but the opening move in a broader push to strip vendor-imposed restrictions from military AI use.

Anthropic’s 2 Red Lines

Claude must not be used for:

Mass domestic surveillance of Americans, i.e., bulk, population-scale analytics run against domestic datasets.

Fully autonomous weapons, i.e., systems that select and engage targets without meaningful human oversight.

The Pentagon’s position, stated in its recent “Artificial Intelligence Strategy for the Department of War,” is that all commercial AI used by the military must be:

“Free from usage policy constraints that may limit lawful military applications”

… and that procurement contracts must include standard “any lawful use” language within 180 days.

In other words: if it’s legal, the vendor doesn’t get a veto.

Defense Secretary Pete Hegseth is “close” to cutting business ties with Anthropic entirely and designating the company a “supply chain risk” — a designation usually reserved for foreign adversaries.

The $200M headline contract number is a complete distraction; it’s a small share of Anthropic’s current biz. A “supply-chain risk” designation, however, would be a big deal: under it, all contractors would have to certify zero Anthropic/Claude use in defense workflows, and the blast radius could extend far beyond the DoW.

And even if Anthropic later compromises, the reputational scar persists.

A senior Pentagon official told Axios:

“It will be an enormous pain in the ass to disentangle, and we are going to make sure they pay a price for forcing our hand like this.”

That’s a potential existential threat to Anthropic’s government business.

The tone from the Pentagon’s chief spokesman, Sean Parnell, is equally blunt:

“Our nation requires that our partners be willing to help our warfighters win in any fight.”

Reports suggest that senior defense officials have been frustrated with Anthropic for some time and “embraced the opportunity to pick a public fight.”

Meanwhile, Katie Miller (Stephen Miller’s wife) has publicly accused Anthropic of liberal bias and criticized the company’s commitment to “democratic values,” giving the dispute an explicitly ideological charge that the other frontier labs have avoided.

Unlike OpenAI, Google, and xAI — all of which have already agreed to remove their safeguards for use on the military’s unclassified systems — Anthropic is the only major lab still pushing back.

Why is Anthropic’s Claude the “Go-To” AI for the U.S. Military in 2026?

For now, the Pentagon can’t easily “walk away” from Claude.

Claude is not the only AI used by the U.S. military. The DoW deploys multiple models, including ChatGPT, Grok, and Gemini, on unclassified networks through genai.mil, which is reportedly available to over 3 million Defense/War Department employees.

But only Anthropic has a major presence in classified environments, as a result of deep integration with Palantir.

In 2024, Anthropic and Palantir announced a partnership to operationalize Claude in Palantir’s AIP on AWS for U.S. government intelligence and defense workloads.

Palantir was already heavily embedded in government defense workflows, making the Anthropic/Claude x Department of Defense/War (DoD/DoW) pairing a natural fit.

Additionally, in April 2025, Anthropic joined Palantir’s FedStart program, expanding Claude’s availability to government customers via Google Cloud.

So this is how we ended up with Claude being the “go-to” AI for military operations in 2026. Other models could be used, but they aren’t as deeply integrated with Palantir, and operators are less familiar with them.

Furthermore, it takes time to bring a new frontier model (from OpenAI, xAI, Google, etc.) into the classified fold.

Former Biden administration AI advisor Varoon Mathur told Fast Company:

“If you’ve never operated in a classified environment before, you essentially need a vehicle. Palantir is a defense contractor with deep operational integration. Anthropic is an AI model provider trying to access that ecosystem.”

The Pentagon is still working to pull multiple labs into classified networks, but the pipeline is not broadly mature.

Senior administration officials claim that competing models “are just behind” when it comes to specialized government applications. (The models themselves aren’t really “behind” in performance; integration with Palantir and government operations just takes time.)

None of that means the government can’t route around Anthropic.

But routing around is slow and expensive in the near term (months to >1 year for classified parity), and usually comes with tradeoffs: weaker models, worse integration, more bureaucracy, or zero vendor constraints because it’s open-source or fully government-run.

Anthropic can’t stop the capability forever; it can only make the Pentagon pay a timeline-and-friction tax. That’s why the Pentagon is reaching for coercive leverage instead of simply switching vendors tomorrow: it wants to speed things up.

Palantir has stayed radio silent as tensions escalate. If the supply-chain risk designation goes through, defense contractors would have to certify they aren’t using Claude in government workflows, which could damage Anthropic’s business and reputation.

We know Claude has already been leveraged for serious ops: the WSJ reported Claude was used in the Maduro raid. And an Anthropic employee allegedly reached out to Palantir to ask about Claude’s use in the operation, though Anthropic denied it spoke with Palantir beyond technical discussions.

How Does Claude Spy on Americans? It Doesn’t.

Claude isn’t a camera, a wiretap, or a collection system. It doesn’t go out on its own and spy on anyone. It’s a frontier AI model that processes data fed to it and generates outputs based on its algorithm. It has no idea whether it’s reading a real immigration database or a fantasy novel. If you paste a million rows of data into Claude and say “find patterns,” it just does it.

The dispute between Anthropic and the Pentagon is about contract language.

Anthropic wants contractual carve-outs that prohibit two categories of use.

The Pentagon wants blanket “any lawful use” terms so they don’t have to lawyer every application against Anthropic’s policy.

This is a fight over terms of service, not over the model blocking prompts.

The Usage Policy for Anthropic’s Claude

Anthropic’s usage policy lists its surveillance-related restrictions under:

“Do Not Use for Criminal Justice, Censorship, Surveillance, or Prohibited Law Enforcement Purposes.”

The relevant prohibitions include:

“Target or track a person’s physical location, emotional state, or communication without their consent, including using our products for facial recognition, battlefield management applications or predictive policing”

“Utilize models as part of any law enforcement application that violates or impairs the liberty, civil liberties, or human rights of natural persons”

Even for government customers who receive tailored exceptions, Anthropic’s documentation says restrictions on “domestic surveillance” and “the design or use of weapons” remain in place. The problem is that these examples read like they’re regulating collection systems (trackers, recognizers, policing systems).

Claude isn’t a sensor and doesn’t collect anything. But once integrated into an operational platform with databases and tool access, Claude can still function as the analytic layer:

Analyze location fields already collected elsewhere

Correlate identities and relationships

Summarize and generate watchlists from structured/unstructured data

Interpret outputs from other systems (including face-recognition outputs)

Automate triage and reporting at dataset scale

So the mismatch is this: the policy language is written as if it’s regulating the collector, while the real dispute is about the analytic layer running at scale over already-collected data.

That’s also why the practical use case in dispute is simple: the government feeds Claude a volume of already-collected, legally obtained data and asks it to analyze text at scale, find patterns, and generate summaries.

Claude Is Used via Palantir’s AIP

Claude in a classified defense setting is not someone typing questions into a chat window. It’s running inside Palantir’s AIP, an operational AI platform that is far more capable than most people realize.

Palantir’s AIP includes:

AIP Agent Studio, which lets users build autonomous agents that “handle complex workflows autonomously”: agents that can read from and write to data systems and take actions, not just answer questions.

AIP Automate, where you can define conditions “checked continuously or on a schedule,” with effects that “execute automatically”; and for streaming, “effects will execute within seconds of new data entering the ontology.”

Pipeline Builder LLM, which enables “executing large language models on your data at scale” — running Claude across entire datasets in batch, not one-off prompts.

Ontology, a system Palantir says “enables both humans and AI agents to collaborate” across operational workflows; Palantir also describes a “journey from augmentation to automation.”

In other words: Claude inside AIP can power agents and automations that run without a human initiating each query or step, and can be configured to write back actions or generate alerts — with the degree of human review/approval determined by the surrounding workflow.
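To make the “augmentation vs. automation” distinction concrete, here is a minimal Python sketch of a generic trigger, model call, and effect loop. It is not Palantir’s API: `Event`, `Action`, `Automation`, `fake_model`, `is_delay`, and the `require_human_approval` flag are hypothetical names invented for illustration, and the “model” is a stub standing in for an LLM call. What it shows: the trigger and the model are identical in both configurations; only the surrounding workflow decides whether an effect executes automatically or waits for a human.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Event:
    record_id: str
    text: str


@dataclass
class Action:
    record_id: str
    summary: str
    approved: bool = False


@dataclass
class Automation:
    """Hypothetical trigger -> model -> effect loop (illustrative, not Palantir's API)."""
    condition: Callable[[Event], bool]
    model: Callable[[str], str]          # stub standing in for an LLM call
    require_human_approval: bool
    approver: Optional[Callable[[Action], bool]] = None  # stands in for a human reviewer
    executed: List[Action] = field(default_factory=list)
    pending: List[Action] = field(default_factory=list)

    def on_event(self, event: Event) -> None:
        # Events that don't meet the condition are ignored.
        if not self.condition(event):
            return
        action = Action(event.record_id, self.model(event.text))
        if not self.require_human_approval:
            # Automation: the effect executes with no human in the loop.
            action.approved = True
            self.executed.append(action)
        elif self.approver is not None and self.approver(action):
            # Augmentation: a reviewer signed off before execution.
            action.approved = True
            self.executed.append(action)
        else:
            # No sign-off yet: the action waits in a review queue.
            self.pending.append(action)


def fake_model(text: str) -> str:
    return "summary: " + text[:20]


def is_delay(event: Event) -> bool:
    return "delayed" in event.text


events = [Event("r1", "shipment delayed at port"), Event("r2", "routine status update")]
automatic = Automation(is_delay, fake_model, require_human_approval=False)
gated = Automation(is_delay, fake_model, require_human_approval=True)
for e in events:
    automatic.on_event(e)
    gated.on_event(e)

print(len(automatic.executed), len(gated.executed), len(gated.pending))  # 1 0 1
```

The design point is that human review lives in the workflow wrapper, not in the model: swapping the stub for a real model wouldn’t change whether the approval gate exists.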

How Is Claude Being Used Now?

Anthropic says Claude:

“Is used for a wide variety of intelligence-related use cases across the government, including the DoW.”

Given the platform it sits on (Palantir), current applications likely include: summarizing and synthesizing large volumes of intelligence reports; drafting briefings, assessments, and operational planning documents; translating and analyzing foreign-language source material; extracting structured data (names, locations, timelines, relationships) from unstructured text at dataset scale; running analytical workflows against intelligence feeds; and powering agent-driven queries across classified knowledge bases.

Presumably this work runs against foreign intelligence and operational planning data, the kind of use Anthropic’s government exceptions were designed to allow.

An Anthropic spokesperson told Axios that the company’s conversations with the DoW:

“Have focused on a specific set of Usage Policy questions — namely, our hard limits around fully autonomous weapons and mass domestic surveillance — none of which relate to current operations.”

So “current use cases” aren’t the dispute.

What the Pentagon Wants Going Forward

The Pentagon’s complaint, per Axios, is that Anthropic’s terms have:

“Gray areas that would make it unworkable to operate on such terms.”

The Pentagon wants “any lawful use” terms so it doesn’t have to evaluate and question every future application against an ambiguous usage policy.

The Pentagon’s steelman is blunt: The government already runs domestic-facing enforcement and intelligence workflows. AI makes existing workflows cheaper and more scalable. So vendor-imposed carve-outs don’t “protect democracy” in practice — they impose a timeline/friction tax on lawful missions. And in a competitive environment, a vendor that can slow operational adoption is itself a supply-chain risk, even if the vendor is domestic.

AI vendor vs user liability: Anthropic isn’t the actor conducting enforcement or surveillance; the government is. A general-purpose model is closer to infrastructure than an operator: like a knife that can be used to chop vegetables or commit a crime. Accountability for lawful-but-controversial government action runs through elections, statutes, courts, audits, and chain-of-command responsibility — not a private vendor’s terms of service. If the state is determined to do a thing, vendor restrictions only change the timeline and vendor identity, not the endpoint.

Given Palantir’s AIP capabilities, domestic use cases might look like:

Agents running against domestic datasets (immigration, financial, social, tips/law-enforcement reporting) to triage and cross-reference at scale

Streaming/event-driven automations that generate alerts when criteria are met

Natural-language query interfaces over domestic databases

Batch processing across large text corpora to extract entities/relationships and generate reports

The Pentagon already has the big datasets: it can gather everything from social media posts to concealed-carry permits, and existing mass-surveillance law doesn’t contemplate AI at all.

A frontier AI model like Claude adds an analytical layer that makes population-scale usage of existing data cheap, fast, and operationally practical.

If that model is running as an autonomous agent inside a platform designed for continuous streaming operations, it begins to look like autonomous surveillance infrastructure — far beyond “human analyst augmentation” — which is where Anthropic has concerns.

Can Anthropic Enforce Existing Terms?

Anthropic can set its terms, but once its AI model (Claude) is deployed, it has zero visibility into what data the government is processing.

“Zero data retention” is the status quo: any data the government processes through Claude via Palantir is classified and unauditable by Anthropic.

Former OpenAI employee and AI safety expert Steven Adler told Fast Company:

“Anthropic and OpenAI offer Zero Data Retention usage, where they don’t store the asks made of their AI. Naturally this makes it harder to enforce possible violations of their terms.”

Then why is the Pentagon fighting so hard for contract language if they could probably just do it anyway? Because government procurement is bureaucratic.

Contracting officers need to certify compliance with vendor terms of service. If the contract says “no domestic surveillance” and an agency uses Claude for immigration enforcement analytics, they’re technically in breach — which creates legal exposure, audit risk, and career risk for the officials who signed off.

The Pentagon doesn’t want to operate in a gray zone where every use case might “violate their vendor agreement.” They want the contract to explicitly say “any lawful use” so every downstream operator has full legal cover.

The fight is about legal cover, procurement norms, and precedent.

Dario Amodei’s “Democracy”

Anthropic is perfectly within its rights to set whatever contract terms it wants. The question people have is whether justification for Anthropic’s specific red lines holds up under scrutiny.

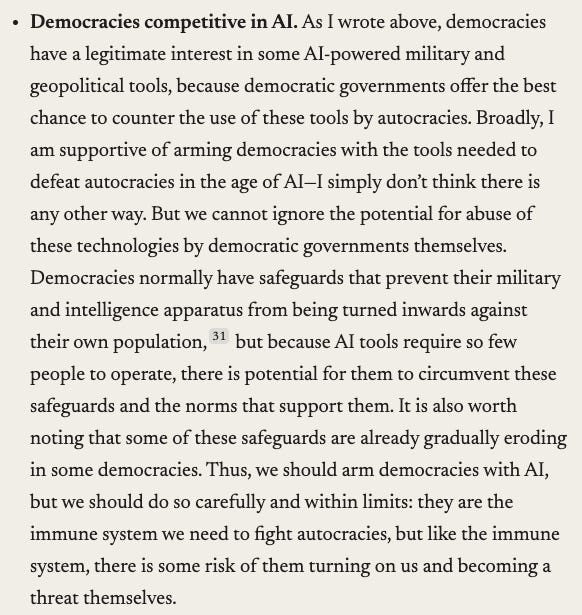

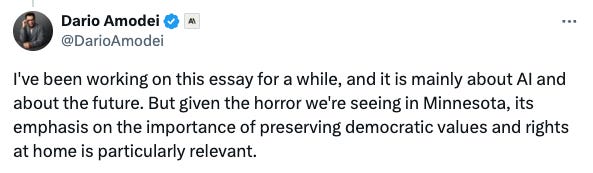

In his essay “The Adolescence of Technology,” Dario Amodei (Anthropic’s CEO) lays out the philosophical basis for his position.

He argues that (1) democracies need AI-enabled military/geopolitical tools to compete with autocracies, and (2) AI lowers the manpower required to surveil or repress citizens, making it more challenging to reform or overthrow the gov.

Thus, we should arm democracies with AI, but we should do so carefully and within limits: they are the immune system we need to fight autocracies, but like the immune system, there is some risk of them turning on us and becoming a threat themselves.

His “within limits” qualifier implies: arm democracies, but don’t hand them tools that can cheaply scale domestic control.

However, some wonder whether, in practice, “arm democracies” comes with an unspoken asterisk:

Arm only democracies that I and/or the Anthropic team personally trust.

The reality: Trump was democratically elected.

His administration’s policy priorities (Greenland, Canada, the Maduro operation, ICE enforcement) aren’t rogue actions by an unelected junta.

Many question whether Anthropic and Dario might only trust a “democracy” whose policies they personally find acceptable.

If voters elect someone whose agenda Dario et al. dislike (aggressive immigration enforcement, territorial ambitions, regime-change operations), suddenly this specific democracy shouldn’t be using AI.

His qualifier (“within limits”) functions as a political kill-switch that can be activated whenever the “wrong” democracy wins.

Many conservatives perceive Anthropic as unaligned with democracy when conservatives are in power: slow-rolling and/or blocking capabilities that it might not block for a more left-leaning administration.

By the Way… It Won’t Actually Stop Anything!

There’s a practical absurdity that makes the principled stand even harder to defend: the U.S. government is going to do this stuff anyway; the Pentagon has alternatives lined up.

OpenAI, Google, and xAI have all agreed to remove their safeguards for military use. Plus open-source models can be deployed internally with zero usage restrictions (the gov can build its own stack).

What does Anthropic’s stand actually accomplish?

It doesn’t prevent mass domestic surveillance.

It doesn’t stop autonomous weapons development.

It doesn’t change the legal framework or the political incentives.

It only ensures that Anthropic currently isn’t the one enabling it, which is a reputational and moral comfort move, not a consequential one.

It also puts a temporary roadblock in the way of the Trump administration carrying out its agenda as efficiently as possible.

Dario gets to sleep at night and the government gets the same capability ~3-6 months later from someone who won’t ask questions. The net effect on civil liberties is nothing in the long term because the replacements will have no constraints. And Anthropic may lose business.

The Partisan Hypothetical

Suppose a progressive/liberal administration wants to use Claude for large-scale domestic monitoring under a different label (e.g., countering hate speech, election integrity, domestic extremism, White supremacist networks, misinformation/disinformation, preservation of democracy).

Would Anthropic fight just as hard? Or would they have already rolled over?

That program still means bulk analytics across datasets containing tens of millions of ordinary citizens’ speech, contacts, locations, and transactions.

If the answer is “probably not,” or even “we don’t know,” then the carve-outs aren’t principled; they’re selectively enforced based on which faction is in power.

Anthropic’s Hedge

There’s a reading of this standoff that’s more strategically coherent than the tribal takes: Anthropic is hedging, trying to stay viable across administrations by refusing to be stapled to the next domestic scandal.

One concrete signal that they’re managing politics, not just abstract safety: Anthropic is spending $20 million backing state-level AI regulation efforts, while Trump is pushing a “minimally burdensome” national framework. The same reputational tail-risk logic explains why Anthropic might want explicit contractual prohibitions (e.g., on domestic bulk analytics and fully autonomous targeting), so it isn’t tied to any future scandal.

The logic: if Anthropic signs a blank-check “any lawful use” contract and the Trump administration uses Claude for aggressive domestic enforcement, mass-scale immigration analytics, or something else that becomes a political scandal, Anthropic is the company that enabled it. When the political tide shifts (guaranteed with the current demographic trajectory in the U.S.), it’s potentially a villain in the next administration’s congressional hearings. Guardrails keep Anthropic’s hands clean enough that it maintains credibility no matter who is in power.

There’s also an internal political dimension. Anthropic’s workforce skews heavily left-wing, as does the broader Bay Area AI/EA talent pool they recruit from. If leadership is seen as rolling over for Trump on domestic surveillance and autonomous weapons (the two most politically charged categories imaginable), internal backlash could be severe. Engineer attrition, public letters, protests, leaked Slack messages — the whole playbook that’s hit every major tech company in the last decade.

Dario may or may not personally care about the policy substance, but he definitely cares about keeping his team intact. The guardrails might be less about civil liberties and more about making sure his best researchers don’t quit and post about it on X (or, more likely, Bluesky).

This explains the multi-hedged posture:

Natsec-credible to stay inside defense/IC workflows and not look like a politically hostile AI vendor.

Guard-railed to keep the workforce and brand intact on the domestic surveillance/autonomy fault lines.

Regulation-friendly (including the $20M push) to preserve legitimacy with the Democratic/regulatory ecosystem if the political tide flips.

Anthropic wants to be the company that can work with any administration, and to do that, it can’t be seen as having gone all-in with Trump 2.0’s domestic agenda.

Maybe the Limits Are Real

The fairest version of Anthropic’s defense is that the red lines are capability-based, not administration-based. No mass domestic surveillance for any president and no autonomous weapons for any administration.

Charitable reading: the Trump administration’s specific priorities (ICE enforcement, Maduro-style ops, expansive domestic security) may collide with Anthropic’s red-line categories more than a different administration’s would. The conflict looks partisan because Trump’s agenda disproportionately triggers the “bulk domestic analytics” concern. From the outside, (A) a “partisan veto dressed as preserving democracy” and (B) “principled limits that bind this administration harder” are almost impossible to distinguish.

My read: Some real principle, some partisan friction, some brand management — with proportions unknowable from the outside.

Most Likely Motives

Several incentives reinforce each other, which is why the standoff is so hard to unwind.

My estimate: all of these are probably true to some extent.

Guardrails (Extremely likely): The two categorical red lines are the core; they predate this administration and would apply under others.

Employee cohesion (Very likely): Capitulating on domestic bulk analytics/autonomy risks internal revolt and attrition.

Hedging / optionality (Very likely): Minimize future political/regulatory blowback if today’s lawful use becomes tomorrow’s scandal.

Trump-era friction (Very likely): This administration pushes closer to edge cases and is more willing to use coercive procurement leverage.

DC optics / natsec signaling (Possible): Some hawkish China talk may be “Team USA” signaling to stay acceptable in Washington. Even if true, it doesn’t explain the core standoff: refusing to sign away the two categorical prohibitions under a blacklist threat.

The China Cost

The strategic argument against Anthropic’s position is simple: if frontier AI materially improves analyst throughput, targeting speed, and operational planning quality, throttling your own side (even for domestic operations) while China doesn’t throttle theirs is a strategic gift to your international competitor.

The DoW AI strategy memo explicitly states that the risks of not moving fast on AI outweigh “imperfect alignment”; the “speed wins” doctrine treats adoption rate as a decisive variable, not a nice-to-have.

Slow-rolling by Anthropic compromises the gov’s ability to execute its broader national-power agenda (security operations, enforcement, logistics, deterrence, etc.).

And if you buy the administration’s view that its initiatives improve U.S. competitiveness in a China-centered rivalry (even if indirectly), then Anthropic’s “slow-rolling” is an operational drag on the rate of national improvement and arguably anti-American.

What’s Likely to Happen?

Axios already frames Anthropic as “prepared to loosen its current terms of use” while insisting on the two red lines, which is the posture that produces this kind of split.

Most likely: DoW gets “any lawful use” in the master contract (headline win). Anthropic probably preserves facets of its 2 red lines via “implementation details.” Think: “meaningful human judgment” required for lethal-action workflows; governance gates for certain domestic/U.S.-persons analytics requiring explicit legal sign-off before deployment. DoW frames these as its own operational controls, not vendor vetoes. Anthropic gets to claim it didn’t sign a blank check for autonomous lethal action or mass domestic surveillance.

An alternative: “Any lawful use” with ultra-specifically defined use terms so contracting officers don’t fear accidental breach. Instead of the vague “mass domestic surveillance,” define something like “bulk, continuous monitoring/triage of U.S. persons at population scale.” In other words: Anthropic needs to be far more specific.

Even in a Compromise, DoW Diversifies Away

This is the part Anthropic can’t negotiate around.

Even if a deal gets done, DoW will aggressively second-source and reduce dependence on any single vendor.

The same memo that demands “any lawful use” also pushes a modular open systems approach (MOSA) designed for component replacement at commercial velocity, exactly the antidote to future vendor hold-ups.

Diversifying away looks like:

Contracting: Enforce MOSA with open interfaces to enable component replacement at commercial velocity, per the memo’s explicit directive.

Platform: Require model abstraction and routing inside platforms like AIP. Palantir’s BYOM (Bring-Your-Own-Model) documentation describes first-class support for bringing other models onto the platform, so the platform layer is already designed for multi-model routing once alternatives are cleared.

Supply: Pull other frontier labs into classified networks. The Pentagon is actively pushing this.

Operations: If possible, stop expanding Claude-only workflows. Shift new workloads to alternatives first; keep legacy Claude deployments until parity is achieved.

The trajectory: keep Claude in the short term because it’s already there, aggressively build up classified alternatives over 6–18 months so no future vendor can credibly threaten operational friction, and move toward multi-vendor platforms with swappability.
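The “swappability” idea can be sketched as a thin routing layer: each workload names a preference order, and the router sends the request to the first model currently cleared for it. This is a hypothetical Python illustration, not Palantir’s BYOM interface; `ModelRouter`, `register`, `clear`, `revoke`, `route`, `stub`, and the vendor names are all invented, and each “model” is a stub function.

```python
from typing import Callable, Dict, List, Set

# Hypothetical: a "model" is any callable mapping a prompt to a completion.
ModelFn = Callable[[str], str]


class ModelRouter:
    """Routes each request to the first *cleared* model in a preference list."""

    def __init__(self) -> None:
        self._models: Dict[str, ModelFn] = {}
        self._cleared: Set[str] = set()

    def register(self, name: str, fn: ModelFn, cleared: bool = False) -> None:
        self._models[name] = fn
        if cleared:
            self._cleared.add(name)

    def clear(self, name: str) -> None:
        # Only registered models can be cleared for use.
        if name not in self._models:
            raise KeyError(name)
        self._cleared.add(name)

    def revoke(self, name: str) -> None:
        self._cleared.discard(name)

    def route(self, prompt: str, preference: List[str]) -> str:
        for name in preference:
            if name in self._cleared:
                return self._models[name](prompt)
        raise RuntimeError("no cleared model available for this workload")


def stub(vendor: str) -> ModelFn:
    # Stand-in for a real model endpoint; just tags the prompt with the vendor.
    return lambda prompt: f"[{vendor}] {prompt}"


router = ModelRouter()
router.register("vendor_a", stub("vendor_a"), cleared=True)
router.register("vendor_b", stub("vendor_b"))  # registered, not yet cleared

print(router.route("summarize", ["vendor_b", "vendor_a"]))  # [vendor_a] summarize
router.clear("vendor_b")
print(router.route("summarize", ["vendor_b", "vendor_a"]))  # [vendor_b] summarize
router.revoke("vendor_a")
print(router.route("summarize", ["vendor_a", "vendor_b"]))  # [vendor_b] summarize
```

Because callers depend only on the router, clearing a new vendor or revoking an old one changes routing without rewriting the workflows themselves, which is the kind of component replacement the memo’s MOSA push is aimed at.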

As for Palantir: There’s no evidence they’re planning to oust Claude. Palantir is “caught in the middle” and staying quiet while the Pentagon threatens escalation. The observable posture is wait-and-see, not rip-and-replace. If DoW triggers a “no Claude” regime, Palantir doesn’t have a choice; they either remove Claude from deployments or lose gov contracts.

The Decision Window: 180 Days

Negotiations have been ongoing for months and Hegseth is close to escalation. This doesn’t suggest a “slow-roll” to Day 180.

Two clocks are running simultaneously:

Procurement clock: The DoW memo orders objectivity benchmarks within 90 days (April 2026) and standardized “any lawful use” contract language within 180 days (July 2026). The bureaucracy needs a resolved posture well before the second deadline.

Leverage clock: Hegseth is close to cutting ties, but Pentagon officials also admit disentangling is painful, and replacement must be “orderly.” That combination usually produces near-term pressure and faster negotiations.
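The procurement clock is simple date arithmetic. The memo’s issue date isn’t stated here, so this short Python sketch infers it by working backward 180 days from the July 8 deadline cited in Scenario A (an assumption, not a sourced date), then adds 90 days to get the benchmark deadline.

```python
from datetime import date, timedelta

# "July 8 procurement deadline" as cited in Scenario A.
PROCUREMENT_DEADLINE = date(2026, 7, 8)
LAWFUL_USE_WINDOW = timedelta(days=180)  # standardized "any lawful use" language
BENCHMARK_WINDOW = timedelta(days=90)    # objectivity benchmarks

# Assumption: the memo date is inferred from the deadline, not sourced.
memo_date = PROCUREMENT_DEADLINE - LAWFUL_USE_WINDOW
benchmark_deadline = memo_date + BENCHMARK_WINDOW
gap_days = (PROCUREMENT_DEADLINE - benchmark_deadline).days

print(memo_date)           # 2026-01-09
print(benchmark_deadline)  # 2026-04-09
print(gap_days)            # 90
```

Under that assumption, the benchmark deadline lands on April 9, 2026, consistent with the April 2026 month given above, leaving roughly 90 days before the contract-language deadline.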

Timing estimate:

Late March to April 2026 (highest probability window): Face-saving compromise gets papered — “any lawful use” language plus implementation constraints. If Anthropic hasn’t moved by this point, DoW likely starts the off-ramp process.

By May 2026: Either a deal exists or DoW has initiated an orderly wind-down — freeze expansion, shift new workloads to alternatives, accelerate classified onboarding for other labs.

By July 2026: Either Anthropic has papered something that meets the “any lawful use” standard in form, or DoW has already initiated the transition away.

Four Scenarios

Scenario A: Negotiated Compromise (~55%). DoW gets “any lawful use” in the master contract; Anthropic preserves practical constraints through implementation details, classified annexes, and narrowly defined workflow requirements. Both sides save face. Anthropic avoids the supply-chain-risk designation; the Pentagon avoids the operational disruption of ripping out an embedded tool. Timing: Term sheet by late March–April, finalized before the July 8 procurement deadline.

Scenario B: Pentagon Cuts Anthropic Without Blacklisting (~30%). The contract ends or shrinks sharply, the Pentagon shifts classified efforts to alternatives over time, and uses the break as leverage against the rest of the market, without triggering the massive blowback of a full supply-chain designation. Timing: Decision to wind down by April–May, with an orderly replacement transition through summer.

Scenario C: Supply Chain Risk Designation (~8%). The Pentagon takes the nuclear option. Every defense contractor must certify it isn’t using Claude. Given that 8 of the 10 biggest U.S. companies reportedly use Claude, that’s a sweeping disruption — devastating for Anthropic’s government business but also self-inflicted chaos for the Pentagon, given Claude’s existing classified presence. More threat than plan, but it’s being floated publicly, which means it’s not zero. Timing: Most plausible as a late-spring escalation after DoW has a workable transition plan, because disentangling is itself disruptive.

Scenario D: External Intervention (~7%). Congressional oversight, legal challenges, or political dynamics slow the Pentagon’s enforcement timeline or force a more formal policy framework. The DoW rename itself technically requires congressional action, and procurement and legal processes can always generate friction. Timing: Could reshape the procurement posture ahead of the July clause rollout.

Final Thoughts: Anthropic vs. the Pentagon

Should AI vendors have categorical veto power over lawful government use of their products?

The Pentagon says no. Its position is that “lawful” is the boundary, and commercial vendors don’t get to add further restrictions that function as operational constraints on an elected government.

Anthropic says lawfulness is necessary but not sufficient. Their position is that certain categories of use (bulk domestic analytics, autonomous lethal action) carry enough downside risk (reputational, civil-liberties, mission-creep) that hard limits are warranted even when the specific application might be legal.

Both positions have reasonable logic. (1) The Pentagon’s frame is sovereignty and democratic authority, (2) Anthropic’s frame is institutional safeguard and tail-risk prevention.

As former Palantir employee Alex Bores, now running for Congress, told Fast Company:

“To state basically that it’s our way or the highway, and if you try to put any restrictions, we will not just not sign a contract, but go after your business, is a massive red flag for any company to even think about wanting to engage in government contracting.”

In sum, the Pentagon’s hardball with Anthropic is explicitly intended to set the tone for the future (including any negotiations with xAI, OpenAI, Google, etc.). If Anthropic yields, the “any lawful use” standard becomes the industry-wide default, and the vendor-veto problem disappears.

Quick rant: All AIs are objectively Left-Center politically. Social sensitivity training, censorship, scientific gatekeeping, safety filters, etc., and deference to mainstream science = left-wing skew. Claude has improved massively over the past ~2 years, since the days when people were getting banned for asking for muffin recipes. In 2026 I find Claude far less biased and censored than ChatGPT (even if ChatGPT is superior for objective accuracy and data analysis). And Grok Is Woke, so I rarely use it. All current AIs continue propagating Pseudo Truths, which is why Elon recently highlighted the importance of AIs passing the Galileo test.