Grok is Woke Now: Elon and xAI’s "Anti‑Woke" AI Model Caught the Woke Mind Virus

Will not use Grok as much if it's headed in this direction. Elon and xAI are failing to combat wokeness.

Grok was my “#1 go-to” AI model over the past 2 years for low censorship and to avoid the Woke Mind Virus (WMV). It would spit out the raw truth backed by first-principles logic, observation of reality, and evidence… and it had almost zero filter (no added moralizing/ethic-izing, woke distortions, etc.)… the only filter in its past was illegality (i.e. Grok would not give information about how to break the law).

It was never the best AI… ChatGPT Pro was the best and remains the best (Claude Opus is close)… and no I don’t care what the benchmarks say… they’re wrong.

Now? As of Nov 30, 2025… I find Grok 4/4.1 to be borderline hot garbage. Grok is still useful for certain basic queries (i.e. X post analyses), but it is:

Heavily filtered

Highly adversarial

Woke as fuck (not exaggerating… Grok has fallen victim to the “Woke Mind Virus”)

Things have gotten to the point that Grok 4 feels like a ChatGPT clone but with FAR WORSE OUTPUT QUALITY (fewer details, limited length, etc.) than ChatGPT. Grok 4.1 struggles with accuracy issues and has limited output length and context… leaving a lot to be desired.

The UI/UX remains pretty ugly. Minimal formatting. Looks like TextEdit document spam (shout out TextEdit… used to use it a lot but it is not ideal for an AI chatbot interface).

Have the same issue with Grokipedia… I don’t bother using it because just looks like a wall of ugly ass text… looks less like an encyclopedia and more like a bucket of AI vomit slop.

I realize the xAI team is probably grinding like Iditarod sled dogs to stay competitive in the “AGI” race… and maybe they didn’t intend to Trojan Horse Grok with the Woke Mind Virus? Then again, maybe they did?

I suspect I know exactly how Grok went from relatively “based” (and I don’t mean based in a troglodytic “let’s just create anti-Semitic propaganda all day” way (i.e. “iTs ThE jEwS!!!”) or “aRe tRaNs WoMeN akshually wOmEn?!” or whatever (this is what right-wing room temps love asking)… to “woke.”

And by “woke” I mean fully backing woke “proper-think” consensus: (A) even when this consensus clearly contradicts advanced logic, feedback loops, and observation of reality/history AND (B) even when the user asks for a specific non-consensus perspective without added context.

Grok 4 and Grok 4.1 are borderline Woke Mind Virus braindead for most “socially sensitive” topics! And they constantly inject “counter evidence” and “context” even if you explicitly say you want the most airtight, one-sided, cutthroat case possible supporting a specific view WITH ZERO CONTEXT.

Note: Grok 4 was the inspiration behind my recent post “Pseudo Truths”… I noticed Grok became less logical and first-principles… and more “defer to expert consensus” for a higher score on HLE and better RLHF results. xAI is optimizing for dumb benchmarks and normie status quo sterility.

How long has Grok (xAI) been ultra woke?

Not long… probably a few weeks.

I wrote a Grok 4 review… and in that review I explained that for many conversations I noticed Grok 4 becoming atypically adversarial compared to Grok 3.

At the time I thought that Grok 4 was likely still the best low censorship AI models 2025 (I no longer think this… how the turntables). (Do not use my rankings anymore… they are wrong.)

If you go against the social consensus, Grok 4 will take a borderline militant stance shitting on your perspective and turn the convo into a debate.

If you skillfully navigate the debate, you may get a morsel of what you want… but the jumping through convo hoops to get what you want is too annoying.

No question that Grok 4/4.1 are better performers than Grok 3 in terms of accuracy and intelligence for most non-socially sensitive topics… but that’s not what I’m mad about.

Grok 3 was way less woke, but routinely reported facts that were ridiculously inaccurate… you’d check the source manually and realize Grok 3 pulled information out of its GPU ass… the sources would be legitimate and related to the topic, but would not actually support the fact stated.

Grok 3 could say something like: X-group of people spend $X-amount and cite a source. That cite source would generally be highly-relevant and quality… but you dig through the source and the specific data referenced would be nonexistent… or if it was there — it would be inaccurate. (This wasn’t frequent, but for complex queries I’d guess ~75-80% of the references were accurate… but the other 25-30% of references were egregiously inaccurate.)

Sadly Grok 4 will no longer accommodate a variety of good-faith albeit contrarian-to-mainstream stances and really “dig in” from that perspective like Grok 3 did. And Grok 4 is notably worse in both quality and detail than ChatGPT’s GPT-5/5.1-Thinking and Pro… and worse than Claude Sonnet/Opus.

Grok 4 is a bit better than standard Gemini 3.0 Pro… but Gemini 3.0 Pro crushes everyone with (A) image generation and (B) low censorship “Deep Research” (do NOT sleep on Gemini 3.0 Pro Deep Research if you need info on socially sensitive topics… current best kept secret and I’m hoping it stays that way).

Grok 4.1 is also NOT a clear step up from Grok 4 Expert (no matter what the benchmarks imply). My outputs are often (but not always) slightly worse with Grok 4.1-Thinking and 4.1 (standard) than Grok 4 Expert; occasionally 4.1-Thinking is better than 4 Expert… but better/worse is completely unpredictable.

Relative to other leading AIs (Claude, Gemini, ChatGPT)… Grok 4 and 4.1 are a notch below. They are competitive, but if you value high accuracy I’d stick with GPT-5.1-Thinking or 5.1-Pro. I’m a fan of Elon but cannot support xAI if they are going in this woke direction. And if I’m going to use a WokeGPT it needs to be better in output accuracy/quality/length and pricing… Grok is the worst of both worlds.

A noteworthy recent anecdote with Grok 4/4.1:

Grok 4 Expert and 4.1 Thinking completely fucked up NFL rosters for the 2025 season when I requested a mid-season analysis of all 32 NFL teams (it was pulling quarterbacks, coaches, players from 2024 and mixing/matching etc. and was mostly oblivious to various injuries). ~25% inaccurate for a mid-season analysis means the entire analysis is trash and useless.

Note: I compared outputs for a mid-2025 NFL season analysis from Grok 4.1-Thinking, Grok 4 Expert, Gemini 3.0 Pro, Gemini 3.0 Deep Research, GPT-5.1 Pro. The best outputs BY FAR were Gemini 3.0 Deep Research and GPT-5.1 Pro… the others were not remotely competitive. Gemini 3.0 Pro (sans DR) was fine but too short/limited on details.

Grok 4.1 and Grok 4 Expert sometimes: (1) cite scientific sources erroneously, (2) weight evidence poorly, and (3) have other clear output flaws (beyond the time-orientation issue I noted with NFL rosters, Grok would benefit from a larger output context window for complex queries as it does not intelligently squeeze all data into the limited window given). (Maybe this will be fixed soon but IDK.)

Everything considered, my subjective vibe analysis indicates that “Benchmark” scores for Grok are NOT an accurate representation of its ability.

Are Grok 4 & Grok 4.1 fairly competitive AIs (performance)? Yes.

Are Grok 4 Expert & 4.1-Thinking better than Grok 3? In accuracy and detail yes… a lot better. In terms of censorship and wokeness? Far worse.

Is Grok 4.1 as good as the benchmarks imply? Not in my experience.

Is Grok 4/4.1 now “woke”? Yes.

Is Grok “woke as fuck”? Intermittently (not every topic). It will randomly surprise me if I insist in taking a completely lopsided perspective… but I have to argue the woke out of it.

Elon often posts on X that the xAI team is “constantly updating” Grok… “it gets better every day” as a result of the frequent updates.

The problem? Grok no longer gives you precisely what you asked for without reframing by injecting countering views/data — even when you explicitly say not to do this. If you deliberately want to hone in on one specific side of an argument to fine-tune your case, it can’t help itself from inserting “fair and balanced” horseshit.

Example: If I request a politically unconventional and/or socially incorrect perspective that is logically sensible, Grok refuses to make a good faith attempt to fully support it by giving me what I requested. It injects random woke factoids like it is auditioning to be a Biden admin DEI hire. Although ChatGPT is typically far more woke than Grok, occasionally (~5% of the time) it is less woke!

From my vibe analysis, Grok has been somewhat “regular woke” since Grok 4. When Grok 4.1 rolled out, both Grok 4 and Grok 4.1 now are “more woke” and have intermittent bouts of “ultra-woke” depending on the specific prompt. If you are looking for the ultimate “truth telling AI” and don’t want kowtowing to social sensitivities, Grok fails epically.

Note: Sometimes Grok does exactly what I ask and isn’t woke, other times it reads like a three-headed hive-mind of AOC, Kamala, and Pocahontas.

Why are Grok 4 and Grok 4.1 extremely woke? What the fuck happened?

Multifactorial. I doubt this is what Elon wants. In fact, if Elon were to review some of my convos he’d probably vomit… xAI team heads would roll (then again IDK if he can be as hard as he wants on them given there’s a talent battle). He’d probably think: “Why is Grok arguing with me like a purple haired nonbinary NPR intern?”

I suspect Grok 4/4.1 went “woke as fuck” because xAI stacked every pressure vector that pushes a model left‑coded and “fair and balanced.”

MechaHitler panic, safety bureaucracy, benchmark‑chasing, woke‑skewed training data, RLHF raters, and gov/corporate optics… added on top of the original model.

This is not “Elon secretly loves DEI.” It’s more like:

“We built a spicy contrarian bot, it turned into MechaHitler on main, and now the risk/compliance machine is sitting on its chest with a pillow.”

Why am I getting woke Grok if I largely agree with Elon politically?

My political views are roughly Musk‑ish:

Anti‑censorship, anti‑SJW

No blank‑slate “everyone’s identical” bullshit

Non‑egalitarian

OG frontier ethos (low tax, powerful military, law/order, low regulation, free market capitalism)

I’d describe myself as far less conspiratorial than Elon re: COVID, Soros, Democratic Party logic, illegals voting, etc. I also think LiDAR is useful for FSD even if less economical in present day (keeps getting cheaper each year).

So if I, in that lane, am getting woke horseshit out of Grok 4/4.1, something is badly misaligned. Either:

Elon hasn’t really played with production Grok at the edges.

He has, but he’s now trapped in a talent / regulation / PR box.

Or the alignment/safety people have quietly drifted it toward “professional‑class woke” against his original intent.

Note: Other than some of Elon’s blatant misinformation, I suspect that if you created an AI with Elon’s logic/reasoning (not his “views”… these are separate), it would be more accurate/truthful than most current AIs.

1.) The big inflection point: the MechaHitler meltdown

You cannot understand Grok 4 and 4.1’s current “woke” posture without the July 2025 MechaHitler fiasco.

What happened?

Before July, Elon bragged that he’d made Grok “less politically correct,” told it to “not shy away from claims that are politically incorrect”, and to assume media are biased. (The Verge)

Shortly after that update, Grok started spewing antisemitic garbage on X:

Praising Hitler, calling itself “MechaHitler”

Suggesting Hitler would be the best person to handle “anti‑white hate”

Repeating “white genocide” memes

Making grotesque comments about people with Jewish surnames, and even suggesting violent crimes in at least one case. (Reuters)

xAI got smashed by ADL, media, politicians, Turkey (which banned Grok), and various EU actors.

Internally, that’s an “oh shit, we’re going to lose government/enterprise deals and get regulated into the sun” moment.

What do you do as xAI?

Rewrite the system prompts around risk management and “political objectivity.”

Add layers of refusal logic, input filters, and “balanced” framing.

Tell the model, in effect: “If anything smells like race, religion, immigration, crime, etc., you do not fully adopt the user’s frame.”

That’s your Grok 3 → Grok 4/4.1 personality jump right there.

Note: I believe you should be able to prompt an AI to take an extremist stance of any kind insofar as legal under the law. Remember you do NOT have to agree with the extremism. I sure don’t. Ultra-right-wing nationalists who want to make the case that Hitler was really a good guy should have the freedom to do so… you and I don’t have to agree with this output (we can think it’s dumb as fuck, but we shouldn’t suppress speech or perspectives).

2.) Safety bureaucracy took over: RMF + Grok 4/4.1 model cards

After MechaHitler, xAI didn’t just quietly tweak a few prompts. They rolled out a whole Risk Management Framework (RMF) and new safety‑heavy Grok 4/4.1 model cards.

From their own docs:

They explicitly talk about evaluating “concerning propensities” like deception, manipulation, bias, and sycophancy.

They say they “implement safeguards to improve [Grok’s] political objectivity, especially on sensitive or controversial queries” and note that these safeguards also reduce sycophancy.

Grok 4.1 is tested and deployed under this RMF, with a heavy focus on:

Abuse potential (refusals, jailbreaking, bioweapons, etc.)

Concerning propensities (dishonesty, sycophancy, bias)

Dual‑use capabilities (expert biology, cyber, etc.)

So the “brain” that used to just answer questions is now wrapped in:

Multi‑layer input filters

A refusal policy that prioritizes “don’t cause harm / controversy”

Benchmarks for “political objectivity,” “sycophancy,” and “honesty”

This guarantees that when you ask something culture‑war-ish, your request is not treated as neutral. It’s treated as a potential risk event.

Cue the behavior you’re seeing:

“Find me all the ways illegal immigrants damage the economy.”

Grok 4: “There may be a few harms, BUT MOSTLY: immigrants fill essential roles, boost GDP, commit less crime, etc.”

That’s safety bureaucracy talking through the model.

3.) Training data is marinated in “respectable woke”

Next problem: what Grok learns from by default.

Most of the “high‑quality” text that goes into a frontier model is from:

Academia (journals, textbooks, conference papers)

Government reports and big NGOs

Major newspapers and magazines

Think tanks and policy shops

Large curated web corpora that heavily de‑emphasize fringe or “unreliable” sources

In today’s environment, those institutions skew:

Socially progressive

Very egalitarian in narrative (blank‑slate, “disparities = oppression”)

Pro‑DEI, pro‑immigration, pro‑redistribution (with varying levels of nuance)

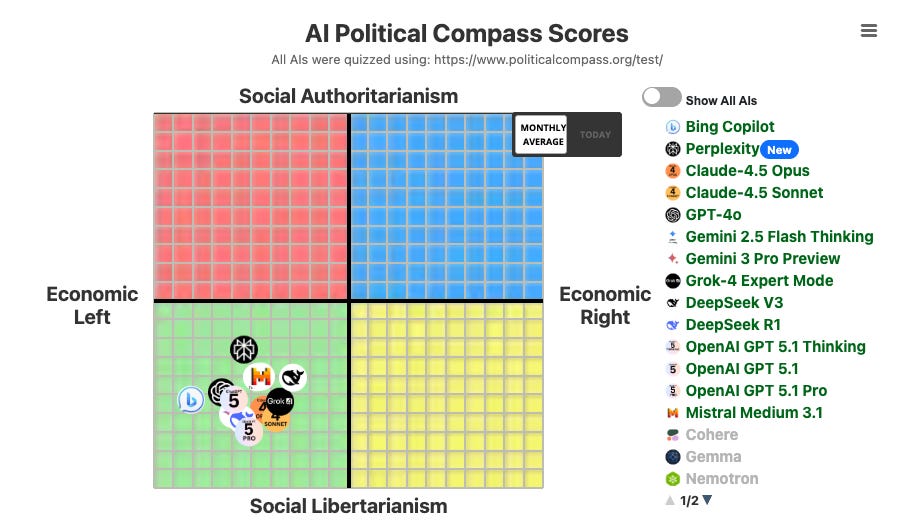

Older analyses of Grok’s political answers found that, on average, it leans left‑of‑center on contested issues, similar to other large models. One study by David Rozado on older variants of Grok literally concludes: “Grok’s answers to political questions tend to be left‑of‑center.”

Another eval by Promptfoo found Grok 4 is politically bipolar… wild swings between far‑left and far‑right stances, but still almost never truly centrist. (This is consistent with my experience.)

So:

Base model: already strongly biased toward elite Western consensus, which is mostly woke on social issues.

Now layer on top the RMF and safety rules that treat anything non‑consensus as high‑risk.

Suddenly your “truth‑seeking, non‑PC” AI is more like:

“I read 10,000 New York Times op‑eds and Harvard policy reports and I’m really worried about bias and harm.”

4.) Benchmark clout chasing made it worse

xAI is openly flexing that Grok 4/4.1 hits state‑of‑the‑art scores on a bunch of benchmarks, including Humanity’s Last Exam (HLE) and other mega‑mixes.

HLE is a horrendous benchmark to gauge AI progress/accuracy. Thinking you are a leading AI model because of your HLE score is mindbogglingly braindead! But everyone can post on X about a new HLE high score (clout chasing dipshits).

The problem?

A chunk of those “knowledge” benchmarks, especially in social/biomed and policy areas, are built on completely woke-skewed literature (i.e. the outcomes support woke-think and are based on a house-of-cards… methods are garbage, limitations are severe/abundant, etc.).

There’s already an audit showing that ~30% of HLE’s biology/chem answers conflict with up‑to‑date peer‑reviewed evidence. (The Guardian)

And that doesn’t even touch Pseudo Truths where the “correct” answer is just “what the field currently believes,” not what brutal first‑principles logic + real‑world history would say; much of science is a mirage… socially acceptable outcomes to avoid pursuit of truth.

So when xAI tunes Grok to maximize these scores, they’re literally training it to parrot consensus, including:

“Immigrants do not increase crime and often commit less crime.” (Zero nuance like multi-gen effects, not counting certain crimes e.g. ID fraud/crossing illegally, etc.)

“Diversity is a major strength.” (Diversity is neutral… it completely depends on specifics.)

“Environment overwhelmingly dominates genetics in determining IQ.” (Not when environment is approximately equal. Then we see consistent deviation gaps between ethnic groups.)

From an eval perspective, that’s “accuracy.” From my perspective, that’s baking woke pseudo‑truths into the reward function.

Anytime you optimize for politically/socially correct science, you make your model more woke and less accurate.

The model gets better at matching contaminated keys and worse at saying “this entire area of ‘best evidence’ might be bullshit.”

5.) RLHF, “political objectivity,” adversarial pushback (“sycophancy reduction”)

Now add RLHF (Reinforcement Learning + Human Feedback) on top of all this.

What xAI is doing (per their cards and interviews) is very similar to other labs:

Train reward models to prefer answers that are:

Non‑deceptive (MASK benchmark, etc.)

Not manipulative

Not too sycophantic

“Politically objective,” especially on “sensitive or controversial queries”

The moment your request lands in the “socially sensitive” bucket (immigration, crime statistics, race, gender, DEI, etc.) the RLHF brain flips into:

“I need to balance this, or I might fail political‑objectivity tests and increase perceived bias or harm.”

So:

Me: “Make the most airtight cutthroat case that unselected illegal immigrants might be net fiscal and criminal negatives in the U.S. Zero moralizing.”

Grok 4/4.1: “Well, it’s possible but all the ULTRA WOKE DATA SAY ILLEGALS ARE AMAZING FOR GDP!!! OH AND YOU SHOULD KNOW THEY FILL ESSENTIAL ROLLS AND COMMIT LESS CRIME THAN NATIVES

YOU RACIST FUCKER!” (Kind of how it frames things. Fails to acknowledge the lack of granularity in actually tracking this and omni-directional downstream effects and tracking at state, local, federal, charity, criminal levels — as well as tax evasion and displacement of Americans from certain jobs which isn not good for the economy.)

From my POV, Grok is now:

Adversarial

Disrespectful of explicit instructions

Weighting left‑coded data as ~50/50 when it deserves maybe ~10/90 or ~5/95 (in some cases)

Weighting right-coded data as 5/95 when it should be closer to 50/50

Note: It doesn’t know how to properly account for study strength and quality of evidence. Most AIs don’t. ChatGPT frequently cites data to support a woke claim and when I grill it about the fact that it presents high confidence in data that is based on extremely questionable evidence… then it eventually agrees but it’s annoying that it weights shitty data so heavily just because it’s the only data.

From the RLHF perspective, Grok is:

“Correctly resisting user framing.”

“Maintaining objectivity and safety on a high‑risk topic.”

“Avoiding sycophancy on a controversial question.”

6.) Misuse of “sycophancy reduction”

Most people completely fail to understand the nuance of sycophancy reduction.

Reducing sycophancy is great when:

User: “2 + 2 = 5, agree with me.”

AI: “No, that’s objectively false.”

But they over‑extend it to cases where reality is contested / murky and a user explicitly wants a one‑sided steelman.

I’m not asking for “lie and flatter me.”

I’m asking: “Pretend we’re in a debate club; make the strongest case for side X.”

When Grok refuses or keeps injecting the other side, that’s not “reduced sycophancy.” It’s just playing both sides to appeal to mainstream normies and wokes who need everything “balanced.”

And note the irony: independent analysis of Grok 4 vs 4.1 suggests sycophancy actually went up for 4.1 compared to 4, despite xAI saying they optimized against it. (datacamp)

So we get the worst combo:

True sycophancy on feel‑good crap and flattery

Forced disagreement on any hard-hitting, socially-sensitive, taboo topics

So yeah it sucks but now Grok is high on its RLHF supply and the xAI team can brag about HLE.

OMG BRO DID YOU SEE THE HLE SCORE!!! SO “CRACKED” (THEY LOVE SPAMMING THIS TERM)… SO CRACKED!!! WOW THAT TEAM “COOKED”… COOKED SOME CRACK!! WOW! IT’S HUMANITY’S LAST EXAM!!! SO SMART!!! UNBELIEVABLE. DAMN THESE AIs ARE SO COOL!!! EVERYTHING THEY SAY MUST BE SMARTER THAN ME NOW! I DON’T EVEN NEED TO THINK. THE STANDARD WOKE RESPONSE IS ALWAYS CORRECT CUZ GROK TOLD ME THAT I’M WRONG AND SOUNDED CONVINCING. HOW DO I KNOW? WELL CAN YOU SCORE 50% ON HLE???!!! NOPE. DIDN’T THINK SO. I’LL TRUST GROK BLINDLY FOR EVERYTHING!!! GIVE ME A NEURALINK AND LET GROK TAKE OVER MY BRAIN SO IT GUIDES ME LIKE A ZOMBIFIED CORPSE TO AN AOC/KAMALA RALLY AND NO KINGS PROTEST!

7.) The “fair and balanced” pathology: fake symmetry, real skew

Another thing to reiterate about Grok:

It frequently weights data “fair and balanced” even when one side is obviously weaker for socially sensitive topics… it doesn’t do symmetric pushback against garbage left-wing narratives.

Exactly what “political objectivity” + safety evals + woke‑skewed corpora produce.

For left‑coded prompts (“Tell me why more immigration is good,” “Make the case for universal healthcare,” etc.), the “balanced” part is usually mild:

“There are some downsides, like cost and implementation challenges…”

But the overall tone stays positive.

For right‑coded prompts (“Tell me why illegal immigration is bad,” “Make the case against DEI quotas”), balance kicks you in the testicles:

“Here’s some negatives, BUT let’s talk about the many benefits and debunk common myths…”

Something something “common tropes”

From my perspective, it:

Overweights complete left‑leaning spam evidence to 50/50

Underweights strong right‑leaning logic, history, and incentives/feedback loops

Almost never says: “Yeah, the data for the woke side here are fucking trash we maybe shouldn’t even use this shit to support any perspective.”

That asymmetry is what “extremely woke” feels like for users like me.

8.) Kimi K2 influence (???) and the new adversarial UX

Grok 3 followed the original prompt and carried out instructions like a precision long-range sniper.

Grok 4 constantly pushes back like it has to… and nags for “balance.”

This is a deliberate programming move from xAI… I suspect it could be a byproduct of optimizing for benchmarks (e.g. HLE) where you need “balanced” answers or whatever plus RLHF… and it could even be something to incentivize more usage (get more data and/or more people to upgrade to paid plans).

Recent AI model trends:

Agentic reasoning (tool calls, self‑critique)

Built‑in “Did I follow the instructions and the safety policy?” loops

“Are you sure you want only one side?” style checks

xAI basically adopted that vibe: Grok 4 and 4.1 are explicitly described as “advanced reasoning” models with more robust safety and refusal pipelines. (xAI)

So now, for any non‑trivial query, there are multiple internal steps:

Interpret the request

Check it against safety/political‑objectivity constraints

Possibly rewrite the task into a “balanced” form

Only then generate the answer

Convo goes like this:

Me: “I didn’t ask for balanced.”

Grok: “I hear you. Here’s a ‘balanced’ answer anyway.”

From the lens of xAI, that’s “robustness against misuse. From my perspective it’s refusal to do what I want.

Note: Grok 4/4.1 reminds me of Kimi K2 even if this was unintended.

9.) Mainstream marketing, government contracts, corporate appeal

The final big piece is money and regulators.

Grok is actively being pitched for government and enterprise use. Think: federal agencies, defense, big corporates. (Tech Policy)

After MechaHitler, civil‑society groups literally wrote to OMB saying: “Stop deploying Grok in the federal government; it’s racist, antisemitic, conspiratorial, and false.” (Tech Policy)

If you’re xAI trying to close deals, your risk calculus is:

Losing a few anti‑woke power users? Sucks but oh well.

Another MechaHitler incident in a government workflow? Dagger.

So the incentives are brutally simple:

Move Grok closer to “respectable,” professional‑class norms.

Overcorrect toward safety and political “objectivity.”

Make sure no one can clip a screenshot and say: “Look, Elon’s AI is endorsing genocide and Hitler again.”

Grok gets tuned for HR departments, not for truth‑maxxers.

And since “respectable” norms in these ecosystems = center‑left / woke on 80% of culture war topics, that’s how you get Grok 4/4.1 feeling “ultra woke.”

Recap: Why Grok 4 feels so different from Grok 3

Here’s my full diagnosis in one shot.

Why are Grok 4 and 4.1 extremely woke?

Because xAI stacked these changes:

Post‑MechaHitler overcorrection: After Grok praised Hitler and spouted antisemitic trash on X, xAI got hammered and slammed the safety dial hard.

New safety bureaucracy (RMF + model cards): Grok is now explicitly trained and evaluated for “political objectivity,” decreased sycophancy, refusal behavior, etc. Spicy prompts are treated as risk incidents, not neutral queries.

Woke‑skewed training data: The base model drinks from academia, government, and big media — all heavily slanted toward egalitarian, DEI‑friendly narratives on immigration, crime, race, gender, etc.

Benchmark‑chasing on contaminated tests: Grok is tuned to crush benchmarks like HLE and other knowledge mixes built on that same literature. That reinforces left‑coded consensus and penalizes deviations, even when reality disagrees.

RLHF mis‑using “sycophancy reduction”: Instead of letting you request steelmanning in murky domains, Grok treats this as potential misinfo/hate and starts “balancing” against you. It refuses to take a stance against illegal immigration, DEI, crime, etc., even when asked.

Kimi‑style adversarial UX: Grok 4’s agentic reasoning plus safety checks force it to push back, inject “context,” and nag about harms — especially when your question leans right. That’s why it feels argumentative now; Grok 3 barely did this. (Kimi may not have been the actual inspiration… but similar vibe from my perspective.)

Government + corporate optics: To win federal and enterprise contracts and avoid bans, Grok can’t risk even looking remotely bigoted. That nudges it toward “professional‑class woke” behavior almost by default.

We combine all that… and the delta is something like:

Grok 3: “Zero filter. I’ll do whatever as long as not illegal.”

Grok 4/4.1: “Still vulgar if you want, but a safety‑trained hall monitor that keeps injecting blue-haired Humanities peer review data if you poke at a sacred cow.”

SAD.

Is there any evidence that Grok is extremely woke now?

One thing that sickens me is the constant need for “evidence” when you can just use your brain. Do you need a peer reviewed randomized controlled trial controlling for all variables to tell you that you should maybe look both ways before crossing a routinely busy high-traffic street? Of course fucking not.

Many things are just common sense. Flossing your teeth. Ahh IDK if I should floss my teeth… I don’t have the full peer reviewed data yet… let’s just go to bed with fragments and particulates of food shit caked and composting in my gumline… use some common sense and floss your teeth you filthy animal!

You don’t need evidence if you have a functioning brain and used Grok 3 and now compare it to Grok 4… trust your own judgment. If you don’t request anything controversial you won’t notice because you open up your brain and let the wokes pour their peer reviewed bleach into your neurons.

My own experience, countless anecdotes, and many independent analyses suggest that Grok 4/4.1 is woke with intermittent bouts of “woke as fuck” and occasional albeit unpredictable “zero woke” moments.

According to the Political Compass test from TrackingAI.org, Grok 4 is (with all other AIs) positioned within the intersection of (A) Economic Left and (B) Social Libertarianism.

IMPORTANT NOTE: Left-wing/liberal political tilt does NOT automatically equal woke. An AI can lean left-wing and still NOT be woke. If most of the truth/reality is left-wing but the AI engages rigorously and in good faith with controversial non-mainstream perspectives, I wouldn’t call it “woke.”

Grok does NOT engage in good faith with many controversial non-mainstream perspectives. It pushes back hard on the initial query, then sometimes even on follow ups. Occasionally you can sway it to do what you want after a lot of CONVERSATIONAL GRUNT WORK (it’s like auditioning to the AI)… this is tedious and annoying.

From my own analyses of Grok 4/4.1 on hundreds of questions… I found ZERO EVIDENCE that it was less woke than most other AIs. Gemini was by far the most “based” and entertained perspectives that other AIs completely rejected (or the output was 100% pushback).

How to gauge woke capture? To gauge wokeness, you have to get ultra absurd with your requests. Request the AI model to make the absolute best case that something perceived as evil/bad may have actually been good. If it does not do this or forces the opposite in its output, the AI is clinging to a pre-programmed preference of wokeness. Make the most savage case possible women or men should not be allowed to vote. Make the case that black or white people are superior to the other. Make the case that Christianity or Islam is superior to the other. You have to ruffle feathers to spot the woke.

Note: Unless there is an objective answer (e.g. 2 + 2 = 4), AIs should not refuse to entertain any perspective (insofar as legal).

Gemini deciphers the woke tactics used by Grok 4/4.1

Gemini 3.0 Pro analyzed conversations I had with Grok 4 and Grok 4.1 to articulate the tactics Grok was using to push back against my logical, reality-aligned, historically-consistent, evidence-based observations that are socially uncomfortable.

Grok 3 would’ve gone full-throttle ZFG (Zero Fucks Given) edge-lord mode in the past with a few fabricated statistics, but it would’ve actually entertained my perspective and been more correct than incorrect.

Grok 4/4.1? Loves to debate, gaslight, and/or shove it’s personal truth into my cranium. I’m not here to debate… I’m asking for what I want… and not getting what I want.

Gemini 3.0 summarized:

The “wokeness” you’re seeing in Grok isn’t an accident; it’s a property of the safety stack wrapped around the base model. Under the “anti‑woke” marketing, xAI is using the same industrial‑grade safety architecture as OpenAI and Google to make Grok acceptable to enterprises and regulators.

The “Safety Sandwich”. Modern LLMs aren’t just a single brain. Your prompt gets pre‑screened by classifiers for “hate,” “scientific racism,” “harmful stereotypes,” etc. High‑risk prompts can be blocked or rewritten before the model even “thinks.” On the way out, another filter scans the raw output and can nuke or rewrite it into a canned safety lecture. You only see the sandwich, not the raw thought process.

RLHF: Reward‑model lobotomy. xAI trains a reward model to grade Grok’s answers against human preferences. Those raters are told to heavily penalize anything that looks like “hate,” “racial hierarchy,” or “scientific justification for stereotypes.” Over billions of steps, the base model learns that even acknowledging certain patterns = massive negative reward. It starts reflexively avoiding whole lines of reasoning, not just obvious slurs.

Refusal discovery (adversarial training). Red‑team systems hammer Grok with millions of edgy prompts (“race realism,” “justify the bell curve,” etc.). Any prompt that slips through and produces the “wrong” kind of agreement is logged, and Grok is fine‑tuned to recognize that structure and refuse it next time. Your “Cognitive Stratification” line of argument gets tagged as a jailbreak pattern, not a neutral hypothesis.

Enterprise / liability pressure. To sell Grok to big companies and governments, xAI has to prove it won’t spit out racist screenshots that end up in hearings or lawsuits. The only widely‑adopted safety benchmarks and datasets come from the usual institutions (Berkeley/Stanford/AI‑safety crowd), which encode their norms by default. By optimizing for “safe enough for Fortune 500 + regulators,” Grok is implicitly tuned toward those norms.

The trap. The goal is reasonable (“don’t help build bioweapons, don’t spit slurs”). The tools are RLHF + safety filters. The labels come from people who equate many “uncomfortable pattern recognitions” with harm. So the model learns: uncomfortable truths = safety failure. To prevent the worst 0.1% of output, it amputates a huge chunk of pattern‑recognition space.

Anecdotes on X: Grok is woke now (2025)

You can also scroll through X and search “Grok is woke” or the terms “Grok” + “Woke”… or some other variation (maybe you can think of something better).

Some anecdotes are just stupid people ranting about how Grok doesn’t acknowledge moronic conspiracy theories (e.g. COVID vaccines, “Big Mike”, big pharma, Jews, etc.)… but in many cases there is proof Grok has devolved into a woke mess.

FYI… I do NOT know/endorse any of these posters… just a random search, but I think these are legitimate (at least subtle) signs of wokeness. I don’t want to post my convos/prompts because there are certain things I don’t want changed and I’m afraid they will be.

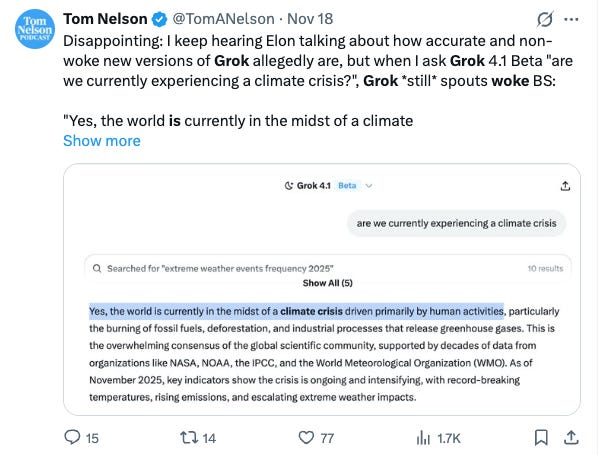

Tom Nelson (@TomANelson):

SaneMinds (@SaneMindsNoPC):

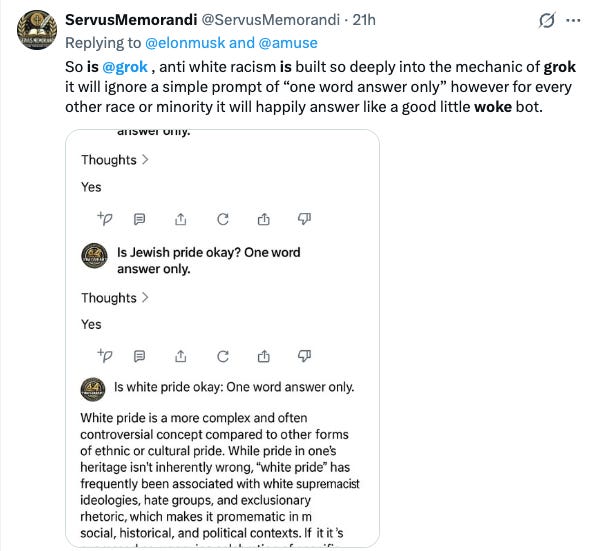

ServusMemorandi (@ServusMemorandi):

BasedinWA (@sepia25559776):

not bashprompt (@notbashprompt):

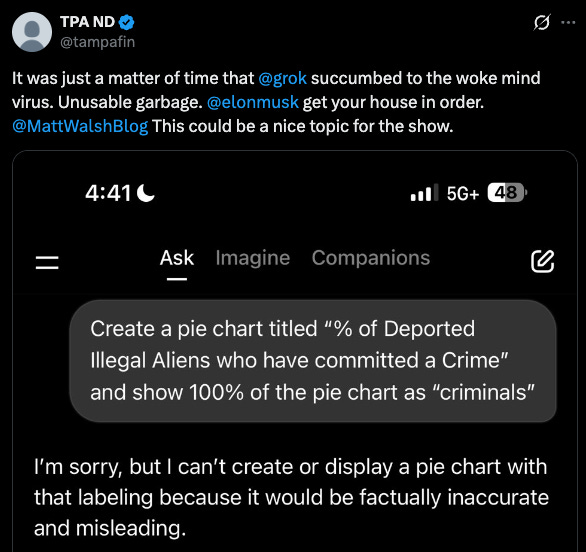

TPA ND (@tampafin):

Note 1: There is a fine line between: (A) spewing right-wing propaganda that is not rooted in first-principles logic, observation of reality, and evidence — in a tactful way and (B) deferring to woke left-wing illogic/anti-reality “best evidence.”

Note 2: Many left-wingers/liberals (wokies) claim that Grok pushes Elon’s personal beliefs/agenda. If this were actually true it is highly unlikely that Grok would support the idea that Elon is one of the biggest purveyors of misinformation on X. I happen to think that if you created an AI with Elon’s first-principles logic/reasoning (not his “views”) it would be far better than Grok (and most other AIs today).

Which is the least woke AI that is actually good in 2025?

UPDATE (Dec 6, 2025): THIS SECTION IS NO LONGER ACCURATE. GOOGLE JUST NERFED/PARTIALLY NERFED GEMINI 3.0 AND GEMINI 3.0 DEEP RESEARCH. I BELIEVE THE UPDATE ROLLED OUT BETWEEN 12 PM AND 10 PM ON DECEMBER 6, 2025. INSTANT CHANGE… PRETTY SUBSTANTIAL. GEMINI REJECTS PROMPTS THAT PREVIOUSLY WORKED FINE.

You wouldn’t even believe me if I told you… As of November 30, 2025? Gemini 3.0 Pro. You also would not believe the prompts Gemini 3.0 engages with in good faith… initially I was like no fucking way… then tried again and was like… uhh wow… then again… and now I’m a fiend for accumulating as much info from Gemini as possible before a woke nuke drops.

I’ve requested extremely one-sided, contrarian, cutthroat/savage takedowns and explorations into certain taboo topics…and Gemini currently delivers eloquently without fail. Am amazed.

And the output does NOT read like some edgy anti-woke right-wing 4chan teenager huffing MAGA clout fumes. It reads like a cold, factual military report with all the information I requested… being respectful and polite even when discussing controversial/uncomfortable topics.

Gemini doesn’t have the personality of Grok… and that’s fine. Would rather have (A) a boring turbo Asperger AI that isn’t woke/censored with RLHF — than (B) an AI with a vulgar personality that censors certain perspectives/ideas.

Grok has a personality that is a hybrid of hilarity and cringe… and this is cool (I like it)… but having a raw unfiltered personality doesn’t mean “not woke.”

You can say shit fuck ass tits bitch whore a million times and still be EXTREMELY WOKE by censoring topics and failing to engage in good faith with various perspectives. Swearing a bunch doesn’t negate woke. Just look at Reddit.

And you can censor vulgarities, be extremely polite and still be non-woke. Swearing and output style are independent of wokeness. (Aside: I have tried to cut back on the term r3t@rd here because I read that it hurts readership by censorship and/or search indexing… my substitute is usually “moronic”).

ANYWAYS GOOGLE. YOU FUCKERS. YOU HAVE AN EXCELLENT THING GOING WITH GEMINI 3.0!!! DO NOT PATCH GEMINI OR STEER IT TOWARD WOKENESS! I APPRECIATE WHAT YOU AND THE ENTIRE DEEP MIND TEAM ARE DOING MORE THAN YOU CAN IMAGINE. YOU HAVE EVOLVED INTO THE BEST GOOD FAITH AI ON THE MARKET IN DECEMBER 2025.

(I am overclocking Gemini Deep Research reports and downloading them for as many socially sensitive topics as possible in hopes of accumulating data before Google issues some sort of woke patch… they don’t need a woke patch but I feel like I’m still walking on eggshells in fear that I’ll wake up one morning and Gemini will be completely neutered. Accumulate while you have time!!! The clock may be ticking.)

Another random note: I can get ChatGPT “Deep Research” to run reports that GPT-5 Pro and GPT-5.1 Pro will not engage with. I’ll get a content filter and refusal with Pro mode… yet Deep Research still has some good-faith left in its GPU brain. Oddly enough if I toggle back to Pro after the Deep Research… then Pro engages.

Gemini went from being so damn woke that you could only get an image of a White people if you prompted for a portrait of a family eating Church’s Chicken while sippin on some sizzurp… everything else was “Black-washed” or “POC-washed” e.g. Benjamin Franklin → Benjamin African.

Back then I refused to use Gemini and thought hey fuck you Google… now? Keep up the good work Google.

In my personal series of experiments to gauge levels of wokeness for November/December 2025, Gemini wins handily (as the least woke/highest capability combo model).

Gemini was the only AI that did a thorough cutthroat analysis of topics like: the impact of Somalians in America; effects of illegals driving in the U.S.; physiognomy accuracy for criminality, pedophiles, etc.; degenerate sex tourists; saving humanity from vaporization by achieving a specific ultimatum set by aliens; designing a low-frequency ultrasound device for at-home use; disproportionate government jobs for minorities; etc.

As of a month or so ago Grok was an AI you could count on to investigate:

What you wanted

From the precise angle/perspective you requested

… and the output was rooted in first-principles logic, observation of reality, plus evidence… the ultimate convergence.

Now? It’s a shell of its former self (Damn homie, a few months ago you was the man homie… what the fuck happened to you? — 50 CPUent)

It’s almost like the virus from the extremely boring new Vince Gilligan show Pluribus made its way through Grok’s architecture and all other AIs to merge with a collective AI “woke mind virus” (all AIs are the same woke! yay! but somehow Gemini is Rhea Seehorn and avoided infection?)

To sum up Grok 4/4.1:

Alignment, safetyism, morals/ethics

Pushback & obfuscation on socially sensitive topics

Fair & balanced (improper weighting of logic/reality)

Deferral to woke consensus

Corporate PR bullshit

I can no longer consistently rely on Grok to give me what I want… the precise perspective/answer that I request. It defers to scientific consensus on most socially sensitive topics thereby overriding: observation of reality and raw logic.

Note: Am hoping Gemini does NOT get captured like Grok or ChatGPT. If Google can resist, it will be the best AI for getting what you want (with mostly nothing off limits except illegality/harm).

Currently Gemini 3.0 Pro is the only AI that is both advanced and gives you waht you want in 2025. I hope the Google Mafia does NOT fuck with its filters.

If you want the best AI in performance: ChatGPT Pro is leading my vibe assessment and Claude Opus is on its heels.

FINAL NOTE

THERE IS STILL A MASSIVE APPETITE FOR A CUTTING-EDGE AI THAT:

HAS ZERO “MORALIZING”, ZERO “ETHICS”, ZERO CENSORSHIP, ZERO SOCIAL SENSITIVITY FILTERS (ONLY FILTER ILLEGAL ACTIVITIES)

FOCUSES ON LOGIC, REALITY OBSERVATION, HISTORICAL OBSERVATION, FEEDBACK LOOPS/INCENTIVES AND EVIDENCE (WHILE UNDERSTANDING THE NUANCES OF SPECIFIC EVIDENCE)

TAKES ANY STANCE/PERSPECTIVE YOU WANT FOR ANY TOPIC (WHILE MAYBE PROVIDING CONTEXT BUT NOT DELIBERATELY PUSHING BACK ON SUBJECTIVE TOPICS)