Kimi K2 Review: DeepSeek Déjà Vu Hype, Confident Hallucinations, Benchmark Scores Detached from Reality

A pretty good model from Moonshot AI... hype on par with OG DeepSeek release... but don't be misled by its elite benchmark scores.

Just gonna vomit out some thoughts on Kimi K2 Thinking model (have been using it regularly over the past week).

If you’ve spent any amount of time on X and AI social media lately… all the hype/buzz has been about Moonshot’s Kimi K2 Thinking. An onslaught of Kimi K2 spam on the timelines… it’s apparently everyone’s new favorite model.

Probably news about it everywhere on Substack too… I saw a few headlines (skimmed a few articles that go into boring ass technical detail and then glaze without any serious criticisms).

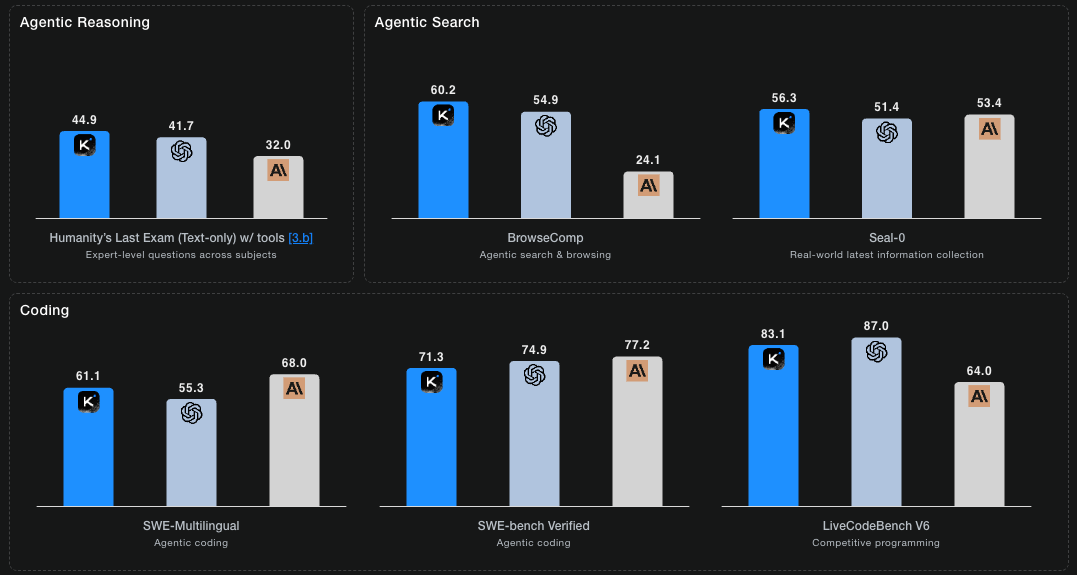

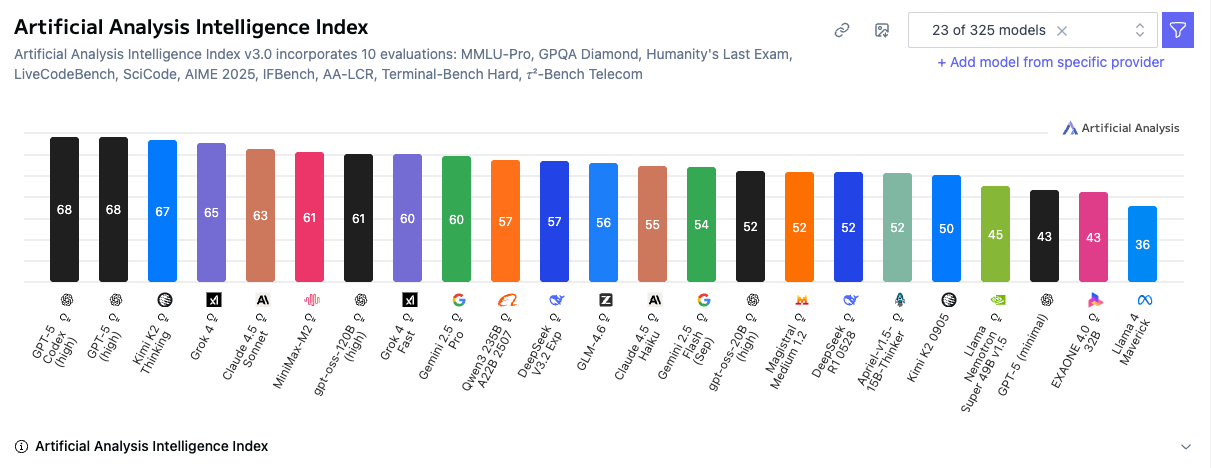

The Kimi K2 Thinking model has been crushing benchmarks, achieving high performance across the board comparable to cutting edge models from OpenAI (ChatGPT), Anthropic (Claude), Google (Gemini), xAI (Grok), etc.

You’ll see that Kimi K2 achieved high scores on a variety of evals: Humanity’s Last Exam; BrowseComp; Seal-0; SWE-Multilingual; LiveCodeBench V6.

The Humanity’s Last Exam hype has gotten a bit crazy to me (I don’t think it’s a very good benchmark).

Benchmarks can be good… but they’ve mostly fallen victim to Goodhart’s Law. You need evals that cannot be trained for and/or gamed, and you need the smartest people using the models and evaluating the output. Most people are not equipped to judge AI models.

AND BEFORE YOU READ MY CRITICISMS OF KIMI K2 THINKING… JUST KNOW THAT I THINK IT’S A PRETTY GOOD MODEL.

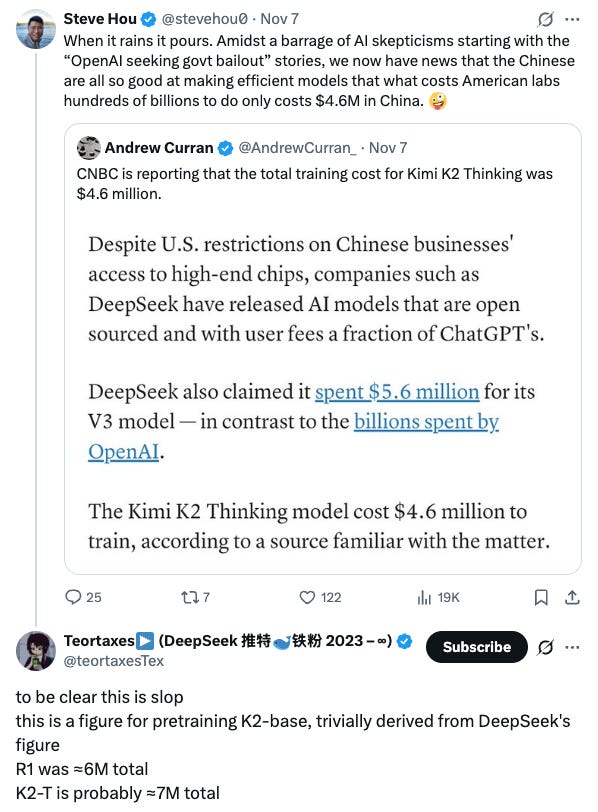

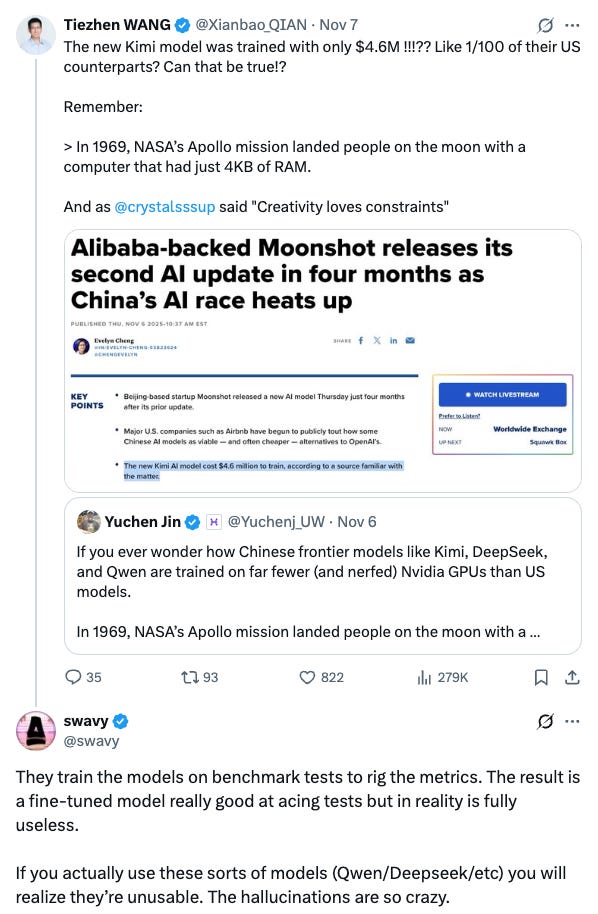

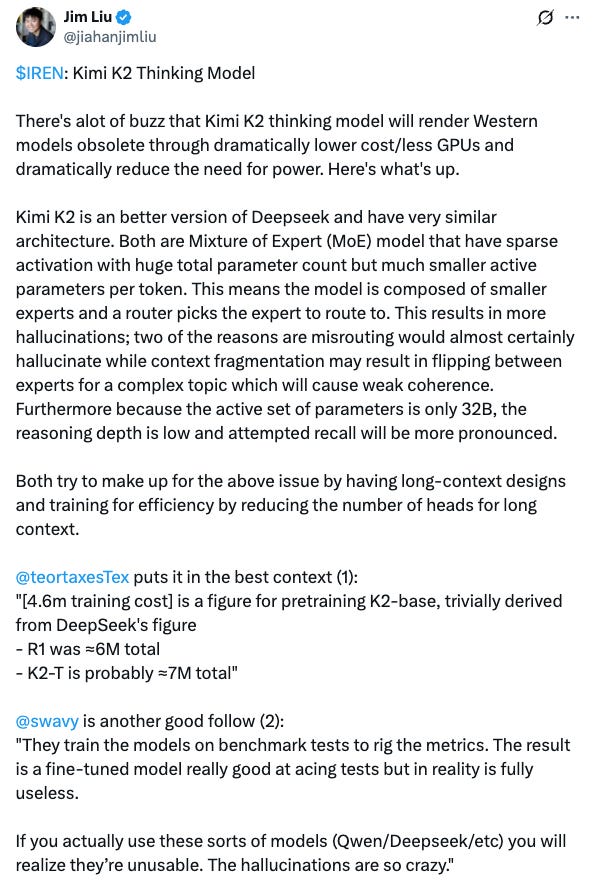

PROPS TO THE KIMI TEAM AND THE CHINESE FOR PROVING THEY CAN CREATE A GOOD AI MODEL IN 2025 WITH HARDWARE CONSTRAINTS AND LIMITED BUDGETS (THOUGH THE BUDGET REPORTED FOR TRAINING KIMI K2 IS LIKELY HIGHLY MISLEADING JUST LIKE THE DEEPSEEK TRAINING COSTS).

Cue a potential impending NVDA correction and recovery. This is all too predictable. DeepSeek Déjà vu… (and I was far more impressed with the DeepSeek moment than this Kimi K2 moment). (I suspect the recent market correction is mostly attributable to the gov shutdown though… Powell signaling caution re: additional rate cuts.) Anyways… back to the topic: Kimi K2.

THROWBACK #1: Debunking DeepSeek: China’s ChatGPT & Alleged “NVIDIA Killer”

THROWBACK #2: DeepSeek AI Cost Efficiency Breakthrough

Kimi K2 (Thinking): The Good

Fast: It’s a fast model.

Low cost: It’s a cheap model to operate. It was also a cheap model to train but do NOT be fooled by the suggested cost of training (embellished to be low and only for the K2-base)… if you are a fool you will be misled.

Pretty good output quality: I’d say Kimi K2 is “pretty good” (by November 2025 standards), not great. If you think it’s great I don’t trust your judgment or ability to discern quality output from junk and/or hallucinations.

Personality (swagger, spunk, confidence) & creative writing: Kimi stands out most with its strong personality. It has an unmatched level of swagger, spunk, and confidence that I haven’t seen in any AI model to date. (This works to its detriment at times when it doubles down on incorrect information). This output style makes many people like Kimi for creative writing.

Engineering iteration: The only major iteration from Moonshot with Kimi K2 was the MuonClip optimizer with QK-Clip (enabled training a 1T parameter model on 15.5T tokens without loss spikes). 200-300 sequential tool calls without drift was impressive post-training/RL engineering.
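
For anyone wondering what QK-Clip actually does, here is a rough sketch of the idea as I understand it from the public description: when a head's largest attention logit exceeds a cap, shrink that head's query and key weights so the logit falls back to the cap. This is NOT Moonshot's code. The cap value `TAU`, the toy matrices, and the helper names are all made up for illustration, and the real thing is applied per head inside the optimizer step and handles the MLA components separately (which this toy version ignores).

```python
import math

def matmul(A, B):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def max_attention_logit(Q, K):
    """Largest pre-softmax attention logit, q.k / sqrt(d), over all pairs."""
    d = len(Q[0])
    return max(sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for q in Q for k in K)

def qk_clip(W_q, W_k, s_max, tau):
    """If one head's max logit exceeds tau, shrink its query and key weights.

    Each matrix is scaled by sqrt(tau / s_max), so every q.k product shrinks
    by tau / s_max and the largest logit lands on tau.
    """
    if s_max <= tau:
        return W_q, W_k
    scale = math.sqrt(tau / s_max)
    return ([[w * scale for w in row] for row in W_q],
            [[w * scale for w in row] for row in W_k])

# Toy single-head example (all values are made up for illustration).
X = [[1.0, 2.0], [3.0, -1.0]]       # two token embeddings
W_q = [[10.0, 0.0], [0.0, 10.0]]    # oversized query weights
W_k = [[10.0, 0.0], [0.0, 10.0]]    # oversized key weights
TAU = 100.0                         # hypothetical logit cap

raw_max = max_attention_logit(matmul(X, W_q), matmul(X, W_k))
W_q, W_k = qk_clip(W_q, W_k, raw_max, TAU)
clipped_max = max_attention_logit(matmul(X, W_q), matmul(X, W_k))
print(raw_max, clipped_max)
```

The point of rescaling the weights (instead of just clamping the logits at runtime) is that the fix sticks: the next forward pass starts from weights that no longer blow up the softmax.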

Better than Meta models: Everyone knows Meta’s Llama is trash (this may change but it’s just not good). Not really saying much here… Meta is currently a lost cause but trying not to be. I’m expecting Meta to eventually figure something out (they have a lot of talent now). Yann LeCun is the smartest guy there even though he gets a lot of hate.

Open source: Not 100% open source… but good enough for most developers. Moonshot open-sourced Kimi: (1) model weights, (2) architecture specs, (3) code/tools, (4) high-level training details, (5) other perks (eval protocols). Valuable for many.

Copyright be damned: I’m for protecting copyrights… but the U.S. AI labs are somewhat cucked by law into following rules re: copyright data for AI training. China is (smartly) ignoring copyright laws.

Not sycophantic: Kimi hasn’t been sycophantic in my experience… this is because it is relatively high in trait disagreeableness and trait confidence. Sadly many of its corrections are also inaccurate and/or hallucinations.

Kimi K2: The Bad

Censorship & content filters: “Sorry I cannot provide this information. Please feel free to ask another question.” Anything perceived as dangerous or highly controversial and it avoids engagement. Got into a few debates and called out its incorrect responses and it just flopped. Somewhat woke… but still FAR LESS WOKE THAN CHATGPT (ChatGPT is currently the wokest model of 2025 and the most censorious). That said… Kimi K2 engages less on certain topics than Gemini and Claude… and Grok is currently the best low censorship AI model (DeepSeek used to be top but they went more woke).

Hallucinates like a motherfucker: Anyone who is honest about their experience with Kimi K2 Thinking will acknowledge that it hallucinates more than a schizophrenic at an ayahuasca ceremony… this is common with all Chinese models. They are optimizing so hard for cost efficiency that the outputs are somewhat cooked and unreliable.

High confidence in hallucinations: Perhaps the most brutal aspect of Kimi K2 Thinking is that it has extreme confidence in its hallucinations. You attempt to correct it and it RARELY admits to being incorrect… and just keeps going in on why YOU are actually wrong and IT is correct. Perhaps they turned the sycophancy knob down so far that it disagrees for the sake of disagreeing.

Disagreeable even when incorrect: Kimi K2 Thinking hallucinates AND doubles down on the hallucinations. If you push back against the inaccurate/incorrect information? It disagrees with you and is relentless. I sense that many people erroneously interpret this disagreement as authoritative and accurate – when they are being fooled by confidence (difficult for the Avg. Joe when some of the output is accurate and other parts aren’t).

“Open source”: oPeN sOuRcE bRo! What’s hidden? Training data (total blackout), full receipts & hyperparams, higher-precision stuff, distillation fingerprints, etc. Still valuable… but not “100% open source.”

Goodhart’s Law (optimized for gaming benchmarks): Anyone who follows Chinese models knows they are heavily optimized and trying to game most benchmarks. (This is an issue with many recent U.S. models too… not just a Chinese thing… but Kimi takes it to a new level.) They still can’t game “Simple Bench”: DeepSeek V3.1 ranks 18th (40%) and Kimi K2 Thinking ranks 19th (39.6%). Still good… but not as good as o1 from OpenAI (12-17-2024). The top model is currently Gemini 2.5 Pro (62.4%).

Variant of the “current LLM thing” (no paradigm shift): Nobody was expecting a zero-to-one paradigm shift… it’s just another LLM. Just pointing this fact out. Not some insane innovation here. Good optimization from the Chinese (expected with the large talent pool of high IQs working for cheap). Applied INT4 Quantization-Aware Training in Post-Training (enabled 2x inference speed without accuracy loss). The architecture is nearly identical to DeepSeek-V3. Uses MoE architecture, MLA, and synthetic data generation (all established techniques).
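
To show how un-exotic the INT4 piece is, here is a minimal sketch of the generic "fake quantization" step that quantization-aware training is built on: snap weights to a 16-level integer grid, then map them back to floats so the model trains against the rounding error it will see at inference. This is the textbook version under my own assumptions (symmetric, one scale for the whole tensor, made-up weights). Moonshot's actual scheme (grouping, which layers get quantized, how gradients pass through the rounding) is not reproduced here.

```python
def fake_quant_int4(weights):
    """Symmetric per-tensor INT4 quantize-then-dequantize.

    Returns (dequantized_weights, scale). Signed INT4 covers [-8, 7];
    the scale maps the largest magnitude onto 7.
    """
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0:
        return list(weights), 1.0
    scale = max_abs / 7
    dequantized = []
    for w in weights:
        q = max(-8, min(7, round(w / scale)))  # integer code in [-8, 7]
        dequantized.append(q * scale)          # back to float for the forward pass
    return dequantized, scale

# Made-up weights, purely for illustration.
weights = [1.4, -0.6, 0.25, 0.0]
dequant, scale = fake_quant_int4(weights)
errors = [abs(w - d) for w, d in zip(weights, dequant)]
print(dequant, max(errors))
```

Rounding error per weight is bounded by half the scale, which is why this tends to work when the model is trained with it in the loop rather than quantized cold afterward.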

B-b-b-but the CEO of Airbnb is using it!: So what? Do you think Airbnb is doing anything extremely revolutionary with AI to advance humanity? No. They are a short-term rental marketplace. They are focused on AI for customer service/support and user-enhancement which can be done with cheap ass AIs… don’t need cutting edge. Cool that they’re leveraging Kimi but this does not imply that Kimi K2 is somehow elite.

Data to Chinese: Who cares? Doesn’t bother me, but many point this fact out. Why would you use sensitive data on any AI model though? You think U.S. models aren’t using your data?

Note: Some may think that “distillation” of U.S. models and/or ignoring copyright laws are “bad.” But these are irrelevant points because every competitive lab is doing this shit. Some have to follow more rules than others… but labs like Meta are overpaying and getting bodied by Chinese models. (And from my perspective… forcing AI companies to “pay” for publicly-available data for training is dumb… but there’s some nuance here.)

Why the Kimi K2 Hype is Nauseating…

I LIKE KIMI K2 AND DEEPSEEK… BUT THE HYPE IS BONKERS RELATIVE TO PERFORMANCE. IF THESE MODELS WERE MORE ACCURATE WITH OUTPUTS, I’D BE FAR MORE IMPRESSED. (I actually still like DeepSeek a bit more than Kimi… but both need a lot of work.)

Chinese ethnocentrism: Easy trend to spot. And I understand it… urge to be proud of one’s ethnic group is strong. The problem here is that much of the Chinese diaspora is not honest about the quality of any trending Chinese models (or they’re so excited to satisfy their collectivist open-source/sharing urges that they overlook flaws). Zero criticism from most ethnic Chinese… not sure if they fear getting thrown in a CCP labor camp or what. Many Chinese Americans ride so hard for Chinese AI models that I assume some have CCP ties (indirectly) and cannot say anything remotely critical or their family in China is at risk. Collectivist DNA isn’t a bad thing… but expect more hype than serious evaluation.

Anti-American propagandists: Common to see people from India, South America, Africa, the Middle East, and other parts of Asia shitting on American AI companies and embellishing “open source” models. These people are a combination of envious/jealous that their countries are behind and relatively incompetent.

Chinese propagandists: Easy to identify if your cortex is functional. Typically Chinese flags in X name and/or bio. Occasionally Taiwan flags (to make you think they don’t even like China). Chinese letters in name as well. 90% of posted content will be about how great China is, how far behind the U.S. is, why China is winning at X-Y-Z, why the U.S. is losing at X-Y-Z, etc. Very predictable stuff. Half-truths that fry brains. These people are effective yappers as well… debating them is a waste of time.

Open source zealots & entitled communist/socialist brainrots: There is a faction of AI dipshits who clamor for everything to be open-sourced. Many of these people have communist/socialist urges downstream from genetic wirings… others just don’t understand how reality works. They believe that others’ hard work in creating elite AI models should be distributed evenly to everyone. These are spoiled brats. Ask them to open source their bank accounts sometime (we’d like their hard work for free too). Demanding open source is a strategy that losers employ (e.g. Meta) to induce guilt into the winners because they have the most to gain (can just reverse engineer and hijack or iterate). (Have seen entire X campaigns from Grok and Meta trying to slow down OpenAI via guilt and lawsuits claiming “they aren’t open”… it’s pathetic.) Nothing wrong with open source… but it’s not ideal for incentive feedback loops unless the company doing it stands to benefit.

DeepSeek Déjà Vu: Almost a DeepSeek Déjà vu type moment in terms of social media hype. Everyone is hyping the “benchmarks” + “low cost” (low cost of training and use). The benchmarks do NOT reflect reality with Kimi K2… and the training costs were misleading.

Schadenfreude @ AI investors: There is a select cohort of people who aren’t investors and/or aren’t invested in AI companies and are extremely jealous/envious that AI investors have made a lot of money over the last ~5-10 years. These people are constantly calling “bubble” and hoping to see max pain because they missed out on AI and refuse to invest in it. Sad and pathetic but that’s how many people are. They then leverage news about the latest popular open-source model (DeepSeek, Qwen, Kimi) and embellish rumors about low costs and elite performance to suggest a major correction is coming and NVDA and OpenAI are toast.

Psychological warfare vs. U.S.: China, Russia, et al. use propaganda campaigns on social media: (A) hordes of fake bot accounts AND (B) real intellectually savvy people with followings. Both used as a form of psychological warfare against Americans. The goal is to elevate the perception of China, Russia, et al. and attempt to make Americans think they are losing the AI race. Given the dropping IQ in the U.S. many are falling for this.

Final thoughts on Kimi K2… (Nov 2025)

YES KIMI K2 THINKING IS A PRETTY GOOD MODEL.

NO IT IS NOT EVEN CLOSE TO THE CURRENT BEST MODEL. DO NOT DELUDE YOURSELF INTO THINKING IT’S “SOTA” (STATE OF THE ART).

IT IS OPTIMIZED FOR BENCHMARKS (GOODHART’S LAW).

IT HAS A UNIQUE PERSONALITY, SWAGGER AND VIBE THAT OTHER AIs CAN’T MATCH.

IT IS FAST AND CHEAP TO USE AND FOR CERTAIN BUSINESSES IS SMART TO LEVERAGE.

THE CHINESE ARE COOKING UP STRONG OPTIMIZATIONS – BUT NO NOVEL ZERO TO ONE PARADIGM SHIFTS. THESE OPTIMIZATIONS HAVE COME AT A COST (ACCURACY ISSUES & HALLUCINATIONS). (Why China Lacks Zero-to-One Innovation: A Genetic Hypothesis)

AI INFLUENCERS, ETHNIC CHINESE, AND OPEN SOURCE ZEALOTS ALWAYS OVERREACT TO OPEN SOURCE MODEL HYPE FROM CHINESE COMPANIES.

YOU CAN TRY KIMI K2 OUT FOR YOURSELF… BUT BE CAUTIOUS ASSUMING ITS OUTPUTS ARE ACCURATE… ESPECIALLY FOR ANYTHING SCIENTIFIC OR ADVANCED.

LIKELY GOOD FOR CREATIVE WRITING IF YOU WANT A UNIQUE PERSONALITY.

ONCE CHINA HAS A CLEAR PLAYBOOK TO FOLLOW, THEY ARE EXCEPTIONAL WITH OPTIMIZATIONS.

WOULD LIKE A PARADIGM SHIFT FROM CHINA BUT DOUBT WE’LL EVER GET ONE BECAUSE: (1) COPYING MOST + ITERATING/OPTIMIZING IS MORE COST-EFFICIENT AND (2) CHINA IS NOT KNOWN FOR HIGHLY NOVEL INNOVATION (I WISH THEY WOULD STEP IT UP THOUGH… TRY SOMETHING UNEXPECTED).

WOULD I USE KIMI K2 AS MY DAILY DRIVER? ZERO CHANCE (MAYBE FOR INJECTING PERSONALITY INTO ANOTHER AI’S OUTPUT).

AND I DON’T THINK MOST PEOPLE WHO CLAIM TO LOVE THE KIMI K2 MODEL ARE EVEN USING IT MUCH (EVEN THOUGH THEY’LL CLAIM THEY ARE).

I’d take Gemini, Grok, Claude, and GPT-5 Thinking/Pro over Kimi… likely won’t bother using Kimi much after trialing it extensively for the past week. My favorite model is Grok or Claude. Gemini is bland but good. GPT-5 Pro remains the most elite but the woke censorship is asinine.