Risky Words in 10K Filings Predict Stock Returns: Machine Learning Study (2024)

A new study that analyzes annual 10-K reports for “risky words” finds a machine learning strategy that generated 22% alpha

A new study “Risky Words and Returns” (Dec 2024) by Sina Seyfi used machine learning to identify whether the prevalence of specific terms/words within annual SEC 10-K filings could reliably predict stock returns.

The results suggest that a long-short portfolio built on risky words within 10-K filings generated 22% annual alpha relative to a CAPM (Capital Asset Pricing Model) benchmark, and about 13% annual alpha when microcaps are excluded.

This probably outperforms QQQ by a bit if you’re willing to trade microcaps, but its performance is likely similar to QQQ (with a lot more effort) if you exclude them.

For most people this strategy isn’t worth the time and effort, but someone capable and motivated with ML experience might be able to optimize it to generate higher alpha than the version Seyfi used.

Which Words Were Most “Risky” in 10-K Filings?

High-Frequency, Consistently Predictive Words

The single most repeated “risky word” (i.e., appearing with a nonzero LASSO coefficient most often) is “oil”.

Others frequently appearing with nonzero coefficients include “clinical,” “products,” “gas,” “loans,” “company,” “mortgage,” “fuel,” “energy,” “manufacturing,” “software,” “healthcare,” “drilling,” “properties,” “clients,” and so on.

Interpretation of Positive vs. Negative Coefficients

Many energy-related terms (“oil,” “gas,” “fuel,” “coal,” etc.) typically carry positive average coefficients, indicating that firms discussing these exposures tended—over many time periods—to earn higher subsequent returns (a “risk premium” on energy).

By contrast, “loan,” “loans,” “mortgage,” “bank,” and some references to “business in China” often have negative average coefficients, implying worse subsequent returns for firms emphasizing these risks in their disclosures.

The “Core” Risky Words

These were the most frequently chosen (i.e., nonzero in LASSO regressions) single words, and some bigrams, over time.

Seyfi calls these “main risky words,” as they appeared at least 60 times with a nonzero coefficient in cross-sectional analyses.

The core risky words revolve around energy terms (oil, gas, fuel), loan/bank/credit references, and specialized topics like “clinical trials,” “drilling,” “manufacturing.”

Positive correlation words frequently come from energy, drug, manufacturing, software, etc.

Negative correlation words revolve around bank, loan, mortgage, business in foreign contexts, and so on.

Words are grouped into 14 thematic clusters via word-embedding similarity. The largest negative cluster is typically “loan,” while “energy” and “drilling” are strongly positive.

Seyfi’s study notes that “risky words” are not just about negative connotation; rather, they capture exposure to systematic risks that, when signaled by the text, might raise or lower a firm’s expected returns depending on how the market prices those risks.

Below is an alphabetical listing, grouped by whether their average coefficient was positive or negative.

1A. Positive Average Coefficients

clinical

coal

company

drilling

drug

energy

fuel

gas

healthcare

manufacturing

oil

products

properties

software

steel

(For these words, higher usage in the 10-K’s Risk Factors section tends to correlate with higher future returns. For example, “oil” has a mostly positive coefficient, although it turns negative in certain sub-periods.)

1B. Negative Average Coefficients

bank

business

china

clients

credit

loan

loans

merger (in some references, “merger” is negative on average)

mortgage

portfolio (in the sense of “loan portfolio”)

reporting (often used in disclaimers, e.g., “small reporting company”)

risk (the generic mention; it can appear in positive contexts, but its average coefficient is negative)

securities

shareholders

stockholders

(These negative-coefficient words often appear in contexts of credit risk, banking, or business-model vulnerabilities that historically preceded lower returns.)

Note: The precise set of most risky words can differ somewhat depending on the sample window and exact filtering, but this list captures the central repeated words from the paper’s tables.

14 Themes & Representative Words

In the paper, Seyfi uses word2vec embeddings and agglomerative clustering (with cosine distance) to group these top “risky words” (plus roughly 1,000 additional “less core” words) into 14 themes.

Below is the short label for each cluster along with a sampling of the core words.

Applicable

Words like “applicable,” “required,” “changes,” “affect,” “qualify.”

Average Coefficient: Mixed/Neutral.

Business

Words like “china,” “business,” “operations,” “foreign,” “markets.”

Average Coefficient: More negative overall.

Clients

Words like “clients,” “customers,” “patients,” “distributors.”

Average Coefficient: Slightly negative overall.

Company

Words like “company,” “stock,” “shares,” “corporation.”

Average Coefficient: Positive overall.

Drug

Words like “drug,” “clinical,” “trials,” “FDA,” “product candidates.”

Average Coefficient: Positive overall.

Drilling

Words like “drilling,” “exploration,” “research,” “offshore.”

Average Coefficient: Strongly positive overall.

Energy

Words like “oil,” “gas,” “fuel,” “coal,” “power.”

Average Coefficient: Positive overall.

Healthcare

Words like “healthcare,” “care,” “medical,” “hospitals,” “medicare.”

Average Coefficient: Slightly positive.

Income

Words like “income,” “trust,” “million,” “reit,” “tax.”

Average Coefficient: Mildly positive on average.

Insurance

Words like “insurance,” “reimbursement,” “claims,” “public.”

Average Coefficient: Mixed; can be slightly positive or neutral.

Loan

Words like “loan,” “loans,” “mortgage,” “credit,” “bank.”

Average Coefficient: Negative overall.

Manufacturing

Words like “manufacturing,” “production,” “demand,” “test,” “capacity.”

Average Coefficient: Positive overall.

Properties

Words like “properties,” “stores,” “facility,” “inventory,” “lease.”

Average Coefficient: Positive overall.

Software

Words like “software,” “solutions,” “internet,” “wireless,” “services.”

Average Coefficient: Positive overall.

(Some words have multiple contexts, but the clustering assigns each word to its closest semantic group.)

Positive & Negative Correlations by Clusters

Below is a broad-strokes summary of which clusters, on average, mapped to positive vs. negative predictive signals:

Positive Clusters

Energy (oil, gas, fuel, coal)

Drilling (exploration, offshore, etc.)

Drug (clinical, product candidates, FDA)

Manufacturing (production, capacity)

Company (stock, shares, corporation)

Properties (stores, facility, brand)

Software (software, internet, wireless)

Healthcare (healthcare, hospital, care)

Income (trust, reit, tax)

Negative Clusters

Loan (loan, loans, mortgage, bank, credit)

Business (china, foreign, markets)

Clients (clients, patient, distributor)

Mixed / Neutral

Applicable (words like “applicable,” “requirements,” “affect,” “qualify,” which sometimes tilt positive or negative depending on context)

Insurance (insurance, claims, reimbursement—often near-neutral or mildly positive in some windows)

Time-Based Insights & Trend Changes

The total number of risky words appears to have increased significantly since 2000 (just under 700 in 2023 vs. about 200 in 2000), and the split between negative and positive coefficients has shifted.

1. Significant Time Variation in Coefficients

The coefficients on words like “oil,” “gas,” “clinical,” etc. are highly cyclical.

For instance:

“Oil” risk carried positive premiums in many periods but turned negative during the 2014–2016 oil-price crash—indicating a regime change in how the market priced “exposure to oil.”

“Loan” risk was especially penalized around the 2007–2009 global financial crisis period.

2. Shifting Importance Over Sub-Periods

Clusters such as energy, loan, or drug fluctuate in how much they contribute to explained cross-sectional variance. For example, from 2015 to about 2020, mentions of healthcare/drug sometimes had an outsized effect, whereas from 2005 to 2010, mortgage/loan risk was paramount.

The study’s variance-decomposition plots show that certain “risky word clusters” dominate in one era (e.g., loan in 2008–2009, energy in 2007–2008 and 2014–2016), then give way to others in later years.
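The paper’s exact variance-decomposition formula isn’t reproduced in this summary. As an assumption, the sketch below uses one standard covariance split: write each firm’s predicted return as a sum of cluster components, then attribute the cross-sectional variance of the total to cluster k via Cov(component_k, total) / Var(total). The shares sum to one and can be negative when a cluster offsets the others. The function and argument names are hypothetical.

```python
import numpy as np


def cluster_variance_shares(words, coefs, cluster_of):
    """Share of the cross-sectional variance of predicted returns attributed
    to each word cluster, using Var(sum_k p_k) = sum_k Cov(p_k, sum_k p_k)."""
    words = np.asarray(words, dtype=float)   # firms x words (TF-IDF weights)
    coefs = np.asarray(coefs, dtype=float)   # one coefficient per word
    cluster_of = np.asarray(cluster_of)      # cluster label per word
    total = words @ coefs                    # predicted return per firm
    total_c = total - total.mean()
    shares = {}
    for k in np.unique(cluster_of):
        mask = cluster_of == k
        part = words[:, mask] @ coefs[mask]  # part of the prediction from cluster k
        shares[str(k)] = float(np.dot(part - part.mean(), total_c) / np.dot(total_c, total_c))
    return shares


# Toy cross-section: 500 firms, 6 words in 3 made-up clusters.
rng = np.random.default_rng(0)
X = rng.random((500, 6))
b = np.array([0.02, 0.01, -0.03, 0.0, 0.005, -0.01])
labels = ["energy", "energy", "loan", "loan", "drug", "drug"]
shares = cluster_variance_shares(X, b, labels)
print(shares)
```

Running this month by month gives a time series of cluster shares, which is the kind of picture the variance-decomposition plots describe.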

3. Increasing Aggregate “Risk Words” in Recent Years

On average, the length of Risk Factors sections has grown dramatically since 2005, but the number of truly predictive “risky words” (those that appear with a nonzero coefficient) correlates more strongly with macroeconomic uncertainty (such as the VIX and the EPU index) than with year-by-year growth in disclosure length. In high-uncertainty periods, more words receive nonzero coefficients.

Sector-Specific Insights?

1. No Single Industry Dominates

One might guess only Energy firms talk about “oil” or Healthcare firms talk about “clinical,” but the text reveals that:

All industries discuss broad sets of risks, often well beyond their “primary” sector classification.

For example, only about 25% of all “oil” or “energy” references come from Energy-classified firms; the other 75% come from Industrials, Manufacturing, Transportation, etc.

2. Industry Exposure vs. Risk Words

While each sector tilts its Risk Factors to certain themes (e.g., Healthcare mentions “clinical” more; Banks mention “loans” more), the out-of-sample predictive strategy remains strong within industries, too.

In separate tests, the author constructs “industry-adjusted” returns (subtracting average industry returns each month) and still obtains significant alphas from the risky-word strategy.
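A minimal sketch of an industry adjustment, assuming a firm-month table with hypothetical columns `month`, `industry`, and `ret`, and assuming an equal-weighted industry average (the paper’s exact industry classification and weighting aren’t stated here):

```python
import pandas as pd


def industry_adjust(panel):
    """Return minus the equal-weighted mean return of the firm's industry that month."""
    industry_mean = panel.groupby(["month", "industry"])["ret"].transform("mean")
    return panel["ret"] - industry_mean


panel = pd.DataFrame({
    "month":    ["2020-01"] * 4 + ["2020-02"] * 4,
    "industry": ["energy", "energy", "banks", "banks"] * 2,
    "ret":      [0.05, 0.01, -0.02, 0.00, 0.02, 0.04, 0.01, 0.03],
})
panel["ret_adj"] = industry_adjust(panel)
print(panel)
```

Because every industry-month now averages to zero, any alpha that survives on `ret_adj` cannot come from simply overweighting industries that happened to do well.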

3. More Widespread than “Simple Industry Proxies”

Because many companies from diverse industries mention “oil,” “commodity,” “gas,” or “manufacturing” in their risk disclosures, the predictive signals cross typical sector boundaries. The synergy of many risk themes (rather than a single sector dimension) drives the large alpha.

Study: Risky Words & Returns (2024)

“Risky Words and Returns” combines high-dimensional textual analysis, machine-learning regressions, and asset-pricing insights.

It shows that firm-level risk disclosures—after proper filtering and shrinkage—yield strong predictive signals for future cross-sectional returns.

These “risky words” appear to capture a wide array of time-varying risk exposures and systematically outperform conventional factor models.

Despite some practical limitations (e.g., transaction costs, real-time implementation, exact causal interpretation), the study highlights the potential of big-data text analytics for asset pricing research and risk management.

Aims:

Identify & Quantify Dynamic Risk Exposures: The paper’s main goal is to discover the time-varying, high-dimensional sources of systematic risk that drive cross-sectional expected stock returns. Rather than assuming a small set of canonical risk factors, it starts from an expansive set of potential “risk exposures” directly disclosed by firms in the Risk Factors (Item 1A) sections of their 10-K filings.

Link Textual “Risk Factors” to Expected Returns: The paper hypothesizes that if firms’ expected returns compensate them for bearing certain systematic risks, then the text of these Risk Factors—which details the risks each firm perceives—should have predictive power. The core research question: Which disclosed risks (and to what extent) actually predict stock returns?

Provide a Framework for Parsing Risk-Disclosure Text: Rather than subjectively labeling risk topics, the author proposes a machine-learning-driven approach—first to pick out “risky words” that predict returns, and then to cluster them into interpretable “themes” (e.g., energy, loan, healthcare) using word embeddings.

Methods:

A. Text Extraction and TF-IDF Vectorization

Data Source

The author collects the text of 10-K filings (and variants) from the public repository provided by Loughran & McDonald.

Focuses on “Item 1A—Risk Factors,” isolated by regex/patterns (e.g., from “Item 1A” to “Item 1B” or “Item 2”).
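A rough sketch of the section-isolation step with Python’s `re` module, assuming plain-text filings whose headings read like “Item 1A. Risk Factors” and “Item 1B” or “Item 2”. The pattern and the longest-match heuristic (to skip the table-of-contents entry) are illustrative assumptions, not the paper’s actual regex; real filings need more edge-case handling.

```python
import re
from typing import Optional

# From an "Item 1A ... Risk Factors" heading up to (not including) the next
# "Item 1B" or "Item 2" heading. Case-insensitive; "." also matches newlines.
ITEM_1A = re.compile(
    r"item\s*1a\.?\s*[:\-]?\s*risk\s+factors(?P<body>.*?)(?=item\s*1b|item\s*2\b)",
    re.IGNORECASE | re.DOTALL,
)


def extract_risk_factors(filing_text: str) -> Optional[str]:
    """Return the longest Item 1A body found, or None if there is no match.

    Taking the longest match is a heuristic for skipping the short
    table-of-contents entry that usually precedes the real section.
    """
    bodies = [m.group("body").strip() for m in ITEM_1A.finditer(filing_text)]
    return max(bodies, key=len) if bodies else None


sample = (
    "Table of Contents Item 1A. Risk Factors 12 Item 1B. Unresolved Staff Comments 20 "
    "Item 1A. Risk Factors Our business depends on oil and gas prices. "
    "Rising interest rates could hurt our loan portfolio. "
    "Item 1B. Unresolved Staff Comments None. Item 2. Properties"
)
body = extract_risk_factors(sample)
print(body)
```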

Cleaning & Filtering

Removes stop words and non-alphabetic characters.

Constructs both unigrams (single words) and bigrams (two-word sequences).

Prunes extremely rare or extremely frequent terms (appearing in <5% or >95% of documents).

TF-IDF Representation

Translates each firm’s Risk Factors section into a high-dimensional TF-IDF vector: one dimension per (filtered) unigram/bigram.

Normalizes each firm-level vector so longer disclosures do not automatically dominate.
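The cleaning and TF-IDF steps map fairly directly onto scikit-learn’s `TfidfVectorizer`. This is a sketch under assumptions: scikit-learn’s built-in English stop-word list stands in for whatever list the paper used, and the toy corpus is made up. Note that scikit-learn removes stop words before forming bigrams, so “oil and gas” yields the bigram “oil gas”.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer(
    lowercase=True,
    stop_words="english",                   # scikit-learn's built-in English list
    token_pattern=r"(?u)\b[a-zA-Z]{2,}\b",  # alphabetic tokens only (no digits)
    ngram_range=(1, 2),                     # unigrams and bigrams
    min_df=0.05,                            # drop terms in fewer than 5% of documents
    max_df=0.95,                            # drop terms in more than 95% of documents
    norm="l2",                              # scale each firm's vector to unit length
)

docs = [  # toy Risk Factors sections, one per firm
    "Volatile oil and gas prices may reduce drilling activity.",
    "Our loan portfolio is exposed to mortgage credit risk.",
    "Clinical trials for our drug candidates may fail.",
    "Oil price declines could hurt demand for our manufacturing products.",
]
X = vectorizer.fit_transform(docs)          # sparse matrix: firms x terms
print(X.shape, sorted(vectorizer.vocabulary_)[:5])
```

With only four toy documents the 5%/95% thresholds barely bite; on a corpus of tens of thousands of filings they prune the vocabulary heavily.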

B. LASSO Regressions to Identify “Risky Words”

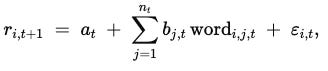

1. Cross-Sectional Setup

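In each month t, runs a cross-sectional regression of one-month-ahead returns on the word weights, $r_{i,t+1} = a_t + \sum_{j=1}^{n_t} b_{j,t}\,\mathrm{word}_{i,j,t} + \varepsilon_{i,t+1}$, where $\mathrm{word}_{i,j,t}$ is the TF-IDF weight of word j in firm i’s most recent disclosure.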

2. High-Dimensional Sparsity

Because the number of potential words $n_t$ often exceeds the number of stocks, the model uses LASSO (Least Absolute Shrinkage and Selection Operator). LASSO imposes an ℓ1-penalty on the coefficients, pushing many $b_{j,t}$ to zero and leaving only a small subset of “risky words” with nonzero coefficients.

3. Cross-Validation

Implements 5-fold cross-validation in each monthly cross-section to choose the regularization parameter λ. This helps prevent overfitting by favoring words whose predictive power holds up on held-out stocks; the genuinely out-of-sample test is the portfolio strategy that follows.
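One monthly cross-section of this step, sketched with scikit-learn’s `LassoCV` (which calls the penalty λ `alpha`). The synthetic data and the helper name `risky_words_for_month` are hypothetical; in the real setup, `X_t` would be the TF-IDF matrix for month t and `r_next` the one-month-ahead returns.

```python
import numpy as np
from sklearn.linear_model import LassoCV


def risky_words_for_month(X_t, r_next, vocab):
    """Cross-sectional LASSO of next-month returns on TF-IDF weights for one month.

    The penalty (lambda in the paper, `alpha` in scikit-learn) is chosen by
    5-fold cross-validation. Returns the words with nonzero coefficients and
    the chosen penalty.
    """
    model = LassoCV(cv=5).fit(X_t, r_next)
    nonzero = np.flatnonzero(model.coef_)
    return {vocab[j]: float(model.coef_[j]) for j in nonzero}, float(model.alpha_)


# Synthetic month: 300 firms, 40 candidate words, two of which truly matter.
rng = np.random.default_rng(42)
X = rng.random((300, 40))
true_b = np.zeros(40)
true_b[0], true_b[1] = 0.05, -0.05
r = X @ true_b + rng.normal(0.0, 0.005, 300)
vocab = [f"word{j}" for j in range(40)]

selected, lam = risky_words_for_month(X, r, vocab)
print(lam, selected)
```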

C. Out-of-Sample Portfolio Strategy

1. Coefficient Averaging

To predict returns at month t+1, the paper uses coefficient estimates from a rolling 5-year window (i.e., the preceding 60 months). It calculates an exponential-decay-weighted average of each word’s coefficient across this window, denoted $\bar{b}_{j,t}$.
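The decay rate isn’t given in this summary, so the sketch below assumes a hypothetical half-life of 12 months. It weights the most recent monthly coefficient vector highest and counts months in which LASSO zeroed out a word as zeros. To avoid look-ahead, the history should contain only regressions whose next-month returns were already observed when the prediction is made.

```python
import numpy as np


def decayed_average(coef_history, half_life=12.0):
    """Exponentially weighted average of each word's monthly LASSO coefficient.

    coef_history: array of shape (months, words), oldest row first (e.g. the
    last 60 monthly coefficient vectors; months where a word was dropped are 0).
    half_life: months until a coefficient's weight halves; a hypothetical value.
    """
    coef_history = np.asarray(coef_history, dtype=float)
    n_months = coef_history.shape[0]
    age = np.arange(n_months - 1, -1, -1)   # 0 for the most recent month
    weights = 0.5 ** (age / half_life)
    weights /= weights.sum()                # weights sum to one
    return weights @ coef_history           # one averaged coefficient per word


rng = np.random.default_rng(0)
history = rng.normal(0.0, 0.01, size=(60, 5))  # 60 months x 5 words
b_bar = decayed_average(history)
print(b_bar)
```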

2. Stock-Level Predictions

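The predicted return for firm i at t+1 is $\tilde{r}_{i,t+1} = \sum_{j} \bar{b}_{j,t}\,\mathrm{word}_{i,j,t}$. Any intercept would be common to all firms in a given month, so it would not change the ranking.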

3. Decile (or Quintile) Portfolios

Sort stocks into decile portfolios based on $\tilde{r}_{i,t+1}$. A long–short strategy buys the top decile and shorts the bottom decile.

Assesses the strategy’s performance from 2005 to 2023, reporting both mean excess returns and factor-model alphas (CAPM, Fama-French-Carhart 4-factor, a broader 8-factor, and so on).
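A sketch of one month’s decile sort with pandas, assuming equal-weighted deciles (the paper may also report value-weighted results) and hypothetical inputs `pred` (predicted returns) and `realized` (next-month returns):

```python
import numpy as np
import pandas as pd


def long_short_return(pred, realized, n_bins=10):
    """Equal-weighted top-minus-bottom decile return for one month."""
    df = pd.DataFrame({"pred": pred, "ret": realized})
    # Rank first so that tied predictions don't produce duplicate bin edges.
    df["bin"] = pd.qcut(df["pred"].rank(method="first"), n_bins, labels=False)
    top = df.loc[df["bin"] == n_bins - 1, "ret"].mean()
    bottom = df.loc[df["bin"] == 0, "ret"].mean()
    return top - bottom


# Toy month: 100 stocks whose realized return rises with the prediction.
pred = np.arange(100)
realized = np.arange(100) / 1000
print(long_short_return(pred, realized))  # about 0.09
```

Repeating this every month produces the long–short return series that the factor-model alphas are then estimated on.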

D. Word Embeddings and Clustering

Word2Vec

Independently trains a word2vec (CBOW architecture) embedding model on all risk-section text, generating a 300-dimensional vector for each unique word.

Agglomerative Clustering

Uses hierarchical clustering (with cosine distance) to group the discovered “risky words” into 14 distinct, non-overlapping clusters such as Energy, Loan, Healthcare, Drug, etc.

Each cluster emerges because words in it appear in similar “contexts” in the risk sections.
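A sketch of the clustering step, assuming the word vectors have already been trained (for example with gensim’s `Word2Vec(sentences, vector_size=300, sg=0)`, where `sg=0` selects CBOW). It uses SciPy’s hierarchical clustering with cosine distance and average linkage; the linkage choice is an assumption, since the summary doesn’t say which one the paper used. The toy 2-D vectors stand in for 300-dimensional embeddings.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage


def cluster_words(words, vectors, n_clusters=14, method="average"):
    """Hierarchical (agglomerative) clustering of word vectors on cosine distance.

    Returns a dict mapping each word to a cluster id in 1..n_clusters.
    """
    Z = linkage(np.asarray(vectors, dtype=float), method=method, metric="cosine")
    labels = fcluster(Z, t=n_clusters, criterion="maxclust")
    return dict(zip(words, labels.tolist()))


# Toy 2-D "embeddings": energy words point one way, lending words another.
words = ["oil", "gas", "fuel", "loan", "mortgage", "bank"]
vectors = [[1.0, 0.05], [0.9, 0.1], [1.1, 0.0],
           [0.05, 1.0], [0.1, 0.9], [0.0, 1.2]]
clusters = cluster_words(words, vectors, n_clusters=2)
print(clusters)
```

Cosine distance ignores vector length, so words are grouped by the direction of their context vectors rather than by how often they occur.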

Interpreting “Risk Themes”

The paper shows which words fall into each cluster, how many times they appear with nonzero coefficients, and whether they predict positive or negative return premia on average.

What were the key results?

1. Portfolio Performance

A long–short decile strategy (based on the Risky Words predictions) earns annualized market-adjusted returns of up to 22% from 2005–2023.

Even if the smallest microcaps are excluded, the strategy still generates ~13% annual alpha.

The alphas remain statistically and economically large in CAPM, 4-factor, 8-factor, and 14-factor regressions.
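For readers less familiar with the term, “alpha relative to CAPM” is the intercept from regressing the strategy’s monthly returns on the market’s excess return. A minimal numpy sketch follows; annualizing by multiplying the monthly intercept by 12 is a simplifying assumption, and the multi-factor models and proper standard errors used in the paper are omitted.

```python
import numpy as np


def capm_alpha(strategy_ret, market_excess, periods_per_year=12):
    """OLS of monthly long-short returns on the market excess return.

    A long-short portfolio is self-financing, so its return is used directly
    (no risk-free rate subtracted). Returns (annualized alpha, beta), with the
    monthly intercept simply multiplied by periods_per_year.
    """
    y = np.asarray(strategy_ret, dtype=float)
    X = np.column_stack([np.ones_like(y), np.asarray(market_excess, dtype=float)])
    (alpha, beta), *_ = np.linalg.lstsq(X, y, rcond=None)
    return alpha * periods_per_year, beta


# Synthetic check: 1% per month of alpha on top of a 0.2 market beta.
rng = np.random.default_rng(1)
mkt = rng.normal(0.005, 0.04, 228)
ls = 0.01 + 0.2 * mkt
print(capm_alpha(ls, mkt))  # approximately (0.12, 0.2)
```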

2. Stability and Key “Risky Words”

The LASSO procedure repeatedly identifies a small core set (~50 words) with consistently nonzero coefficients. Examples include “oil,” “gas,” “loans,” “clinical,” “mortgage,” “manufacturing,” “software,” etc.

Some words reliably predict positive future returns (e.g., references to “oil” or “energy” often come with higher subsequent returns), whereas certain terms (like “loan,” “mortgage”) correlate with negative future returns on average.

3. Semantic Clusters

Grouping these words into 14 clusters (e.g., Energy, Healthcare, Loan, Drug, Manufacturing) shows that, when used together, they better predict returns than any single cluster alone.

Clusters such as “energy” have particularly time-varying coefficients that spike around oil-price shocks such as the 2014–2016 oil downturn, while the “loan” cluster spikes around the Global Financial Crisis of 2007–2009.

4. Independence from Industries, Sentiment, and Standard Factors

Double-sorts and Fama-MacBeth regressions confirm that the “risky words” strategy is not a mere reflection of industry bets, textual sentiment, or size–value–momentum exposures.

Controlling for Li’s (2008) readability measure, Cohen et al.’s (2020) measure of year-over-year changes in 10-K text, or the negative/positive word lists from Loughran & McDonald does not explain away the abnormal returns.

5. Macro Uncertainty Connection

The total number of risky words chosen each month correlates strongly with the VIX and the Economic Policy Uncertainty (EPU) index, suggesting that when the market environment is riskier, a larger variety of “risky words” matter for returns.
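Counting the words LASSO keeps each month and correlating that count with an uncertainty index takes only a few lines. The input shapes are assumptions: a months-by-words coefficient matrix and a matching monthly VIX or EPU series (the numbers below are made up).

```python
import numpy as np


def count_vs_uncertainty(coef_by_month, uncertainty):
    """Number of words with a nonzero coefficient each month, and the
    Pearson correlation of that count with a monthly uncertainty series."""
    counts = (np.asarray(coef_by_month) != 0).sum(axis=1)
    corr = np.corrcoef(counts, np.asarray(uncertainty, dtype=float))[0, 1]
    return counts, float(corr)


coefs = np.zeros((4, 5))          # 4 months x 5 words
coefs[1, :1] = 0.1
coefs[2, :3] = 0.1
coefs[3, :5] = 0.1
vix = [10.0, 12.0, 16.0, 20.0]    # made-up index levels, linear in the counts
counts, corr = count_vs_uncertainty(coefs, vix)
print(counts, corr)               # counts are [0 1 3 5]; corr is 1 up to rounding
```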

6. Randomization Tests

Randomly reassigning risk sections to firms or injecting artificial noise matrices destroys the predictive power, reinforcing that the results are not spurious or an artifact of the model specification.
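A simplified version of the reassignment placebo, assuming fixed coefficients rather than re-running the full LASSO pipeline as the paper does: shuffle which firm gets which disclosure, recompute the cross-sectional correlation between predictions and returns, and see where the real correlation falls among the shuffled ones. All names and data are hypothetical.

```python
import numpy as np


def placebo_test(X, returns, coefs, n_shuffles=200, seed=0):
    """Permutation test: real prediction-return correlation vs. correlations
    after randomly reassigning disclosures (rows of X) across firms."""
    rng = np.random.default_rng(seed)

    def corr_with_returns(matrix):
        return np.corrcoef(matrix @ coefs, returns)[0, 1]

    actual = corr_with_returns(X)
    placebo = np.array(
        [corr_with_returns(X[rng.permutation(len(X))]) for _ in range(n_shuffles)]
    )
    # One-sided p-value: share of shuffles at least as good as the real assignment.
    p_value = (1 + np.sum(placebo >= actual)) / (1 + n_shuffles)
    return float(actual), float(p_value)


rng = np.random.default_rng(7)
X = rng.random((500, 10))                      # toy TF-IDF weights
coefs = np.array([0.05, -0.05] + [0.0] * 8)   # toy averaged coefficients
returns = X @ coefs + rng.normal(0.0, 0.005, 500)
actual, p_value = placebo_test(X, returns, coefs)
print(actual, p_value)
```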

What were the key takeaways?

High-Dimensional Nature of Systematic Risks: The paper finds that risk exposures relevant to expected returns are neither captured by a single factor nor restricted to a small set. Instead, many different risk “themes” (energy, loan, drug, etc.) are priced in, and the importance of each theme is time-varying.

Firms’ Own Risk Disclosures are Predictive: The Risk Factors section, often perceived as boilerplate or too generic, contains clear signals about which risks the market prices. Investors demand higher expected returns if a firm discloses (and thus likely faces) exposures to certain systematic risks.

Textual Analysis > Traditional Factor Models?: A flexible textual approach powered by machine learning can discover priced risks that standard factor models overlook or bundle incorrectly. The paper demonstrates that the long–short “risky words” portfolio can even explain some known anomaly factors better than the market factor does.

Clustering as a Meaningful Economic Lens: The word2vec-based grouping helps interpret the “zoo” of discovered predictors, confirming that certain semantic fields (e.g., “healthcare” vs. “software”) represent qualitatively different systematic risk exposures.

Managerial & Research Implications

Practitioners might track dynamic textual signals in real time to build or hedge risk exposures.

Academics might integrate textual disclosures with factor modeling, especially in forecasting or explaining cross-sectional anomalies.

Limitations worth considering

Predictive Rather Than Causal: LASSO regressions are predictive tools. The paper interprets positive coefficients as indicating priced “risks,” but there is no formal causal identification. Future research may study the mechanisms more explicitly.

Word2Vec Training Across Entire Dataset: The word-embedding model is trained on the entire 2000–2023 corpus, not purely out of sample. Although the text embeddings are not used in the return-predictive step (only for clustering post hoc), it still draws on future textual context. A fully rolling approach might offer more “pure” out-of-sample embeddings.

Magnitude of Reported Alphas: Earning ~22% per year may raise questions about transaction costs, liquidity, or short-selling feasibility. The paper partially addresses microcap biases by excluding tiny stocks, but real-world implementation challenges (latency, slippage, short constraints) may reduce net returns.

Potential Overlap with Idiosyncratic or Fundamental Information: While extensive controls for firm characteristics are included, some portion of the discovered words might reflect idiosyncratic news or intangible capital. The paper argues the patterns appear systematic, but pinning down purely idiosyncratic vs. macro risk is still an open question.

Timing of 10-K Filings: The risk disclosures are filed at various times throughout the year. The study updates monthly, assuming the last filing remains “current” until a new 10-K is filed. In practice, some large events or 8-K disclosures might update risk factors mid-year. Future work could refine how quickly markets incorporate new disclosures.

Unsupervised vs. Supervised Tension: Existing topic-model or unsupervised text-mining approaches might group risks differently from the LASSO-based approach, which is purely return-supervised. The paper gives a supervised lens but does not systematically compare to an LDA or more advanced NLP approach.

Potential ROI from this “Risky Words” Strategy

1. Reported Annualized Performance

The paper’s main out-of-sample long–short decile strategy shows up to 22% annual alpha (market-adjusted return) from 2005–2023.

Excluding tiny stocks (e.g., bottom 20% by NYSE size), the strategy’s alpha is still around 13% per year.
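A sketch of the microcap filter, assuming the common convention of using the 20th percentile of NYSE-listed market caps as the monthly cutoff (the text above gives this only as an example):

```python
import numpy as np


def drop_microcaps(market_cap, is_nyse, pct=20):
    """Mask keeping stocks at or above the NYSE market-cap percentile cutoff."""
    market_cap = np.asarray(market_cap, dtype=float)
    cutoff = np.percentile(market_cap[np.asarray(is_nyse, dtype=bool)], pct)
    return market_cap >= cutoff


caps = [1, 2, 3, 4, 5, 100, 200]                 # toy market caps
nyse = [False, False, True, True, True, True, True]
mask = drop_microcaps(caps, nyse)
print(mask)  # cutoff is 3.8: [False False False  True  True  True  True]
```

Using NYSE breakpoints rather than all-stock breakpoints matters because the many tiny Nasdaq and AMEX listings would otherwise drag the cutoff down.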

2. Practical Notes

Rebalancing occurs monthly, with returns predicted from the most recent 10-K risk disclosures.

Real-world transaction costs, shorting constraints, or delays in acquiring 10-K text could trim these raw alphas, but the paper’s core finding is that there is significant predictive power.

How Many 10-K Filings to Analyze?

Number of 10-K Filings: The study examined ∼108,000 annual filings (10-K, 10-KA, etc.) containing a Risk Factors section from 2000–2023.

Firm-Month Observations: After matching with CRSP/Compustat data and rolling forward each “Item 1A” text until the next filing, the paper’s dataset has ∼1.23 million firm-month observations.

In practice, if you wanted to replicate the approach, you would need to parse and TF-IDF transform all current or recent 10-K risk sections for each stock you trade.
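Rolling each Item 1A text forward until the next filing is an as-of join. A sketch with pandas `merge_asof`, assuming hypothetical columns (`permno` as the firm identifier, `filing_date`, `month_end`, `filing_id`):

```python
import pandas as pd

filings = pd.DataFrame({
    "permno": [1, 1, 2],
    "filing_date": pd.to_datetime(["2020-03-01", "2021-03-01", "2020-06-15"]),
    "filing_id": ["A-2019", "A-2020", "B-2020"],
})
months = pd.DataFrame({
    "permno": [1, 1, 1, 2, 2],
    "month_end": pd.to_datetime(["2020-02-29", "2020-04-30", "2021-04-30",
                                 "2020-05-31", "2020-07-31"]),
})

# For each firm-month, attach the most recent filing dated on or before the
# month end; months before a firm's first filing get NaN.
panel = pd.merge_asof(
    months.sort_values("month_end"),
    filings.sort_values("filing_date"),
    left_on="month_end",
    right_on="filing_date",
    by="permno",
    direction="backward",
)
print(panel)
```

`merge_asof` requires both frames to be sorted on their date keys, which is why each one is sorted before the join.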

How could a person replicate this strategy?

In practice, you would:

Continuously gather new 10-K texts, then parse them and convert them to TF-IDF vectors.

Use LASSO on a rolling basis with cross-validation to detect the few dozen to few hundred “risky words” that matter for next-month returns.

Predict and build a monthly rebalanced long–short portfolio (or choose a confidence-weighted approach).

Aim for an annual alpha in the neighborhood of 10–15% in a realistic after-cost scenario, with the caveat that a microcap-heavy implementation could capture bigger returns but would also incur higher costs.

While no strategy can guarantee results, the study provides robust historical evidence that risk-factor text (properly filtered and weighted by LASSO) carries significant predictive power for cross-sectional returns.

Why this strategy is impractical for most people…

Unless you have an extremely high IQ and want to invest a lot of time, effort, and money, it’s likely not worth trying.

In fact, there’s no guarantee you’ll even have the success that was demonstrated in this paper… you may lose bigly to an ETF like QQQ.

And even if you manage to beat QQQ, you still might lose out to Bitcoin (BTC) and/or other ETFs like SMH.

That said, using this strategy may be more reliable/predictable… it’s just too big-brained for most people to attempt and the results may still lose to the Top Tech ETFs.