Cerebras Systems (CBRS) IPO: Wafer-Scale AI Chips to Compete with NVIDIA?

Will Cerebras Systems outperform NVIDIA in the AI accelerator market?

I took some time to research Cerebras Systems, a semiconductor company that specializes in AI hardware built around “wafer-scale” technology.

Why? Because Cerebras plans to IPO soon (2024-2025) with Class A common stock under the ticker “CBRS”, and it is being hyped as a potentially elite, specialized player in AI infrastructure.

While the moniker “NVIDIA killer” may be sensationalized (it has the ring of a Motley Fool headline), Cerebras has carved out a unique niche in the AI hardware space, which makes it a company worth analyzing for potential investment.

Cerebras claims to have built the “industry’s fastest AI accelerator” and reports running Llama 3.1 405B inference 12x faster than GPT-4o and 18x faster than Claude 3.5 Sonnet.

Flashback: In 2017, OpenAI considered teaming up with Tesla to buy Cerebras Systems. Had that happened, the current feud between Altman and Musk would be even more entertaining.

Cerebras Systems: What do they do?

Cerebras Systems is a technology company focused on creating hardware solutions specifically for AI and high-performance computing (HPC).

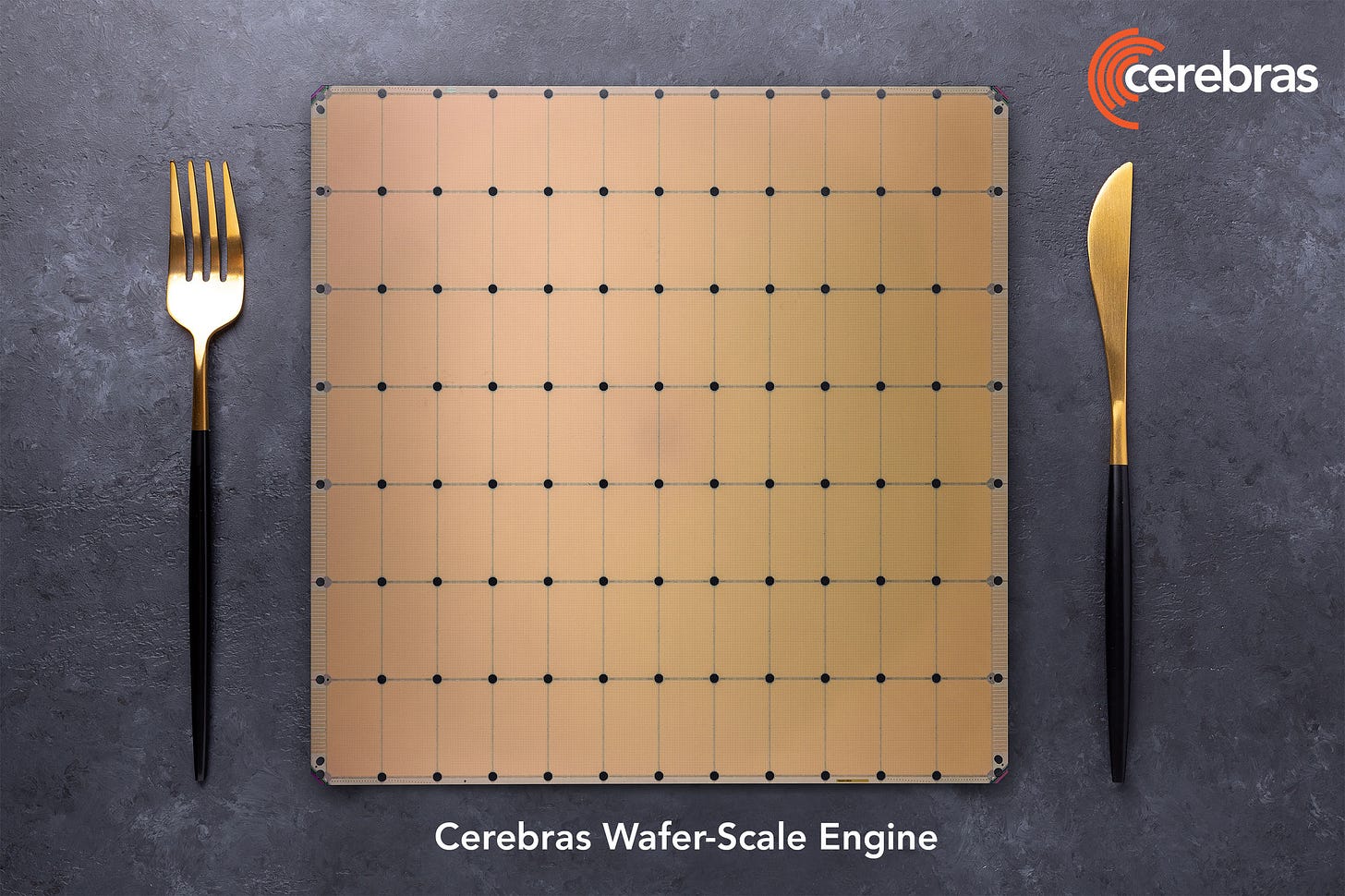

Known for developing the Wafer-Scale Engine (WSE), the largest AI processor ever built, Cerebras has redefined how computational power can be harnessed to process complex and data-intensive AI workloads.

The WSE, housed in Cerebras’ flagship CS system (the current generation is the CS-3), is engineered to accelerate AI model training and inference well beyond what traditional CPU and GPU architectures can achieve.

Founded: 2015

Founders: Andrew Feldman (CEO), Gary Lauterbach, Michael James, Sean Lie, Jean-Philippe Fricker

Headquarters: Sunnyvale, California (Silicon Valley)

Cerebras (CBRS) IPO Details (Pre-IPO)

Cerebras is expected to go public in 2024 or 2025.

Target raise: $750M-$1B

Valuation: Estimated between $7 billion and $8 billion

Ticker Symbol: CBRS

Underwriter: Citigroup

Employees: 401, with revenue per employee of $514,918 (based on trailing 12-month revenue)
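A quick sanity check on these numbers: dividing the trailing 12-month revenue through June 2024 ($206.48M, from the revenue section) by headcount reproduces the revenue-per-employee figure, and the same revenue gives a rough price-to-sales range for the estimated valuation. This is a minimal Python sketch, assuming the valuation is compared against TTM revenue; the helper names are my own, not from any filing. The small gap versus $514,918 comes from using the rounded $206.48M input.

```python
# Rough valuation arithmetic using only figures quoted in this post.
# Assumption: the $7B-$8B estimate is compared against trailing-12-month
# (TTM) revenue through June 2024. Helper names are hypothetical.

TTM_REVENUE = 206.48e6          # USD, trailing 12 months to June 2024
EMPLOYEES = 401
VALUATION_RANGE = (7e9, 8e9)    # USD, estimated valuation range


def revenue_per_employee(revenue: float, headcount: int) -> float:
    """Revenue divided evenly across headcount."""
    return revenue / headcount


def price_to_sales(valuation: float, revenue: float) -> float:
    """Valuation expressed as a multiple of revenue."""
    return valuation / revenue


rpe = revenue_per_employee(TTM_REVENUE, EMPLOYEES)
low_ps, high_ps = [price_to_sales(v, TTM_REVENUE) for v in VALUATION_RANGE]

print(f"Revenue per employee: ${rpe:,.0f}")
print(f"Price/sales on TTM revenue: {low_ps:.1f}x to {high_ps:.1f}x")
```

That puts the estimated valuation at roughly 34x to 39x trailing sales.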

CBRS: 2023-2024 Revenue Growth

Cerebras has demonstrated high revenue growth, underscoring its increasing market adoption.

2023 Revenue: $78.74 million (+219.85% from 2022)

Trailing 12 Months (June 2024): $206.48 million

H1 2024 Revenue: $136 million
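The reported figures also let you back out a few numbers that aren't stated directly. A minimal sketch, assuming the +219.85% is year-over-year growth over 2022 and that the TTM window covers H2 2023 plus H1 2024. Because the inputs are rounded, treat the outputs as approximations.

```python
# Back out figures implied by the reported revenue numbers.
# Assumptions: "+219.85%" is year-over-year growth on 2022 revenue, and the
# TTM figure (to June 2024) = H2 2023 + H1 2024. Inputs are rounded, so the
# derived values are approximate.

REV_2023 = 78.74       # USD millions, full-year 2023
GROWTH_2023 = 2.1985   # +219.85% expressed as a fraction
TTM_JUN_2024 = 206.48  # USD millions, July 2023 through June 2024
H1_2024 = 136.0        # USD millions

rev_2022 = REV_2023 / (1 + GROWTH_2023)   # undo the growth rate
h2_2023 = TTM_JUN_2024 - H1_2024          # TTM minus the latest half-year
h1_2023 = REV_2023 - h2_2023              # full year minus its second half

print(f"Implied 2022 revenue:    ${rev_2022:.2f}M")
print(f"Implied H2 2023 revenue: ${h2_2023:.2f}M")
print(f"Implied H1 2023 revenue: ${h1_2023:.2f}M")
```

On these inputs, most of 2023's revenue landed in the second half (roughly $70M versus about $8M in H1).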

Group 42 (G42): Customer concentration

G42 (Group 42), a major UAE-based AI tech holding company, accounted for over:

83% of revenue in 2023

87% of revenue in H1 2024
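To put this concentration in dollar terms, multiply each period's revenue by G42's share. A minimal sketch, assuming the percentages apply to the revenue totals quoted in the revenue section ($78.74M for 2023, $136M for H1 2024). Since G42's share is "over" these values, the G42 figures are floors and the remainder for all other customers is a ceiling.

```python
# Lower-bound dollar revenue attributable to G42.
# Assumption: the shares apply to the revenue totals quoted earlier in this
# post; "over" means the G42 amounts are floors.

periods = {
    "2023": (78.74, 0.83),     # (revenue in USD millions, G42 share)
    "H1 2024": (136.0, 0.87),
}

floors = {}
for period, (revenue_m, g42_share) in periods.items():
    floors[period] = revenue_m * g42_share
    rest = revenue_m - floors[period]
    print(f"{period}: G42 > ${floors[period]:.1f}M, "
          f"all other customers < ${rest:.1f}M")
```

That leaves less than about $13.4M in 2023 and less than about $17.7M in H1 2024 from every other customer combined.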

G42 uses Cerebras hardware to build supercomputers that power LLMs and run advanced data analytics (e.g., genomic analysis).

G42 was founded in 2018 in Abu Dhabi and is chaired by the UAE’s national security advisor, Tahnoun bin Zayed Al Nahyan.

Microsoft invested $1.5B in G42, and Brad Smith (Microsoft’s vice chair) joined G42’s board. OpenAI partnered with G42 in October 2023.

While the concentration in G42 raises dependency concerns, it also highlights Cerebras’ ability to secure and scale major partnerships.

Cerebras USP: Wafer-Scale Tech

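The Wafer-Scale Engine (WSE) gives Cerebras a distinct edge. Because the entire processor is built on a single silicon wafer, its cores communicate over on-chip fabric instead of the chip-to-chip links that GPU clusters rely on. This directly addresses limitations of traditional CPU- and GPU-based architectures such as interconnect bandwidth, scaling challenges, and energy inefficiency.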

4 trillion transistors and 900,000 AI-optimized cores: The largest AI processor in the world (WSE-3), built on TSMC’s 5nm process.

125 petaFLOPS peak performance: Cerebras positions a single system as rivaling GPU clusters, at far higher efficiency.

44GB of on-chip SRAM and support for up to 1.2PB of external memory: Breaking traditional memory bottlenecks.

Energy Efficiency: Delivers twice the performance of its predecessor (the WSE-2) at the same power draw.
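Dividing the headline specs by the core count gives a feel for how the wafer is partitioned. A rough sketch, assuming GB means 10^9 bytes and that peak throughput and transistors are spread evenly across cores. The transistor figure is averaged over everything on the wafer (fabric, memory, logic), not just compute.

```python
# Per-core ratios derived from the headline WSE specs listed above.
# Assumptions: GB = 10**9 bytes; peak FLOPS and transistors are divided
# evenly across all cores (a simplification, not a published per-core spec).

TRANSISTORS = 4e12
CORES = 900_000
PEAK_FLOPS = 125e15          # 125 petaFLOPS
ON_CHIP_SRAM_BYTES = 44e9    # 44 GB

sram_per_core_kb = ON_CHIP_SRAM_BYTES / CORES / 1e3
flops_per_core_g = PEAK_FLOPS / CORES / 1e9
transistors_per_core_m = TRANSISTORS / CORES / 1e6

print(f"SRAM per core:        ~{sram_per_core_kb:.1f} KB")
print(f"Peak per core:        ~{flops_per_core_g:.1f} GFLOPS")
print(f"Transistors per core: ~{transistors_per_core_m:.2f} million")
```

The point of the exercise: the WSE's memory is distributed as small SRAM blocks next to each core, rather than pooled in off-chip HBM as on a GPU.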

Performance:

Model Training: Can train AI models with up to 24 trillion parameters.

Scaling: Clusters of up to 2,048 systems.

Rapid Fine-Tuning: Fine-tunes 70-billion-parameter models in one day with just four systems.
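Two quick derived figures from the performance claims. A minimal sketch, assuming peak performance scales linearly with the number of systems (an upper bound; real clusters lose some efficiency) and that the 24-trillion-parameter figure corresponds to the 1.2PB external memory ceiling.

```python
# Scale-out arithmetic from the figures above.
# Assumptions: peak performance scales linearly with system count (a
# theoretical upper bound, not a measured result), and the 24T-parameter
# limit is paired with the 1.2 PB external memory ceiling.

PEAK_PER_SYSTEM_PF = 125          # petaFLOPS per system
MAX_SYSTEMS = 2048
EXTERNAL_MEMORY_BYTES = 1.2e15    # 1.2 PB
MAX_PARAMS = 24e12                # 24 trillion parameters

cluster_peak_ef = PEAK_PER_SYSTEM_PF * MAX_SYSTEMS / 1000   # exaFLOPS
bytes_per_param = EXTERNAL_MEMORY_BYTES / MAX_PARAMS

print(f"Theoretical cluster peak: {cluster_peak_ef:.0f} exaFLOPS")
print(f"External memory per parameter: {bytes_per_param:.0f} bytes")
```

The 256 exaFLOPS figure is a theoretical peak, not a benchmark. Roughly 50 bytes of external memory per parameter presumably leaves room for optimizer state alongside the weights themselves.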