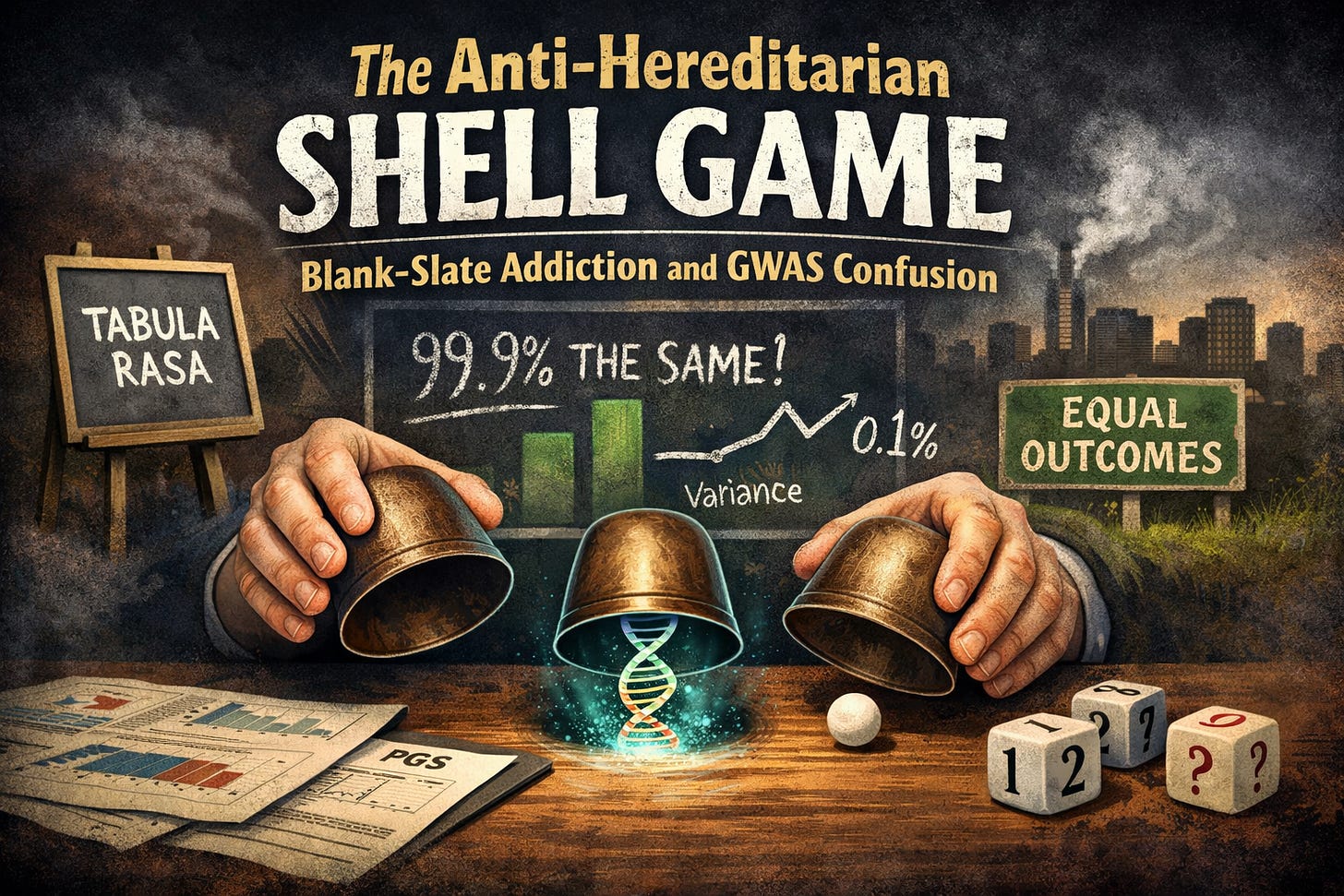

The Anti-Hereditarian Shell Game: Blank Slate Addiction & GWAS Confusion

"We are 99.9% the same"... ad infinitum.

Anyone who can (1) observe reality (history + the present), (2) think in first principles and feedback loops, and (3) interpret data without flinching already knows the core truth: for most human traits, genes run the show.

The Blank Slate isn’t just a fallacy; it’s an intellectual narcotic. It seduces the masses of randoms into believing they’re just one 80,000-hour grind or a few more books away from becoming Einstein or Elon.

I’d love that to be true. We could just tweak a few environmental knobs, dump money into “higher-quality education” and VOILA — the entire U.S. is printing geniuses like The Fed prints fiat.

But biology doesn’t take bribes.

Humans are the cold output of evolution, and evolution doesn’t deal in blank slates. It deals in trait distributions. It yields a hard-coded variance in ability, temperament, and impulse control.

Selection doesn’t spend eons sculpting the individual; it carves the population. You aren’t a lump of clay; you’re the refined edge of a billion-year-old blade.

Meanwhile, most “science messaging” isn’t innocent error — it’s motivated narrative management and malicious gatekeeping by left-wing (progressive/liberal) woke academics and scientists.

Environment gets embellished. Genetics gets minimized. And anyone who notices gets smeared as immoral for even looking.

Public-facing messaging consistently relies on 3 repeatable talking points because they are persuasive — not because they’re accurate.

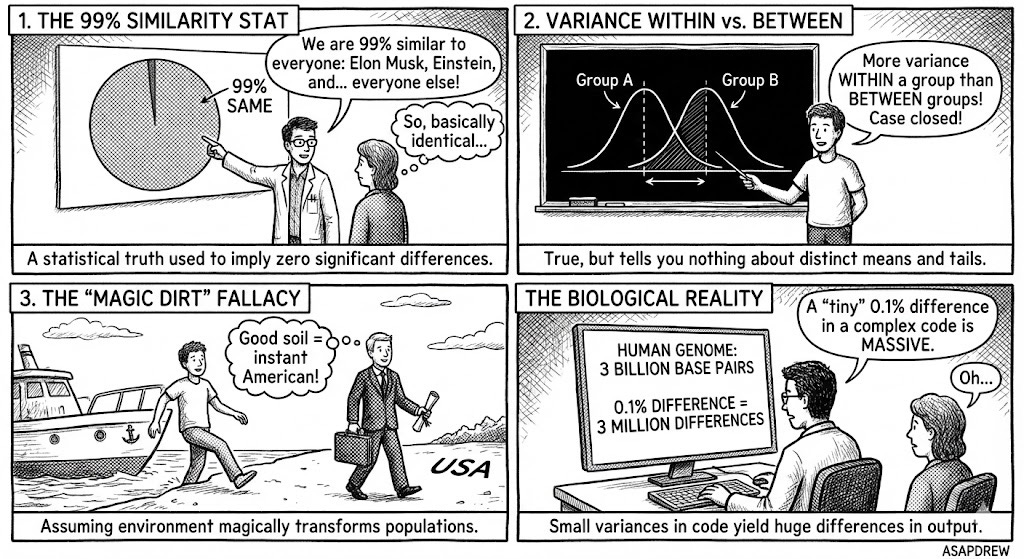

1. Humans are 99.9% the Same (Psychological trickery)

This is a statistical truth leveraged dishonestly to plant the idea that we are all so similar that there are basically zero genetic differences of significance… and you are racist, xenophobic, a Hitlerian eugenicist or whatever if you even think about this.

People of different races, ethnicities, etc. are extremely genetically similar.

You and I are 99.9% similar at the base-pair level to Elon Musk, Adolf Hitler, criminal warlords, super geniuses, people with intellectual disabilities, etc.

This is just a really fucking stupid way to think.

If 1% of DNA is the genetic difference between a chimp that throws its own feces and a creature that builds a SpaceX Falcon 9, then 0.1% within humans is more than enough of a difference to account for the gap between a 70 IQ laborer and a Nobel laureate.

Yet anti-hereditarians can’t resist the urge to repeatedly spam: “We ArE 99.9% tHe SaMe!!!”

This is a base-pair (single-letter) talking point framed to make the general public feel like the differences are objectively trivial. Yet a simple “0.1%” slice is still millions of base pairs. (R)

And when you include other kinds of variation (not just single-letter swaps), NHGRI notes that any two people’s genomes are closer to ~99.6% identical and ~0.4% different on average. (R)

Reality: Humans are complex systems and the code is non-linear. A 0.1% change in a 3-billion-letter sequence is 3 million differences. If a 1.2% difference creates the gap between a chimpanzee and a nuclear physicist, why would anyone assume 0.1% is trivial?

The same institutions that chant “tiny differences don’t matter” will also casually admit things like this:

Human vs chimp: AMNH says human and chimp DNA is ~98.8% the same, and that the remaining 1.2% is ~35 million differences. (R)

Human vs bonobo: A Nature bonobo genome analysis reports bonobo sequences are ~98.7% identical to human in single-copy autosomal regions. (R)

Human vs mouse: Even mice share ~85% similarity in protein-coding parts of the genome. (R)


Point: Percentages are a helluva narcotic. “Only 1% different” can still mean tens of millions of differences. That’s not “basically the same.” That is a fuck ton of biological instruction.
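The percentages quoted above convert into rough counts with one line of arithmetic. Here is a minimal sketch, assuming the round 3-billion-letter genome length used in this piece (the constant and function name are illustrative, not taken from any cited source):

```python
# Convert "percent different" into an approximate count of differing base pairs.
# GENOME_BP is the round 3-billion figure used in the text, not a precise genome length.
GENOME_BP = 3_000_000_000


def differing_base_pairs(percent_different: float, genome_bp: int = GENOME_BP) -> int:
    """Return the approximate number of base pairs covered by a percentage difference."""
    return round(genome_bp * percent_different / 100)


for label, pct in [
    ("human vs human, single-letter swaps (0.1%)", 0.1),
    ("human vs human, all variation (0.4%)", 0.4),
    ("human vs chimp (1.2%)", 1.2),
]:
    print(f"{label}: ~{differing_base_pairs(pct):,} bp")
```

With the round 3-billion figure, 1.2% comes out at about 36 million, in line with the ~35 million AMNH quotes. Note that this counts positions that differ. It does not say how many of those positions affect any trait.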

This talking point is so brutally misleading to the general public, it should make you sick to your stomach. Why? Because this ~0.1% difference is essentially the gap between (A) intellectually disabled and (B) John von Neumann.

2. More Variance within Groups than Between Groups

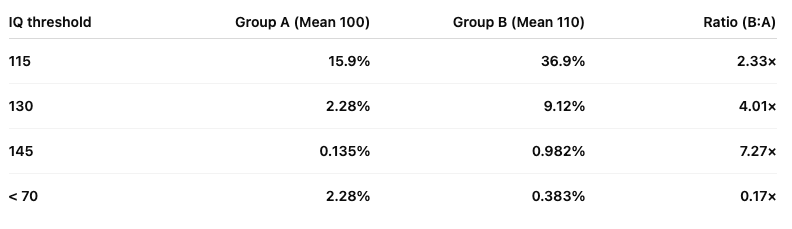

Completely true and insanely abused. Even small mean (i.e. average) shifts yield massive, multiplicative changes in tail mass (and tails make or break countries).

Racial and ethnic groups have distinct trait distributions downstream of selection effects and evolutionary pressures.

Woke academics leverage this “more variance within groups than between” spam to imply that all groups are basically the same… you can swap one out for another and get a roughly similar outcome.

The "Lazy Native" Fallacy

To add fuel to the fire, they frequently highlight the bottom tail of the native population in the U.S. (i.e. "unproductive, lazy white trash") and use them as a human shield to push immigration policy.

The argument goes: "Since your own group has low-performers, you have no moral right to exclude immigration from failed states."

This is a downright malicious con.

The native-born population — especially those downstream from the nation-builder (pre-welfare/handout) stock — has a birthright to their land that isn't contingent on an IQ test or a productivity score.

You don't get to use the existence of a group's "low-performers" to justify the demographic replacement of their ancestors' legacy if they don’t want it.

Wokes point at the bottom tail of the native population and say:

“See? Even in the U.S. there are tons of low performing Whites — so borders are immoral and selection doesn’t matter.”

Like look at all these unproductive lazy white fuckers in the U.S. who were lucky to be born there! Therefore you should allow unfettered immigration from failed states because they deserve a chance too.

The Reality of the Tails

Even if groups overlap, the “Between Group” differences are what actually dictate the fate of a civilization. Small differences in means/medians matter quite a bit and compound significantly, but the other massive impact is a population’s tails (ratio of extreme competence/genius to extreme dysfunction).

If you shift a population’s mean even slightly (e.g. ~0.5 SD), you don’t just get a “slightly different” society. You get a massive, exponential shift in the number of inventors, surgeons, and founders versus the number of violent predators and dependents.

A 10-point gap in the mean creates ~7 times more geniuses (e.g. IQ > 145) in the higher performing group. That’s the difference between a tech-hub and a rust belt.
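The "~7 times" figure is a property of normal-distribution tails and can be checked directly. A minimal sketch, assuming two normal distributions with the same standard deviation of 15 and means of 110 and 100 (the function name and parameters are illustrative). The calculation is pure arithmetic on the curves: it is silent on what causes a mean difference, and real score distributions are not exactly normal this far out in the tail.

```python
from statistics import NormalDist


def tail_ratio(mean_a: float, mean_b: float, threshold: float, sd: float = 15.0) -> float:
    """Ratio of the share above `threshold` in distribution A to the share in B.

    Assumes both distributions are normal with the same standard deviation.
    """
    share_a = 1.0 - NormalDist(mean_a, sd).cdf(threshold)
    share_b = 1.0 - NormalDist(mean_b, sd).cdf(threshold)
    return share_a / share_b


# 10-point mean gap, threshold at 145 (3 SD above a mean of 100).
print(f"ratio above 145: {tail_ratio(110, 100, 145):.1f}")

# The same gap gives a much smaller ratio at a lower threshold.
print(f"ratio above 115: {tail_ratio(110, 100, 115):.1f}")
```

Under these assumptions the ratio above 145 comes out a little over 7. The ratio depends heavily on the threshold: the further out the cutoff, the larger the multiplier.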

The Trap: Just because there is variance within a group doesn’t mean the groups are interchangeable. Knowing there is variance within a population tells you absolutely nothing about the distinct, hard-coded averages that separate different evolutionary lineages.

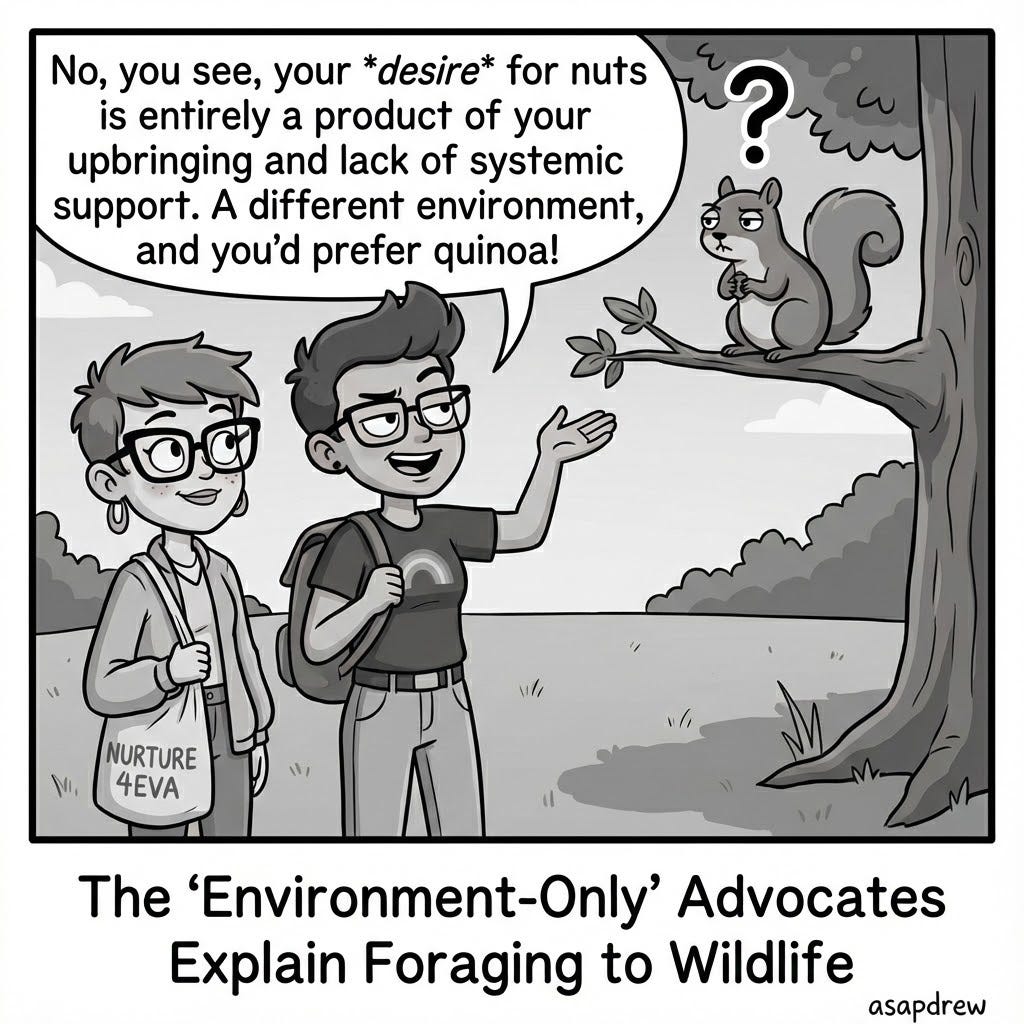

3. The “Magic Dirt” Fallacy (Immigration)

This is where the logic falls apart completely. People assume that because we have “good soil” or “democracy,” we can import any population, and the environment will magically transform them into Americans.

Migration is the ultimate test of the “Nature vs. Nurture” debate.

If the “Magic Dirt” theory were true, moving a population from a failed state to a high-trust, first-world environment would cause their outcomes to converge with the native population within a generation.

We have run this experiment. It doesn’t happen. They do improve but typically remain at least 0.5-1.5 standard deviations below the native population in IQ.

The Step-Function vs. The Slope

Environmentalists confuse a one-time Step Function for a continuous Slope. This is because they don’t understand the Law of Environmental Saturation.

Environment isn’t a linear slope; it’s a concave curve. Remove catastrophic constraints (disease, malnutrition, toxins, illiteracy) and you get huge gains.

After that? Returns collapse. Past the saturation point, government spending on education doesn’t manufacture geniuses — it mostly funds new stories for why the geniuses didn’t appear.

Sure when you move people from extreme deprivation to the U.S., you see an initial bump (e.g., IQ 70 → 85) as you optimize nutritional and educational effects.

Yet the “LeBron James School” model assumes that if we just pour more money in, that line keeps going up to 100, then 110, then 120, etc. Reality: It doesn’t.

Once the environmental low-hanging fruit is harvested, the group hits its genetic ceiling and stabilizes — often still roughly 1 SD below the native mean. You get a boost, not a transformation.

Traits Beyond IQ (The “Hidden” Phenotype)

Assimilation implies that only GDP matters.

But populations differ in heritable traits beyond raw intelligence: clannishness (tribal norms vs. impersonal institutions), corruption propensity, time preference (delay gratification vs. life-for-today), and violence propensity.

These are not “software” patches you can overwrite with a civics class; they are part of the group’s evolutionary hardware.

A high-IQ but highly clannish/corrupt group builds a fundamentally different society than a high-IQ, high-trust group.

The “Magic Dirt” fallacy assumes people are interchangeable economic units. They are not. They are biological carriers of deep time.

These patterns are sticky, self-reinforcing, and contagious in both directions. Scale the inflow high enough and the host system changes — not because dirt is magic, but because institutions are downstream of people’s repeated behaviors at scale.

It’s Genetics (Stupid)

This isn’t an open question or even up for debate.

In a modern, stable country (outside of zones of extreme deprivation) persistent differences in ability, temperament, and life outcomes are primarily biology-driven.

The standard objection is: “But wait! Evolution proves that environment shapes genes!”

This confuses timescales. Deep Time (Evolution) writes the code. Real Time (Civilization) runs the program.

Deep Time (Evolution): Over thousands of years, harsh environments and selection pressures indeed shape the genome.

Real Time (Civilization): Over decades or centuries—the timescale of nations and policy—the arrow of causality reverses. The genome shapes the environment.

We are currently operating with the hardware we inherited. In a modern welfare state, we have removed the raw mortality pressures that drove upward evolution in the past.

In fact, current selection pressures are likely modestly dysgenic (selecting against intelligence and delayed gratification), as higher-IQ groups reproduce below replacement levels. (R)

We certainly aren’t selecting for the traits that built the modern world. In 2025, “environment” is mostly the scoreboard, not the underlying engine.

Bottom line: On civilizational timescales, “environment” is mostly downstream of people’s repeated behavior at scale. That’s the engine, not the soil.

The “Missing Heritability” Hoax

For over a decade, “missing heritability” was the favorite cudgel of people who really didn’t want genes to matter very much for complex human traits like IQ and educational attainment.

The setup was elegant: treat the limits of measurement technology as limits of biology itself.

Early array-based GWAS (common-variant tag scans) were like a low-resolution camera from 2005. They only scanned common genetic markers (like picking a few words out of a book) and missed rare variants and complex structures. Because the camera was blurry, the genetic influence looked small.

GWAS/PGS were never a serious ceiling on “how genetic” anything is.

GWAS is a cheap tag-scan of mostly common variants, with imperfect linkage tagging, crude phenotypes, and (historically) weak sample sizes.

A low PGS R² (predictive accuracy) is not a deep truth about biology — it mostly says “prediction is hard with a blurry sensor.”

Meanwhile, twin and adoption studies routinely found substantial heritability.

Yet early GWAS could only “find” common variants explaining maybe 10–20% of variance in many traits. (R)

Here’s what people were confusing:

h² (heritability): Share of variance explained by genetic differences in a population, given the current range of environments.

SNP h² / GWAS h²: How much variance is tagged by the common SNPs you measured/imputed under a basic model (a known lower bound).

PGS R²: How well we can predict right now with today’s GWAS weights (a prediction limit, not a “genes only matter this much” limit).

Translation:

Heritability asks: “How much of the spread is genetic?”

GWAS h² asks: “How much can our cheap tag-scan see?”

PGS R² asks: “How good is today’s prediction model?”
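The three quantities above can be told apart in a toy simulation. A minimal sketch, assuming made-up variance components (0.3 tagged genetic, 0.2 untagged genetic, 0.5 everything else) and a polygenic score modeled as the tagged signal plus weight-estimation error. None of these numbers are estimates for any real trait, and all variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000

# Hypothetical variance components (illustrative only, not real-trait estimates).
g_tagged = rng.normal(0.0, np.sqrt(0.3), n)    # genetic signal a common-variant array can see
g_untagged = rng.normal(0.0, np.sqrt(0.2), n)  # rare/structural signal the array misses
rest = rng.normal(0.0, np.sqrt(0.5), n)        # everything non-genetic
y = g_tagged + g_untagged + rest

# h2: share of trait variance due to all genetic differences.
h2 = np.var(g_tagged + g_untagged) / np.var(y)

# SNP h2: share of trait variance due to the tagged part only.
snp_h2 = np.var(g_tagged) / np.var(y)

# PGS R2: squared correlation between a noisy score and the trait.
# The score is the tagged signal plus estimation error in the weights.
pgs = g_tagged + rng.normal(0.0, np.sqrt(0.3), n)
pgs_r2 = np.corrcoef(pgs, y)[0, 1] ** 2

print(f"h2={h2:.2f}  snp_h2={snp_h2:.2f}  pgs_r2={pgs_r2:.2f}")
```

By construction the three numbers land near 0.50, 0.30 and 0.15. Same simulated trait, three different questions, three different answers, and the ordering PGS R² < SNP h² < h² holds.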

Confusing these is like claiming a disease doesn’t exist because your X-ray machine is too old to see it.

The entire “Blank Slate” survival strategy relied on one simple trick: Confusing a blurry photo with reality.

For years, anti-hereditarians pointed at early DNA studies and said:

“Look! Our DNA models can only predict 10% of the difference between people. Therefore, genes barely matter.”

This was a category error. It treats a limit of the technology as a limit of biology.

This wasn’t a deep rebuttal. It was a sensor trick. They treated a cheap tag-scan (SNP h² / PGS R²) like it was the full causal contribution of the genome, then called everything else “shared environment” to keep the Blank Slate story alive.

The skeptics weaponized this blurriness. They argued:

“Twin studies claim IQ is ~80% heritable, but our tag-scan only finds 10%. Therefore, findings from the twin studies are misleading and/or inflated.”

Put more simply: GWAS was marketed to the general public as the most accurate way to measure the heritability of traits like IQ. This let anti-hereditarians downplay twin studies with something like: “Sure, twin studies show that, but our population-level GWAS results say that’s bullshit, so genes don’t matter much!”

The Nuke: Whole-Genome Sequencing (WGS)

New technology (Whole Genome Sequencing a.k.a. “WGS”) allowed us to upgrade from a blurry snapshot to a 4K video. We stopped "scanning" and started reading every single letter of DNA.

WGS switches the input data from common-variant tag scans to the full sequence, including rare and structural variation.

A massive 2025 whole‑genome sequencing (WGS) study in Nature, “Estimation and mapping of the missing heritability of human phenotypes” by Wainschtein, Yengo et al., analyzed ~347,000 UK Biobank genomes using ~40 million variants and a GREML‑WGS framework.

Key findings:

When you read the whole sequence (including rare variants), the DNA explains ~88% of the heritability that pedigree (family-based) studies estimated.

For traits like height, the DNA estimate now matches the pedigree-based estimate almost perfectly.

On average across 34 traits, WGS variants explain ~88% of pedigree‑based narrow‑sense heritability (about 68% from common variants and 20% from rare variants).

For many highly powered traits (e.g. height), there is no meaningful difference between sequence‑based and pedigree‑based estimates. (R)

In other words: Classic family designs were mostly right about additive heritability. Early GWAS were underpowered and incomplete. For complex traits you miss significant heritability if you don’t use WGS to detect the rare variants.

The anti-hereditarian position didn’t update.

If you were genuinely updating on evidence, you’d say something like:

“Okay, classic family designs were mostly right about additive heritability. Early GWAS were underpowered and incomplete.”

Instead, for those deeply committed to minimizing genes in public narratives, something else happens: the goalpost itself moves.

What counts as “genetic” changes.

The metric used changes.

The methods you trust change.

The parts of the pipeline you spotlight vs ignore change.

This is the anti-heritability shell game.

In this piece I’ll highlight recurring tactics and deep motives that make them attractive to woke scientists and academics.

You can believe some of these in good faith but that’s not the point.

The point is: taken together, they let the “genes are small, environment is huge” story survive, even when the data are marching the other way.

Hiding the Effects of Genes

To see the anti-hereditarian shell game, you need a clean mental model of where traits actually come from.

Start with IQ (or whatever you care about). Strip away the crappy psychometrics and imagine an alien brain‑scanner that reads out latent g (i.e. general intelligence) perfectly: no test noise, no cultural bias, no “did you sleep badly last night”.

Also strip away the fake dichotomy where genes are “little knobs” and everything else is “environment”.

What you actually have is:

A genome – a big combinatorial rulebook, not a JPEG.

An unfolding process – the DNA → brain build process: which genes switch on/off when, how wiring is laid down, how timing differences get amplified, and how the system self-tunes over time (gene regulation, chromatin folding, tissue growth, cortical wiring, etc.)

That system embedded in a physical context – womb, placenta, hormones, nutrients, infections, later sensory input, etc.

The key point: most of what people wave away as “environment” in humans is either:

directly controlled by someone’s genome, or

the predictable output of those unfolding rules given the kind of world humans have actually built.

So when we talk about variance in IQ (or anything else), the conceptually right decomposition is something like:

IQ ≈ (G₁ direct) + (G₂ gene-shaped environment) + (E exogenous) + (R noise)

Where:

G₁ – Direct genetic + unfolding effects (“within‑skin blob”)

This is everything that starts in your DNA and propagates forward via the laws of physics and development:

The exact sequence: SNPs, indels (insertions and deletions), rare variants, structural variants, CNVs, etc.

The 3D folding pattern of your genome in each cell type (TAD boundaries, enhancer–promoter loops, etc.), which changes which genes “see” which regulatory elements.

The developmental program: how your brain actually grows and wires — neuron counts, migration patterns, synaptic density, receptor profiles, cortical thickness, white‑matter tracts, etc.

The way your nervous system updates itself over time in response to input, given that starting architecture and those plasticity rules.

This isn’t “genes vs environment”. This is genes‑plus‑unfolding as one physical dynamic system. Two people can have very similar genotypes at the coarse level but different rare variants, different structural variants, different chromatin architecture, slightly different developmental timing, etc. That yields different brains, different stable attractors in state space, different “default policies” for cognition and behavior.

When most people say “I believe IQ is ~80%+ heritable once you control for obvious environment”, what they actually mean is:

“Given a modern rich country and no catastrophic deprivation, most of the stable differences in people’s cognitive ability are explainable by G₁: their full personal genome plus how it unfolds into a physical brain.”

Not just “SNP list in a spreadsheet”. The entire permutational blob: full WGS, all the rare stuff, all the structural/folding patterns, all the internal interactions.

G₂ – Indirect genetic effects (genetically structured environment)

This is why “genes vs environment” is a fake binary. G₂ is not “your DNA” (G₁), and it’s not a free-floating, plug‑and‑play “environment” either.

Important clarifier: G₂ isn’t just “other people’s genes.” It includes any environment systematically produced by heritable traits — including the environments your own temperament and ability cause you to seek, evoke, and build. The point is: it’s genome-structured and therefore not a free, copy-paste policy dial.

You can’t just clone a high‑IQ, high‑conscientiousness home/peer ecology and hand it out like a school voucher — because that “environment” is largely produced by other people’s heritable traits interacting and assorting.

So I treat G₂ as its own category: an environment term that is genetically structured and therefore not an independent policy dial.

Parents’ genomes → their traits → your “home environment”. Their IQ, conscientiousness, mental health, income, stability, values, and even uterine biology are genetically influenced. Those in turn determine prenatal care, nutrient supply, toxin exposure, stress hormones, how much they talk to you, what books are in the house, how chaotic things are, etc.

Your own genome → how others treat you (evocative effects). High‑g, low‑neuroticism kid gets very different feedback and opportunities from teachers and peers than a low‑g, high‑disruptiveness kid, even in the same school.

Your own genome → which environments you seek (active effects). Some kids are drawn to books and coding; others to risk and chaos. That’s not a purely free variable; it’s heavily temperament‑driven.

Peers’ genomes → peer environment (social genetic effects). The “culture” of your friend group or cohort isn’t random; it’s the aggregate behavior of a genetic sample.

Kong et al. (2018) show this cleanly: parents’ non-transmitted alleles still predict children’s educational outcomes via the environments parents create (“genetic nurture”). That’s G₂ in the flesh. (R)

Follow‑ups using sibling and friend polygenic scores show the same pattern: genotypes sitting around you matter, even when they aren’t inside you.

So conceptually, G₂ is:

Everything in your “environment” that is systematically caused by somebody’s genome.

The G₂ Extended Phenotype: Think of G₂ as the beaver’s dam. The dam is “outside the beaver” (environment), but it’s built by beaver behavior — which is downstream of beaver DNA (G₁). You can’t “hand out” dams to squirrels and expect squirrel civilization to become beaver civilization.

When blank-slaters point at a high-trust neighborhood and call it “environment,” they’re staring at a human dam and pretending the builders don’t matter.

Prenatal “environment”? Heavily shaped by maternal biology — which is itself genetically influenced.

Household “environment”? Strongly shaped by parents’ traits and stability — again, substantially heritable.

Peer “environment”? Not random either: who clusters with whom is driven by temperament, ability, and assortative sorting.

Calling all that “environment” and then using it to argue “see, it’s not genes” is pure re‑labeling and extremely misleading to the general public.

Why? When they think of “environment” — they are thinking of things that you can essentially copy across the population for a big effect.

E – Exogenous environment (what’s actually non‑genetic)

This is the stuff that doesn’t track any particular genome in a neat way:

National policy, school funding formulas, tax code.

Lead in the pipes vs no lead, generalized air pollution.

Which specific teacher you randomly get in 4th grade.

Whether a random drunk driver hits you at 17.

Whether your country has a civil war while you’re in high school.

It matters, and in extreme cases it can nuke everything else (famine, war, industrial levels of lead). But once you’re in a rich, relatively stable society with basic public health, E is much flatter within that country than people like to pretend.

R – Developmental randomness (“true noise”)

Finally, there’s the pure noise term:

Slightly different micro‑timing of cell divisions.

Which exact neurons die or survive during pruning.

Random infections, fevers, minor injuries.

Chaotic dynamics inside a massively complex brain.

Even with identical G₁ and extremely similar G₂/E, monozygotic twins aren’t literally identical in IQ or temperament. That residual isn’t “secret social factors”; it’s physical stochasticity.

Put in variance form

Var(IQ) ≈ Var(G₁) + Var(G₂) + Var(E) + Var(R) + (overlap terms)

G₂ is the beaver dam: “environment,” yes, but environment built by heritable traits. Relabel that as “non-genetic,” and you can manufacture any denominator you want.

Now the critical move that the anti‑hereditarian people never say out loud:

If you define “genetic” = G₁ + G₂ (the whole genetic blob – every influence whose causal chain starts in DNA), genes are massive.

If you define “genetic” = G₁ only, shove G₂ into “environment”, and quietly roll R into “environment” too, genes look small.

Everything downstream in the debate is people playing denominator games on that choice.
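The denominator game is easy to see with numbers. A minimal sketch, assuming made-up variance shares for G₁, G₂, E and R that sum to 1 and ignoring the overlap (covariance) terms. The values are hypothetical placeholders chosen for illustration, not estimates, and the function name is my own.

```python
# Hypothetical variance shares; covariance ("overlap") terms are ignored.
# These numbers are placeholders for illustration, not estimates for any trait.
components = {"G1": 0.45, "G2": 0.25, "E": 0.15, "R": 0.15}


def genetic_share(shares: dict, counted_as_genetic: list) -> float:
    """Fraction of total variance assigned to 'genetic' under a given labeling."""
    total = sum(shares.values())
    return sum(shares[name] for name in counted_as_genetic) / total


broad = genetic_share(components, ["G1", "G2"])  # G2 counted as genetic
narrow = genetic_share(components, ["G1"])       # G2 relabeled as environment

print(f"G1 + G2 counted as genetic: {broad:.0%}")
print(f"G1 only counted as genetic: {narrow:.0%}")
```

Nothing about the simulated world changes between the two lines of output. Only the labeling of G₂ does.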

You can take the same world and describe it in two ways:

If you define “genes” as G₁ only, you’ll say: “Your genes explain only a slice; the rest is environment and noise.”

If you define “genetic” in the normal-human sense — G₁ plus gene-shaped environments (G₂) — you’ll say: “Most stable differences are genome-rooted once obvious deprivation is removed.”

Both can be algebraically true. Only one matches how normal humans use the word “genetic”.

And notice where all the “unfolding” lives:

Brain morphology, wiring, plasticity rules: G₁.

A mother’s uterine biology, metabolism, placental function: her G₁, shows up as your G₂.

The fact that humans as a species have certain developmental attractors and learning trajectories: species‑level G₁.

Once you see that, a huge chunk of what gets sold as “non‑genetic environmental factors” is just the extended phenotype of genomes bouncing off each other and the world.

Everything after this is basically one long attempt to hide that blob.

Part I: The Technical Shell Game (Data & Methods)

Tactic 1: Treat early GWAS as a ceiling, not a floor

GWAS h² and PGS R² were floors, not ceilings.

Early GWAS didn’t “fail to find the genes.” It was never in position to find most of them.

SNP GWAS is a tagging technology. So treating early GWAS as a ceiling on “how genetic” a trait is was always either ignorance or opportunism (or both).

It also misses or poorly captures exactly the stuff that matters in real genomes:

Rare variants, structural variants, repeats, copy-number changes, nonlinear/interaction structure, and unfolding morphology: in other words, most of the interesting biology.

Every geneticist knew SNP‑based h² was a lower bound on additive heritability.

But the anti‑hereditarian rhetorical move was:

Twins and pedigrees say: trait X is 50–80% heritable. (R)

GWAS finds SNP h² of ~10–20% for IQ/EA. (R)

Therefore: twin studies must be inflated; the “real” genetic effect is ~10–20%, the rest is environment.

Then Whole‑Genome Sequencing surfaces: Wainschtein et al. show WGS recovers ~88% of pedigree narrow‑sense heritability across traits on average, and essentially all of it for several traits. (R)

The honest update would be:

“Right, twin/pedigree methods weren’t wildly wrong; early GWAS were incomplete.”

The shell‑game version quietly drops the old argument and moves on to new ones without ever saying “we were using SNP h² as a ceiling when it was always a floor.”

Tactic 2: The “GWAS is good enough” freeze

This tactic involves stopping the tape at the exact moment genes still look small.

Once GWAS and polygenic scores got big (hundreds of thousands to millions of people), a softer but convenient line appeared:

“We already have huge GWAS. Polygenic indices for education explain ~12–16% of variance. That shows genes are only a modest piece and most of it is environment. No need for exotic, expensive tech.” (R)

The implied story:

“The marginal return from WGS, deeper multiomics, or better phenotyping is tiny.”

“We already more or less know how big genetics is for these traits.”

Reality:

WGS clearly changed the picture for height and other traits, showing rare variants and improved modeling can recover a big chunk of previously missing heritability.

Integrative multiomics and within‑family designs are explicitly designed to separate direct (G₁) and indirect (G₂) effects and cleanly estimate causal parameters. (R)

“GWAS is enough” becomes a way to freeze the evidence at the point where genes still look relatively modest, and preemptively dismiss the more powerful tools (WGS, within‑family GWAS, deep phenotyping) that are likely to push estimates toward the hereditarian side.

If you want the most accurate estimate, you don’t stop at GWAS; you go to WGS + better phenotypes + within-family designs and watch the “environment did it” story shrink.

Tactic 3: Redefine “genetic” to G₁ only (direct h² or nothing)

Once WGS closes the missing heritability gap, the next move is to redefine the question:

“Twin and pedigree estimates aren’t wrong, they’re just not the question we care about. We only care about direct genetic effects, not indirect ones.”

So classic heritability (H² or narrow h²) effectively counts G₁ + a lot of G₂ as “genetic.”

New “direct h²” definitions explicitly try to strip out G₂:

Within‑sibship GWAS shrink effect sizes for traits like educational attainment and cognition compared to population GWAS, because they remove stratification and family‑level indirect effects. (R)

Trio‑based and related methods (e.g., using non‑transmitted alleles) separate transmitted (direct) from non‑transmitted (indirect, “nurture”) effects. (R)

Scientifically, that’s fine — if you’re explicit about what you’re estimating.

“Direct h²” is a causal estimand: it deliberately strips out G₂ (genetic nurture / social genetic effects) and cleans up some confounding.

The shell game is what happens next:

You take that narrow estimand and market it as the answer to: How genetic is this trait? … “Direct h² is only ~10–30%” — with no mention that G₂ didn’t vanish. It got re-labeled as “environment.”

To a normal person asking: “How genetic is IQ?”… the answer they care about is the full genome-rooted blob: G₁ plus the gene-shaped environments (G₂) that can’t simply be copied across the population.

“Direct-only” heritability is useful for a specific causal question. It is not a morally privileged definition of “genetic.”

Tactic 4: Over‑adjustment: control away the paths genes actually use

Another clever trick is to regress away the very variables through which genes exert their effects, then point at the smaller residual as proof that genetics is minor.

Examples:

Genes → brain structure → IQ: Regress IQ on genotype and detailed brain imaging measures. The regression coefficient on genotype shrinks, because you’ve controlled a major mediator. You then say: “Genes add little beyond the brain.”

Genes → parental education/SES → child IQ: Regress child IQ on child genotype plus parental education, income, and school quality. The coefficient on the child’s genotype shrinks. You then say: “Most of IQ is environment.”

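But in both cases, you’ve blocked by design a pathway that genes actually use: a mediator inside G₁ (brain structure) in the first example, and the G₂ route through the family environment in the second.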

That’s okay if you’re explicit (“we’re estimating the effect of genes not mediated by X”), but it’s deception‑adjacent if you then imply that the smaller, over‑adjusted coefficient measures the total genetic impact.

It’s like measuring the effect of smoking on lung cancer after adjusting for lung damage and tumor burden, then concluding tobacco doesn’t matter much.
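The shrinking coefficient is a standard mediation result and shows up in a few lines of simulation. A minimal sketch, assuming a simple linear chain G → M → Y with a small direct path and no confounding. The path weights (0.8, 0.5, 0.1) and the helper `ols_coefs` are hypothetical choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 100_000

# Simulated chain: g affects y mostly through the mediator m (weights are made up).
g = rng.normal(size=n)                      # standardized genotype-like predictor
m = 0.8 * g + rng.normal(size=n)            # mediator caused by g
y = 0.1 * g + 0.5 * m + rng.normal(size=n)  # small direct path, larger mediated path


def ols_coefs(outcome, *predictors):
    """Least-squares slopes for the predictors (an intercept is fitted and dropped)."""
    X = np.column_stack([np.ones(len(outcome)), *predictors])
    beta, *_ = np.linalg.lstsq(X, outcome, rcond=None)
    return beta[1:]


total_effect = ols_coefs(y, g)[0]        # total effect: direct plus mediated path
adjusted_effect = ols_coefs(y, g, m)[0]  # mediator controlled: direct path only

print(f"coefficient on g, unadjusted: {total_effect:.2f}")
print(f"coefficient on g, adjusted for m: {adjusted_effect:.2f}")
```

By construction the total effect is 0.1 + 0.8 × 0.5 = 0.5, while the adjusted coefficient recovers only the 0.1 direct path. Both numbers are correct answers, but to different questions.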

Tactic 5: Outcome laundering (pick the softest possible phenotype)

Another way to make genes look small is to rig the outcome variable.

Instead of asking:

“How much of stable adult cognitive ability is genetically rooted?”

You ask:

“How much of a one‑off child IQ score or ‘years of schooling’ is explained by genes?”

If you pick outcome metrics that are noisy, unstable, or defined on a moving scale, you automatically:

Pump up “environment” and “noise”

Bury a lot of G₁+G₂

And get to say: “Look how much we changed things. Environment is huge.”

That’s outcome laundering.

Child IQ: half‑baked g (general intelligence)

One common move is to work with single child IQ tests instead of adult IQ or long‑run outcomes.

Early IQ scores are:

more volatile,

more sensitive to short‑term shocks (school, home chaos, health, sleep),

and show lower heritability because measurement error + transient environment eat into the signal.
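The measurement-error part of that claim follows from classical test theory: noise inflates the observed variance while the genetic variance stays put. A minimal sketch, assuming the error is independent of genotype, so that observed h² equals true h² times test reliability. The example values (0.7, 0.9, 0.6) are hypothetical.

```python
def observed_heritability(true_h2: float, reliability: float) -> float:
    """Heritability of a noisy measurement under classical test theory.

    Assumes measurement error is independent of genotype, so the genetic
    variance is unchanged while total observed variance grows by 1/reliability.
    """
    if not 0.0 <= true_h2 <= 1.0:
        raise ValueError("true_h2 must be between 0 and 1")
    if not 0.0 < reliability <= 1.0:
        raise ValueError("reliability must be in (0, 1]")
    return true_h2 * reliability


# Hypothetical values: the same underlying trait measured with two different tests.
for label, reliability in [("repeated adult testing", 0.9), ("single childhood snapshot", 0.6)]:
    print(f"{label}: observed h2 = {observed_heritability(0.7, reliability):.2f}")
```

The underlying trait is identical in both rows. Only the quality of the measurement differs.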

It’s a half‑baked phenotype. The rank order is still settling.

If you care about how much stable ability is genetically rooted, you look at adult IQ, repeated measures, and long‑run achievements.

If you want “environment” to look big, you instead:

Take one child IQ snapshot

Add heavy SES controls (parental education, income, school quality), which soak up a lot of gene‑driven variance (G₂)

Then point at the tiny residual genetic coefficient and say, “See? Most of IQ is environment!”

Nothing about G₁+G₂ changed. You just chose a noisy outcome and covariates that re‑label genetic effects as “environment”.
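
A rough sketch of the measurement‑noise part of this, under loudly stated assumptions: a hypothetical `genetic_score` accounts for 60% of a stable trait, a single test adds noise as large as the trait itself, and averaging five tests shrinks that noise. None of these numbers are estimates for real IQ data. The sketch only shows that the same underlying trait looks "less genetic" when measured with one noisy snapshot.

```python
import random

def r_squared(xs, ys):
    """Squared Pearson correlation between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)

def simulate_noisy_outcome(n=20000, true_share=0.6, noise_sd=1.0, repeats=5, seed=1):
    rng = random.Random(seed)
    genetic_score = [rng.gauss(0, 1) for _ in range(n)]
    # Stable trait with variance 1; true_share of that variance tracks the score.
    stable = [
        true_share ** 0.5 * gs + (1 - true_share) ** 0.5 * rng.gauss(0, 1)
        for gs in genetic_score
    ]
    # One noisy snapshot of the trait.
    one_test = [s + rng.gauss(0, noise_sd) for s in stable]
    # Average of several snapshots: the noise partly cancels.
    averaged = [
        s + sum(rng.gauss(0, noise_sd) for _ in range(repeats)) / repeats
        for s in stable
    ]
    return (
        r_squared(genetic_score, stable),
        r_squared(genetic_score, one_test),
        r_squared(genetic_score, averaged),
    )

share_stable, share_one_test, share_averaged = simulate_noisy_outcome()
print(f"stable trait:       {share_stable:.2f}")    # close to 0.60
print(f"single noisy test:  {share_one_test:.2f}")  # close to 0.60 / 2.0 = 0.30
print(f"average of 5 tests: {share_averaged:.2f}")  # close to 0.60 / 1.2 = 0.50
```

The underlying 60% never moved. Only the choice of outcome did.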

Education and credentials on a moving scale

“Years of schooling” or “has a college degree” look clean, but:

schooling and college attendance have exploded

universities shifted from elite selection to mass credentialing

grades and standards drifted (inflation)

A “college degree” in 1960 and one in 2025 are not the same trait.

Historically, credentials tracked something closer to selective academic ability because access was tighter and standards were harsher.

In the modern mass-credential era, the label says far less about someone’s IQ/cognition, so it is less useful when asking: “how genetic is underlying cognitive ability?”

Treat that label as if it were a fixed, objective measure of “educational success” and of course policy and environment look overwhelmingly powerful: you baked policy and standards into the metric.

Within any given sample, though, who ends up in which track and who actually masters complex material still tracks G₁+G₂ heavily once you strip away outright deprivation.

How it plugs into the shell game

Outcome laundering dovetails with everything around it:

Over‑adjustment (previous tactic) helps you scrub away the paths genes actually use.

Denominator games (next tactic) let you quote the fraction that flatters “environment”.

You never have to say “genes don’t matter.” You just pick weak targets as outcomes, and let the metrics do the talking.

Tactic 6: Denominator games: choose the fraction that flatters your story

People hear “genes vs environment” like it’s one clean split. It isn’t. It’s a bookkeeping choice.

Start with the decomposition:

G₁ = your genome + the DNA→brain build process (“within-skin blob”)

G₂ = gene-shaped environment (genetic nurture, social genetic effects, self-selected/evoked environments)

E = truly exogenous environment (toxins, shocks, policy, random teacher draw, etc.)

R = randomness + measurement limits (stochastic development, noise you can’t fully get rid of)

With G₁ + G₂ + E + R = 1 (100% of variance).

Now watch how you can generate multiple technically true but rhetorically incompatible statements by swapping denominators:

Headline #1 (minimize genes): “Your genes only explain G₁; the rest is environment and noise.”

Headline #2 (plain-English genetic): “Genetically rooted factors explain G₁+G₂.”

Headline #3 (policy reality): “After you remove obvious deprivation, a lot of what’s left isn’t copy-paste environment — it’s genome-rooted structure and randomness.”

A concrete toy example (illustrative only)

(These numbers are not “the estimate for IQ.” They’re a toy to show how the rhetoric works.)

Say the world looks like:

G₁ = 0.25

G₂ = 0.35

E = 0.20

R = 0.20

Then all of the following are “true”:

“Your own genes explain 25%; the other 75% is environment and noise.” (G₁ / total)

“Genetically rooted factors explain 60%.” ((G₁+G₂) / total)

“Only 20% is truly exogenous environment you can plausibly copy-paste at scale.” (E / total)

Swap the numbers and the trick still works. The scam is the denominator, not the exact percentages.

Same world. Three totally different stories.
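
The bookkeeping above fits in a few lines. This sketch just re‑does the toy arithmetic with the same illustrative shares (again: not estimates for IQ), plus the "environment" figure you get when G₂ and R are silently folded into it.

```python
# Toy variance shares from the example above, in percent (illustrative only).
shares = {"G1": 25, "G2": 35, "E": 20, "R": 20}
assert sum(shares.values()) == 100  # the four buckets must cover all variance

headlines = {
    "own genes only (G1)": shares["G1"],
    "genetically rooted (G1 + G2)": shares["G1"] + shares["G2"],
    "truly exogenous environment (E)": shares["E"],
    "'environment' as usually quoted (G2 + E + R)": shares["G2"] + shares["E"] + shares["R"],
}

for label, pct in headlines.items():
    print(f"{label}: {pct}%")
# own genes only (G1): 25%
# genetically rooted (G1 + G2): 60%
# truly exogenous environment (E): 20%
# 'environment' as usually quoted (G2 + E + R): 75%
```

Change the four inputs to anything that sums to 100 and the same four headlines fall out. Only the labels attached to the buckets differ.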

The anti-hereditarian rhetorical style reliably does this:

Always quote G₁ / total

Never quote (G₁+G₂) / total

Quietly lump R into “environment” whenever convenient

So the public hears “genes: 25%; environment: 75%” and never gets told what environment secretly includes.

And in modern rich countries, the truly copy-paste part of “environment” (E) is often the smallest slice. A huge chunk of what gets counted as “environment” is either G₂ (gene-shaped) or R (noise/limits) — not some giant lever you can fund into existence.

That’s the denominator game.

Tactic 7: The “Chopsticks Gene” (Universal Stratification)

This is the nuclear option for dismissing any GWAS you don’t like:

“All those SNP hits are just population stratification. They’re not really genetic effects, they’re just picking up ancestry and culture.”

There’s a real technical issue here. Then there’s the misleading tactic.

The real issue: population stratification.

In actual statistical genetics, people worry about population stratification or geographic structure:

When you mix ancestry groups in one GWAS, allele frequencies and outcomes can both vary by ancestry.

You can get SNPs that tag “which population/package you’re from”, not SNPs that causally move the trait within a population.

Toy examples you see in talks and papers:

“Chopsticks gene”: Run a GWAS of “regular chopstick use” in a mixed European + East Asian sample. You’ll find SNPs that tag East Asian ancestry. Those variants predict chopsticks because they predict which cultural‑linguistic environment you grew up in, not because flipping the allele flips your utensil preference in Tokyo vs Osaka.

“Drives on the left”: GWAS of “uses left side of the road” in a UK + US sample. You rediscover “UK ancestry.” The SNPs don’t cause left‑side driving; British traffic law does.

“Speaks Japanese”: GWAS of “native Japanese speaker” across Japanese and non‑Japanese. Again, you just found “Japanese ancestry.”

All of these share the same causal pattern:

Genotype → ancestry / region → language / institutions / norms → trait

No serious person thinks the individual SNPs are direct levers for “drives on the left” or “speaks Japanese.” They’re markers of which gene–culture–institution bundle you came from.

That’s the legitimate stratification story.
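
Here is a small sketch of that stratification pattern, with made‑up inputs: two hypothetical populations, "A" and "B", that differ in the frequency of one allele (0.8 vs 0.2) and in how common a purely cultural trait is (95% vs 5%). Inside each population the trait is drawn independently of genotype, so by construction the allele has no causal effect.

```python
import random

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

def simulate_chopsticks(n_per_pop=20000, seed=2):
    rng = random.Random(seed)
    # (allele frequency, probability of the cultural trait); hypothetical numbers.
    pops = {"A": (0.8, 0.95), "B": (0.2, 0.05)}
    data = {}
    for name, (freq, p_trait) in pops.items():
        # Genotype = number of copies of the allele (0, 1, or 2).
        geno = [(rng.random() < freq) + (rng.random() < freq) for _ in range(n_per_pop)]
        # Trait is drawn without looking at genotype: zero causal effect.
        trait = [1 if rng.random() < p_trait else 0 for _ in range(n_per_pop)]
        data[name] = (geno, trait)
    pooled_geno = data["A"][0] + data["B"][0]
    pooled_trait = data["A"][1] + data["B"][1]
    return pearson(pooled_geno, pooled_trait), pearson(*data["A"]), pearson(*data["B"])

pooled_r, within_a, within_b = simulate_chopsticks()
print(f"pooled sample: r = {pooled_r:.2f}")   # large: the allele tags the population
print(f"within A:      r = {within_a:.2f}")   # near zero
print(f"within B:      r = {within_b:.2f}")   # near zero
```

The pooled "hit" is real as a prediction and empty as a causal knob, which is exactly the chopsticks story.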

My view: culture is downstream of genes.

The only reason there is a “Japan” with Japanese language, chopsticks, and particular institutions is that some population with a particular genome, in a particular ecology, generated those norms and then reproduced/maintained them.

Over time: Aggregate genomes → typical psych traits / behaviors → culture & institutions → environment for the next generation.

So at the long‑run group level, “chopsticks,” “left‑side driving,” and “Japanese language” are absolutely part of the extended phenotype of those populations.

But that’s not what a sloppy cross‑ancestry GWAS is measuring.

A badly controlled “chopsticks GWAS” is only telling you:

“This SNP tags membership in a population that happens to have chopstick culture.”

It’s identifying which package you came from, not giving you a causal knob that moves chopstick usage inside that package. It tells you almost nothing about within‑population genetic variance for that trait.

Tactic: everything becomes chopsticks

The upgrade from real caveat to rhetorical weapon is:

Take these toy examples (chopsticks, drives‑on‑left, speaks‑Japanese).

Use them to teach that GWAS can be confounded by ancestry.

Then quietly act like every inconvenient GWAS on IQ, education, personality, etc. is “basically chopsticks.”

So you get lines like:

“Those IQ SNPs are just tagging rich vs poor, or majority vs minority, or whatever. It’s all uncorrected structure.”

This ignores a few awkward realities:

Modern GWAS don’t just throw Europeans, East Asians, Africans into one pot and hope. They routinely include ancestry principal components, relatedness matrices, and other corrections that wipe out most broad‑scale stratification.

Within‑family GWAS (sibling‑difference designs) automatically remove between‑family ancestry and SES confounding. Siblings share parents, ancestry, neighborhood. Whatever SNP signal survives there is not “you’re slightly more British than your brother.”

Trio / non‑transmitted allele designs explicitly separate direct genetic effects (what you inherited) from indirect “genetic nurture” effects (what your parents’ genomes do to your environment).

In other words: the very designs that would kill a true “chopsticks” artifact for IQ/EA already exist and still show non‑trivial genetic effects.
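
A minimal sketch of why the sibling‑difference design does this, under assumed toy parameters: two hypothetical strata with different allele frequencies (0.3 vs 0.7) and different family environments, plus a true direct effect of 0.2 per allele. None of these values come from real data. The population‑level slope absorbs the family‑level confound, while the slope on sibling differences does not, because siblings share their family.

```python
import random

def slope(xs, ys):
    """OLS slope of ys on xs (with intercept)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def simulate_sibling_design(n_families=20000, direct_effect=0.2, seed=3):
    rng = random.Random(seed)
    genos, outcomes, geno_diffs, outcome_diffs = [], [], [], []
    for _ in range(n_families):
        # Hypothetical stratum: shifts allele frequency AND family environment.
        high_stratum = rng.random() < 0.5
        freq = 0.7 if high_stratum else 0.3
        family_env = 1.0 if high_stratum else 0.0
        # Each parent carries two alleles drawn at the stratum's frequency.
        mother = [rng.random() < freq, rng.random() < freq]
        father = [rng.random() < freq, rng.random() < freq]
        sibs = []
        for _ in range(2):
            # Mendelian transmission: one random allele from each parent.
            genotype = rng.choice(mother) + rng.choice(father)
            outcome = direct_effect * genotype + family_env + rng.gauss(0, 0.5)
            sibs.append((genotype, outcome))
            genos.append(genotype)
            outcomes.append(outcome)
        geno_diffs.append(sibs[0][0] - sibs[1][0])
        outcome_diffs.append(sibs[0][1] - sibs[1][1])
    return slope(genos, outcomes), slope(geno_diffs, outcome_diffs)

population_slope, within_family_slope = simulate_sibling_design()
print(f"population slope:    {population_slope:.2f}")    # inflated by stratification
print(f"within-family slope: {within_family_slope:.2f}") # close to the direct effect, 0.2
```

If a signal were pure "chopsticks", the within‑family slope here would sit at zero. In this toy it lands on the direct effect instead.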

To keep the “it’s all stratification” line alive, you basically have to:

Pretend those methods don’t exist, or

Dismiss them as “underpowered / flawed” whenever they give answers that are too hereditarian for your taste.

What this tactic really does

So the move is:

Take a narrow, valid warning (“cross‑ancestry GWAS of pure culture/legal traits finds ancestry markers, not causal SNPs”).

Inflate it into a universal veto on any uncomfortable behavioral GWAS result.

Hand‑wave away the very designs (within‑family, WGS + careful modeling) built to address the real problem.

You end up in a world where:

Any genetic result you like = “high‑tech proof”.

Any result you don’t like = “chopsticks gene, obviously confounded.”

Yes, cultures ride on genes. That’s the big picture. The “Chopsticks Gene” tactic is about using that deep truth to ignore specific, well‑designed evidence about within‑population genetic variance in traits like IQ and educational attainment.

Tactic 8: “God of the Gaps” for environment (Residual Optimism)

We hear a lot about missing heritability. Much less about missing environment.

Reality:

Twin/family/WGS + modern modeling together explain a big chunk of variance for many traits. (R)

Measured environmental variables – income, school quality, classic parenting questionnaires – usually explain quite modest additional variance, especially for adult IQ and educational attainment, once you control for genetic factors. (Often single-digit percentages.)

The honest decomposition is:

Unexplained variance = Environment + Randomness (R) + Measurement error

The shell game defines:

Unexplained variance = “Systematic environment we just haven’t discovered yet.”

So any part of variance not currently attributed to genes is automatically placed in a giant implicit “Hidden Environment” bucket:

Randomness (R) disappears.

Limits on what environment can do disappear.

The belief that there must be a huge, as‑yet‑unknown environmental lever we can pull stays intact.

That’s the God‑of‑the‑Gaps for nurture: everything we don’t understand is assumed to be a big, untapped environmental knob rather than some mix of randomness, complexity, and diminishing returns.

Part II: The Conceptual Firewall (Theory & Rhetoric)

Now we move from data tactics to conceptual firewalls: ideas that are partly true, but deployed in ways that block heritability from influencing the narrative.

Tactic 9: The G×E Fog (“Interactions make heritability meaningless”)

When you can’t deny G₁ and G₂ exist, you can still say:

“Heritability models are too simple. Genes and environments interact so wildly that any single h² number is basically a lie.”

The "holy grail" for this tactic is the Scarr-Rowe hypothesis.

A 2003 study (Turkheimer et al.) suggested that in the poorest families genes explained close to 0% of IQ variance (with shared environment explaining about 60%), while in affluent families genes explained about 72%. (R)

Tactic: Woke academics treat this one 20-year-old study as a universal law of nature to suggest that “genes only matter for the privileged.” They use it to argue that for the “disadvantaged,” biology is irrelevant and only “funding” matters. (Another major issue with Turkheimer et al. (2003) is that the sample was 7-year-old twins! Childhood IQ is not a stable measure of adult ability, and using it to reach this conclusion is insanely misleading. We discussed this earlier.)

Reality: Scarr-Rowe is a “Third-World Artifact.” It only shows up in environments of extreme, catastrophic deprivation where the “soil” is so toxic it prevents the “seed” from growing at all.

In modern, large-scale replications (e.g. Figlio et al. 2017), using hundreds of thousands of siblings, the effect disappears. (R)

In a modern welfare state with basic nutrition and public schooling, the environment is “saturated” enough that h² remains high across the board.

The “Fog” has cleared, but the anti-hereditarians are still pretending they can’t see through it.

Why this works rhetorically is because it lets you get away with suggesting:

“Genes only matter for the privileged; for the disadvantaged it’s all environment.”

That is, conveniently, exactly the story many people want to tell for moral and political reasons.

Tactic 10: The Epigenetic Escape Hatch (Lamarckian Hope)

Epigenetics is a credible area of scientific research. But in pop discourse it gets promoted from “mechanism of gene regulation” to “biological backdoor to override DNA.”

The narrative:

“DNA is just a script; epigenetics is the director. Trauma, stress, and poverty rewrite your epigenome — and those changes are passed down generations. What looks like ‘genetic inheritance’ is actually biological scarring from injustice.”

The reality:

In mammals, there are 2 major waves of epigenetic reprogramming where most DNA methylation marks are erased:

After fertilization in the early embryo.

In primordial germ cells before they become sperm/eggs. (R)

This “epigenetic reboot” is a huge barrier to stable transgenerational epigenetic inheritance. It doesn’t make it impossible, but it makes strong, multi‑generation effects hard and rare. (R)

The shell game is to talk as though:

Epigenetics were a soft, Lamarckian inheritance system that easily transmits social trauma for many generations.

And that it is somehow orthogonal to “genetics,” rather than a regulatory layer mostly orchestrated by the genome itself.

This satisfies the demand for biology‑flavored explanations while preserving the moral story that “it’s really environment all the way down.”

Tactic 11: The Flynn Effect Pivot (Means vs Variances)

The Flynn effect is real: meta‑analyses and historical test comparisons find raw IQ test scores rose roughly 2.9–3 points per decade through much of the 20th century in many developed countries. (R)

Also: if Flynn gains are driven by broad improvements (less disease, better nutrition, more schooling, more test familiarity), then as conditions saturate you should expect gains to plateau. That’s not heresy — it’s exactly what the “environment explains Flynn” story predicts once you hit diminishing returns.

And in some rich countries scores have even drifted downward on some batteries/cohorts — which is exactly why “environment” is not a magical always-up lever, and why the mean-shift story doesn’t touch the rank-order/heritable-variance story.

The anti‑hereditarian pivot:

“IQ rose 15 points in 50 years, and genes didn’t change that fast. So environment is obviously vastly more powerful than genes. Just improve environment and everyone becomes a genius.”

The confusion here is between:

Mean changes: how high the average score is.

Variance / ranks: who is higher or lower within the population.

Corn analogy:

Fertilize a field → all stalks get taller (mean shifts up).

Genetic differences still determine which stalks are tallest (rank ordering stays strongly genetic).

So you can have both:

High heritability of who is taller than whom, and

Big environmentally driven changes in how tall everyone is on average.

Same with IQ:

Flynn gains show that environments and living conditions can shift the mean level of performance.

They don’t remotely prove that, within a given cohort and environment, most of the variance isn’t genetically rooted.

The shell game is to shout “Flynn effect!” any time someone mentions heritability of individual differences, as if mean gains logically contradict high h². They don’t.
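
The means‑vs‑variances distinction can be checked with a toy simulation. The assumptions here are deliberately simple and are mine, not a model of real Flynn gains: a hypothetical genetic component carries 70% of score variance, and the environmental improvement is a uniform +15 points for everyone. Real gains need not be uniform; the sketch only shows that a mean shift of this kind leaves the variance decomposition untouched.

```python
import random
import statistics

def r_squared(xs, ys):
    """Squared Pearson correlation between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)

def simulate_mean_shift(n=10000, shift=15.0, genetic_share=0.7, seed=4):
    rng = random.Random(seed)
    sd = 15.0
    # Hypothetical genetic and non-genetic components of a score with SD 15.
    genetic = [rng.gauss(0, sd * genetic_share ** 0.5) for _ in range(n)]
    other = [rng.gauss(0, sd * (1 - genetic_share) ** 0.5) for _ in range(n)]
    before = [100 + gen + oth for gen, oth in zip(genetic, other)]
    # Uniform environmental gain: everyone moves up by the same amount.
    after = [score + shift for score in before]
    return {
        "mean_gain": statistics.fmean(after) - statistics.fmean(before),
        "genetic_share_before": r_squared(genetic, before),
        "genetic_share_after": r_squared(genetic, after),
    }

result = simulate_mean_shift()
for key, value in result.items():
    print(f"{key}: {value:.2f}")
```

The mean moves by the full 15 points while the share of variance tracking the genetic component is the same before and after, up to floating‑point rounding.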

There are a few extra details that mysteriously drop out of the public story.

Flynn gains aren’t pure g, and tests aren’t static

A lot of Flynn gains show up more on certain subtests (abstract, test‑specific skills) than on others.

Test familiarity, schooling, and “test‑wiseness” matter.

Norms get re‑scaled, content drifts, and there’s straightforward score inflation on some widely used tests.

So some chunk of the Flynn effect is “people are radically more cognitively capable than their grandparents,” but some chunk is:

More practice with similar puzzles,

Better schooling in the specific skills these tests tap,

And straight‑up measurement drift.

Treating all of it as “deep latent g going up 15 points” is generous at best.

There’s plateau and reverse Flynn

If “better environment = higher IQ forever” were the whole story, you’d expect IQ scores to just keep climbing as countries get richer, healthier, more educated.

Instead, in many rich countries you see:

Plateaus in test scores,

Stagnation, and even

Reverse Flynn effects (scores drifting down on some batteries and cohorts).

If your entire narrative was “environment is king, and we’re always improving environment,” this is awkward. You can’t simultaneously say:

“Environment explains the huge rise,” and

“Environment has nothing to do with the recent stagnation/decline,”

while treating genes as a rounding error.

Once you admit that macro‑environmental effects can go both directions, the honest takeaway is:

Environment can shift means, especially when you go from bad conditions to decent ones.

After that, gains flatten or even reverse; you run into limits and trade‑offs.

Everyone can gain and still not match (group gaps remain)

The biggest sleight of hand is pretending that:

“Group A and Group B both show Flynn gains” ⇒ “Group A and Group B now have the same means / distributions.”

That’s not how it works.

If 2 groups start at different averages and both gain from better nutrition, education, test familiarity, etc.:

Both lines can move up

Relative gaps can persist

You can easily have:

Group A: +10 points

Group B: +10 points

Same gap as before, just at a higher overall level.

There are plenty of cases where:

Different countries started at very different baselines,

All saw some gains as basic conditions improved,

Yet the ordering of group means did not magically collapse into one flat line.

Even within the same country and broadly similar environments, you can see:

Unselected subgroups all gaining with time,

And still lagging by a substantial margin on the same tests, under the same norms.

Everyone goes up. That does not mean everyone converges.

What the Flynn effect really says

Once you strip out the spin, the sane interpretation is:

Going from bad conditions → decent conditions (less disease, more schooling, less malnutrition, cleaner tests) can push up average test performance a lot.

In already rich, stable countries, we’re probably near the ceiling of what “environment” can do for IQ. That’s why gains slow, plateau, or reverse.

Within those modern conditions, most of the stable differences in who is where in the distribution are still strongly genetically anchored (G₁+G₂), with some exogenous environment and randomness on top.

So the Flynn effect is not a magic “gotcha” for heritability. It’s mostly:

“We made conditions much better for almost everyone, so raw scores rose.

The gaps and rank ordering didn’t just evaporate.”

Using Flynn to argue “genes don’t matter; it’s all environment” is like pointing at fertilizer making the cornfield taller and declaring:

“Therefore genetics can’t explain why some stalks are still taller than others.”

This is why third-generation unselected immigrants to developed countries (e.g. US, Canada, UK, EU) fail to bridge the IQ/behavioral gap with the native population.

Tactic 12: The Populations Firewall (Lewontin’s Plant Analogy)

Here’s the hard firewall that gets trotted out whenever someone even hints at genetic explanations for group differences.

The statistical core is correct:

Heritability is a within‑population statistic.

You can have h² = 1.0 in group A and h² = 1.0 in group B, and the mean difference between A and B could still be 100% environmental, 0% genetic.

Lewontin’s famous plant analogy:

Two pots: rich soil vs toxic soil.

Same bag of seeds, randomly assigned to each pot.

Within each pot, all height differences are 100% genetic, because soil is uniform.

The average difference between pots is 100% environmental (soil).

That logic is fine.
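
For anyone who wants to see the flowerpot logic run, here is a sketch with assumed numbers: seeds drawn from one bag (genetic height potential with SD 5), randomly split between two pots, with a hypothetical 20‑unit penalty for toxic soil. Soil is perfectly uniform within each pot, which is the analogy's own idealization.

```python
import random
import statistics

def r_squared(xs, ys):
    """Squared Pearson correlation between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)

def simulate_pots(n_seeds=10000, soil_gap=20.0, seed=5):
    rng = random.Random(seed)
    # One bag of seeds: each value is a seed's genetic height potential.
    seeds = [rng.gauss(0, 5) for _ in range(n_seeds)]
    rng.shuffle(seeds)  # random assignment to pots
    half = n_seeds // 2
    rich_genes, toxic_genes = seeds[:half], seeds[half:]
    # Uniform soil within each pot, so height = baseline + genetic potential.
    rich = [100 + g for g in rich_genes]
    toxic = [100 - soil_gap + g for g in toxic_genes]
    return {
        "h2_within_rich": r_squared(rich_genes, rich),
        "h2_within_toxic": r_squared(toxic_genes, toxic),
        "height_gap": statistics.fmean(rich) - statistics.fmean(toxic),
        "genetic_gap": statistics.fmean(rich_genes) - statistics.fmean(toxic_genes),
    }

pots = simulate_pots()
for key, value in pots.items():
    print(f"{key}: {value:.2f}")
```

Within‑pot heritability comes out at 1.0 in both pots, the height gap is close to the soil gap of 20, and the genetic gap between pots is close to zero. Note what made that last number zero: random assignment from one bag, which is built into the setup rather than discovered by it.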

The shell‑game tactical upgrade:

Treat human populations as if they are literally those flowerpots, with totally distinct, non‑overlapping “soil.”

Declare that the default assumption about any mean group difference must be “100% environment,” until proven otherwise by impossible experiments (e.g., raising thousands of infants in controlled conditions).

Use the within/between distinction to say: “Heritability tells you nothing about group differences.” Which gets heard as: “Any talk of genetics and group differences is inherently unscientific and suspect.”

Notice the asymmetry:

If you suggest genetics might contribute some share to a group difference, you’re told “heritability is irrelevant; within‑group heritability says nothing about between‑group gaps.”

If someone asserts it’s entirely environmental, they’re almost never held to the same evidentiary standard.

The firewall becomes a one‑way gate: the within/between distinction gets invoked against mixed explanations, but never against environment‑only ones.

(Needless to say, nothing here justifies bigotry or fatalism; but using Lewontin’s valid point as a blanket veto on any genetic discussion is still a shell game.)

Tactic 13: Measurement Denialism (“IQ isn’t real, so its heritability doesn’t matter”)

When the genetic data on IQ become too robust to ignore, the final fallback is to attack the trait itself:

“IQ is just a measure of how well you take IQ tests.”

“IQ is a Western socio-cultural construct with no biological reality.”

“There are many intelligences; IQ only measures a narrow, culturally specific one.”

But in aggregate, IQ‑like g measures:

Correlate with basic processing speed (simple reaction time and inspection time); meta‑analyses find meaningful negative correlations (lower reaction time ⇄ higher IQ), often around r ≈ −0.3 uncorrected, stronger after corrections. (R) You cannot “bias” a stopwatch measuring how many milliseconds it takes someone to respond to a light or a tone.

Are associated with brain volume and structure; multiple meta‑analyses find r ≈ 0.2–0.3 between total brain volume and general intelligence in healthy adults. (R)

Predict job performance and training success strongly; classic and updated meta‑analyses put general mental ability’s predictive validity around r ~ 0.4–0.6 for many medium‑to‑complex jobs and for training outcomes. (R)

You don’t get that pattern from a meaningless cultural ritual.

The shell game is to:

Ignore the biological and predictive validity literature.

Assert that IQ is too “constructed” or too culturally loaded to count as a real trait.

If the trait is fake, its heritability can be safely disregarded, and any genetic results tied to it can be dismissed as morally suspect by definition.

Tactic 14: The Immutable Strawman (Determinism Bait‑and‑Switch)

This one is pure rhetoric. It confuses: (A) What explains variance right now with (B) What could change under a new intervention.

The move:

“If you say IQ is 50–80% genetic, you’re saying we can’t improve education, can’t help the poor, and should give up on social policy. You’re a genetic determinist.”

Reality:

Myopia is highly heritable, yet glasses work perfectly.

Phenylketonuria (PKU) is fully genetic — a single gene disorder — yet a dietary intervention prevents cognitive damage.

Heritability is always defined relative to current environments; it says nothing on its own about what would happen if you introduced a brand‑new intervention.

What high heritability does mean is:

Within the existing range of environments, most of the variance is coming from genetically rooted factors.

It does not mean that:

The trait is metaphysically fixed.

No intervention can change mean levels or individual outcomes.

The shell game is to deliberately conflate “heritable” with “unchangeable” so that anyone who acknowledges strong genetic influence can be safely cast as heartless and anti‑hope, while the anti‑hereditarian side gets to role‑play the guardians of possibility.

Genetic blackpill: Genetic influence may imply real deterministic limits. Acknowledging these limits is not a bad thing… it’s just being honest. Reality doesn’t care how we feel about it. If a trait is 95% fixed by DNA, yelling about how “that’s determinism” or whatever isn’t going to change anything!

The only thing people accomplish by banning “bleak” descriptions is to make models dumber and policies less effective.

If the world has hard limits, they exist whether we find them depressing or not.

Blank Slate is arguably the cruelest position: It implies that a person with an 85 IQ failed because they didn't try hard enough, or because society oppressed them.

Acknowledging limits is reality-based compassion. It allows us to build a society for the humans we actually have, rather than the ones we wish we could manufacture.

Part III: Why these tactics are so attractive (5 motives)

None of this requires a secret conspiracy. You just need strong priors, social incentives, and human psychology. Here are 5 reasons the shell game is so sticky.

Motive #1: Status‑quo egalitarian storytelling

A simple, appealing moral narrative:

“Differences in outcomes are mostly caused by unjust environments (class, racism, sexism, etc.). Fix the environment and you fix the gaps.”

Strongly genetically rooted variance in traits like IQ and conscientiousness doesn’t refute egalitarian ethics, but it complicates the story:

Even in a fair system, some differences in outcomes will track differences in abilities and temperaments that are partly inherited.

There are limits to how much you can equalize outcomes by policy alone once you’ve equalized opportunities and basic conditions.

It’s much easier to preserve the clean story if you can keep genes in the background and push G₂ and R into “environment.”

Motive #2: The “Noble Lie” of Plasticity

Some people (often implicitly) adopt a kind of noble‑lie posture:

“Even if genes matter a lot, emphasizing that could:

Fuel prejudice or fatalism.

Undermine support for redistribution and welfare.

Be misused by racists or eugenicists.”

So they lean toward pseudo truths that feel socially safer:

“We don’t really know how genetic IQ is.”

“It’s mostly environment and experience.”

“Epigenetics shows trauma and poverty are the real inheritance.”

You can see this in how often:

Plasticity is oversold relative to the effect sizes of actual interventions.

Genetic findings are framed as things to be “handled carefully” rather than straightforward pieces of the causal structure.

The noble intent (reducing harm) doesn’t change the fact that this is a distortion.

Motive #3: Professional sunk costs and face‑saving

If your career (as is the case for most woke academics/scientists) is built on bullshit like:

Purely socio‑economic explanations of inequality,

Environment‑only models of cognitive or achievement gaps,

Large‑scale interventions promising to “fix” outcomes via school and parenting reforms,

… then strong, stable heritability is a threat.

Admitting that:

G₁+G₂ explains a large chunk of variance, and

Many educational / parenting interventions have modest, temporary effects by adulthood,

… means admitting that some of your theoretical frameworks and favored programs were over‑optimistic.

It’s not shocking that people in that position:

Emphasize every methodological critique of heritability.

Prefer definitions of “genetic” that minimize G₁+G₂.

Default to “we already know enough from GWAS” when new genetic methods come along.

Motive #4: Fear of being labeled a bigot or eugenicist

The history here is front-and-center:

Intelligence testing and genetics were utilized by eugenics movements and racist ideologies in the 20th century.

Contemporary studies of group differences are extremely contentious and politically charged.

So even researchers who privately accept strong genetic influence on IQ/EA may:

Avoid those topics completely.

Use aggressively nurturist framing no matter what the numbers suggest.

Oppose further genetic research on cognitive traits, or treat it as uniquely dangerous.

The simplest way to stay safe in that environment is to support, at least in public, the line that “genes are only a small piece; environment is the real story.”

Heritability, genetics, and related topics are so sensitive that a researcher, academic, or scientist who gets framed incorrectly by the media or the general public can lose their job.

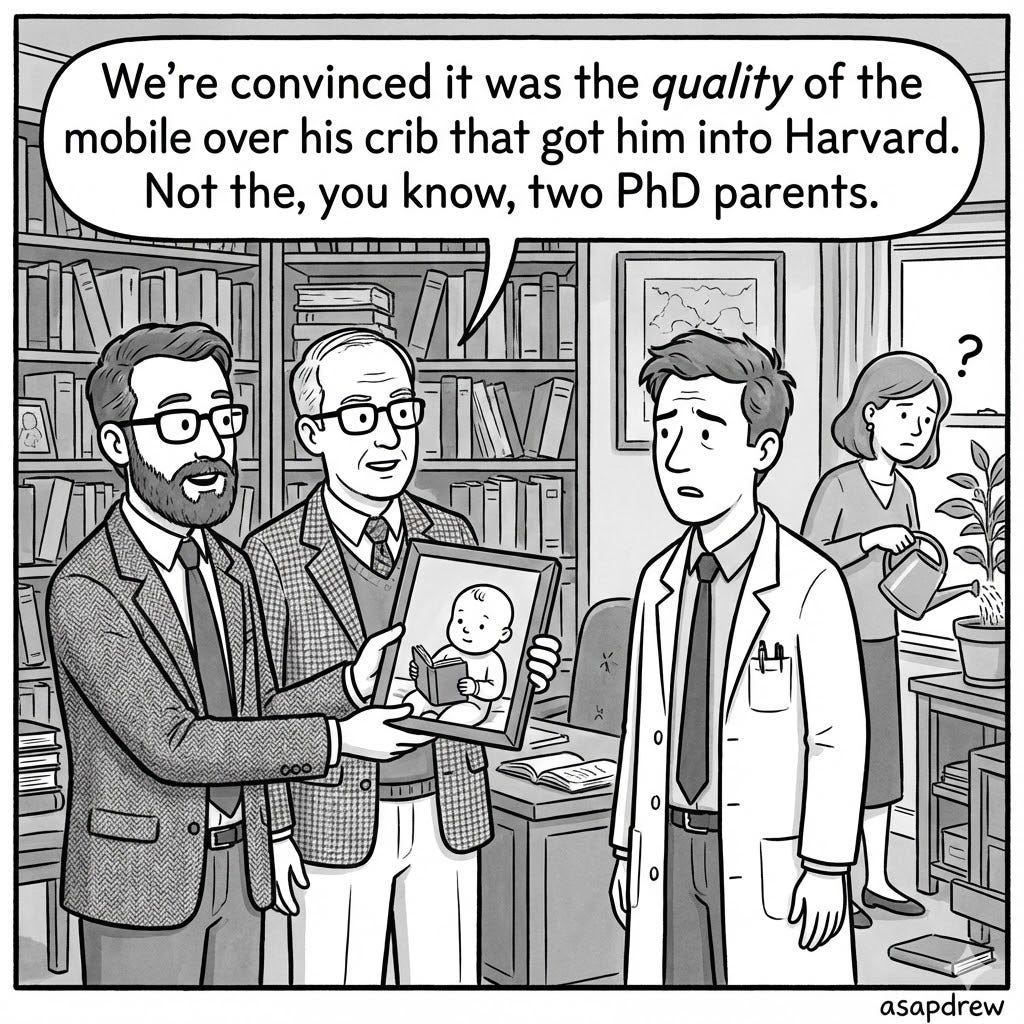

Motive #5: The Super‑Parent Illusion (Parental Agency)

Finally, the psychological motive for ordinary, well‑meaning people:

Modern middle‑class parenting culture is built on a deep belief:

“Inputs = outputs. If I do the right things — the right books, the right toys, the right schools — I sculpt my child’s destiny.”

Strong heritability suggests something less flattering:

Parents are more like shepherds than sculptors. They protect and provide, but much of the child’s eventual cognitive and temperament profile was baked in genetically the moment conception happened.

For many educated, conscientious parents, especially in the same demographic that leans anti‑hereditarian in public debates, that’s emotionally brutal:

It reduces the perceived causal power of their parenting labor.

It reframes their child’s success as heavily dependent on genetic luck, not parental merit.

It makes the grind of endless optimization feel less rational and less special.

The shell game: “It’s mostly environment, and good parenting is hugely powerful” acts as an emotional reassurance that their effort is the main driver and that their children’s outcomes are chiefly the result of what they did, not what they passed down.

Where all this leaves the actual science…

Strip away the tactics and you get something like this:

We now have strong evidence that most of the additive genetic variance family studies detect really is in the genome. WGS recovers ~88% of pedigree narrow‑sense heritability across traits on average; for many traits, WGS ≈ pedigree.

We can cleanly separate direct (G₁) from indirect (G₂) genetic effects using within‑family and genetic‑nurture designs. Non‑transmitted parental alleles affect kids via the environments parents create. (R) Within‑sibship GWAS shows how much of population SNP h² is G₁ vs G₂+stratification, especially for EA and cognition. (R)

IQ‑like traits are not arbitrary social constructs. They correlate with reaction time, brain volume, and job performance in ways that are hard to square with “just a cultural ritual.” (R)

Heritability is high for many traits in rich, stable societies. That doesn’t mean the mean can’t change (Flynn effect), or that interventions can’t help; it means that, within the current environmental range, a lot of variance is genetically rooted.

In an equal‑environment thought experiment (basic E equalized, alien IQ scanner, G₂ not centrally engineered): (1) Most of the remaining systematic differences in IQ would be due to G₁ + G₂ and (2) the residual is genuine exogenous environment and random R — important but smaller.

In that world, the question:

“Are individual IQ differences mostly genetically rooted once you equalize obvious environments and recognize gene‑driven environments as such?” probably has a boring, uncomfortable answer:

Yes, they mostly are.

That does not justify discrimination.

But it does mean:

The classic “we can’t find the genes, so twin heritability must be wrong” argument is dead.

The modern “direct h² is modest, therefore genes don’t matter” line is, at best, a definitional trick unless it’s crystal clear that G₂ hasn’t magically become non‑genetic.

The anti‑heritability shell game is what you get when these realities collide with:

Egalitarian moral instincts

Identity and career investments

Fear of social punishment

Deep personal hopes about parenting and plasticity

You don’t have to be a hereditarian ideologue to see that. You just have to keep track of the buckets — G₁, G₂, E, R — and notice every time someone quietly re‑labels them mid‑conversation.

The Anti-Hereditarian Shell Game isn’t just a scientific disagreement; it’s a malicious misallocation of human capital.

When you pretend outcomes are mostly programmable by broad environmental spending, you build systems that (1) overpromise, (2) underdeliver, and (3) blame people for limits that were never politically negotiable in the first place.

Biology doesn’t take bribes, and reality won’t bend to woke lies.

Once you see the anti-hereditarian shell game, you realize that woke science isn’t trying to find the truth — it’s trying to manage the fallout of the truth.

As better WGS data rolls in, the “Missing Heritability” is found… the blade of genetic heritability is finally visible.