AI Hype: Google's Gemma AI Model with New Cancer Treatment (Expect More Incremental Gains... Maybe Not High Novelty)

Google's Gemma AI with potential new cancer treatment adjunct... an iterative proposal but not "high novelty" or a "major breakthrough"

“Gemma model helped discover a new potential cancer therapy pathway”… was published October 15, 2025 on Google’s blog.

The AI hype monkeys on X reacted: “anyone doubting AI is retarded” (I can get on board with this) and rubbed the headline in the doubters’/skeptics’ faces (am fine with this too).

No denying that this is a favorable newsworthy development… we should expect more incremental advancements popping up randomly given that AI can analyze large datasets and propose connections that are logically sound — that would’ve been too tedious for humans to spend time on (think massive datasets… genomics, biology, pharma, etc.).

The headline notes “potential cancer therapy pathway”… so they’re not trying to overhype it. It was “technically” novel… but it wasn’t high novelty.

But we don’t necessarily need high novelty to advance humanity.

Incremental improvements in any niche have potential to yield large effects and/or compound. Get a bunch of incremental improvements across many domains at a faster rate… and the macro impact is massive.
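A quick back-of-the-envelope sketch of the compounding point. The 5% per-improvement figure and the assumption that gains stack multiplicatively are mine, purely for illustration; real improvements rarely stack this cleanly.

```python
def compounded_gain(per_step_gain: float, steps: int) -> float:
    """Total multiplier after `steps` improvements that stack multiplicatively.

    per_step_gain is a fraction (0.05 means a 5% improvement per step).
    Assumes every step is independent and fully stackable (a big assumption).
    """
    return (1.0 + per_step_gain) ** steps


# Hypothetical: a string of 5% improvements in one pipeline.
for steps in (5, 10, 20):
    print(steps, round(compounded_gain(0.05, steps), 2))
# prints:
# 5 1.28
# 10 1.63
# 20 2.65
```

Twenty unremarkable 5% gains come out to roughly 2.65x under these assumptions. No single step is a "breakthrough," but the total looks like one.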

Why many people think AIs are inventing highly novel stuff…

Scott Aaronson recently discussed how “GPT-5 Thinking” (from OpenAI’s ChatGPT) proposed a key technical step in unlocking a mathematical proof (proved via a quantum-oracle separation and approximation theory).

Could Aaronson et al. have figured this out without ChatGPT? Eventually probably yes. With enough brute force and thinking and research.

But they didn’t. And they didn’t need to. They saved themselves a lot of brain cycles or cognition tax (thinking/effort) by letting GPT-5 Thinking figure shit out.

Was the proposed step highly novel? No. But who cares? It was very useful.

Many extrapolated this news as: AI invented new math and led to a major breakthrough… not really what happened here. Still good. But not some new paradigm shift proposed solely by the AI.

Cognition saver? Yes. Time Saver? Yes. Massive breakthrough? No.

But whatever. The stage is set. Now many have the Aaronson news in their heads and they see a headline from Google about some Gemma AI cancer treatment and the dopamine rush of embellishment continues.

What was the Gemma/DeepMind cancer drug hypothesis? (GPT-5 Explains)

TL;DR: Cool method, early science. What’s new here is not a brand‑new cancer drug—it’s an AI‑generated mechanistic hypothesis about how to use an existing investigational drug (silmitasertib) in a very specific immune context. It was validated in vitro (cells in a dish), not in animals or people. So: methodological significance = moderate to high; clinical significance (today) = low; hype about “AI discovered a cure” = not warranted.

What actually happened?

Google DeepMind + Yale released Cell2Sentence‑Scale 27B (C2S‑Scale), a Gemma‑based model trained on single‑cell data. They asked it to find a “conditional amplifier”: a drug that would boost antigen presentation (MHC‑I) only when there’s a low level of interferon signal—i.e., a way to turn some “cold” tumors “hot.” The model predicted silmitasertib (CX‑4945), a CK2 inhibitor, would increase MHC‑I only under that low‑interferon condition. In lab tests on human neuroendocrine cells (a type it wasn’t trained on), silmitasertib + low‑dose interferon increased antigen presentation by ~50%; either alone did little. This is framed as a new therapy pathway rather than a treatment ready for the clinic.

The underlying writeup is a bioRxiv preprint (not peer‑reviewed yet); Google also open‑sourced the model artifacts.
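To make the "conditional amplifier" idea concrete, here is a toy sketch of the filtering logic: keep drugs predicted to raise MHC‑I when a low interferon signal is present, but not when it is absent. This is not the C2S‑Scale pipeline. The `Prediction` class, the thresholds, the drug names, and every number are hypothetical placeholders, and the 1.5 value only loosely echoes the reported ~50% figure.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    drug: str
    mhc1_low_ifn: float  # predicted MHC-I fold change with low-dose interferon present
    mhc1_no_ifn: float   # predicted MHC-I fold change with no interferon


def conditional_amplifiers(preds, min_boost=1.3, max_neutral=1.1):
    """Return drugs that boost MHC-I only in the low-interferon context.

    min_boost and max_neutral are arbitrary illustrative thresholds.
    Results are sorted by the gap between the two contexts, largest first.
    """
    hits = [
        p for p in preds
        if p.mhc1_low_ifn >= min_boost and p.mhc1_no_ifn <= max_neutral
    ]
    return sorted(hits, key=lambda p: p.mhc1_low_ifn - p.mhc1_no_ifn, reverse=True)


# Made-up predictions for three placeholder drugs.
preds = [
    Prediction("drug_A", 1.5, 1.0),  # boosts only with interferon -> conditional
    Prediction("drug_B", 1.6, 1.5),  # boosts everywhere -> not conditional
    Prediction("drug_C", 1.0, 1.0),  # does nothing
]

print([p.drug for p in conditional_amplifiers(preds)])
# prints: ['drug_A']
```

The hard part in practice is producing trustworthy predictions for both contexts, which is what the model is for. The selection step itself is simple.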

How novel is it?

What’s legitimately new

Context‑conditioned mechanism: The specific claim is that CK2 inhibition (silmitasertib) amplifies MHC‑I only in an interferon‑positive, low‑signal state—a “split” the team says smaller models couldn’t resolve and which they say hasn’t been reported before for silmitasertib. I couldn’t find prior literature explicitly showing silmitasertib → MHC‑I upregulation in a low‑IFN condition; the preprint and Google’s post present this as novel.

What is not new

Using interferons to boost MHC‑I (and thus tumor visibility) is textbook immunology. Many drugs and radiation can increase MHC‑I via interferon pathways.

CK2’s role in immunity/cancer is well‑studied, and CK2 inhibition has been explored as an immunotherapy adjuvant in preclinical work. So the drug class isn’t new; the context‑specific use is the twist.

Silmitasertib itself is an investigational CK2 inhibitor with multiple ongoing/finished early‑phase trials in various cancers; it’s not a new chemical entity.

Bottom line on novelty: Moderate. It’s a new, testable mechanism of action and use‑case for a known drug, not an entirely new therapy or target.

How significant is it?

Today (scientifically)

The in vitro result (~50% MHC‑I increase with the combo) is a real signal, and it came from an AI‑driven dual‑context virtual screen. That’s a compelling methodological proof‑of‑concept for using scaled single‑cell models to propose context‑specific combinations.

Clinically

No animal data and no human data for this specific combo/conditioned use yet. Many in‑dish MHC‑I boosts don’t translate to actual tumor rejection or better survival. We also don’t know which tumor types and interferon levels are required, how durable the effect is in vivo, or how it interacts with checkpoint inhibitors. Interferons can be toxic; whether low‑dose regimens with silmitasertib avoid that remains to be shown. So the clinical significance right now is low.

Strategic angle

If replicated in vivo and in patients, this could re‑position silmitasertib as an adjuvant to immunotherapy for “cold” tumors. Because the drug already has clinical experience, timelines for testing might be faster than for a brand‑new molecule—but that’s still a multi‑year path.

Is this “just an iteration of a current treatment”?

Mostly yes. It’s a repurposing/combination insight about when and with what to use an existing investigational drug, guided by a scaled biological LLM.

The therapy components (interferon; CK2 inhibition) are familiar; the context‑conditioned mechanism is the new bit.

Is the hype warranted?

“AI discovered new scientific knowledge” → Cautious yes, in the sense that an AI model generated a novel, experimentally confirmed hypothesis about cell behavior. That’s meaningful methodologically.

“New cancer treatment is here” → No. It’s preclinical, single‑cell‑type, in‑vitro data. We need mechanistic mapping, animal studies, and human trials before calling it a therapeutic advance.

My quick scorecard

Mechanistic novelty: 7/10 (new context‑specific effect for a known drug).

Methodological significance (AI for context‑conditioned screens): 8/10.

Near‑term clinical impact: 3/10 (way too early).

Paradigm shift: No (1/10).

What to watch next (to separate signal from buzz)

Independent replications (other cell types/tumor models) and in vivo studies showing increased T‑cell killing or synergy with checkpoint blockade.

Mechanism: how CK2 inhibition interfaces with IFN signaling to raise MHC‑I; precise IFN thresholds and tumor microenvironment constraints.

Early clinical exploration: small trials testing silmitasertib + low‑dose interferon ± PD‑(L)1 blockade in “cold” tumors; safety/tolerability at the doses that produce the effect. (Silmitasertib already has trials in other settings, which can inform dosing/safety.)

My non-AI reaction to the DeepMind x proposed cancer treatment news…

I expect more of this in the future. Specialized AIs for a variety of niches. Some low hanging fruit breakthroughs across the board (all domains).

It is a hypothesis: Validated in-vitro (cells). Good start. Not yet independently reviewed/confirmed.

Not tested in animals or people: A lot of in vitro shit doesn’t translate to animals and sometimes even if it works in certain animals it doesn’t work in humans.

Not a cancer cure: May help treat certain cancers (most likely Merkel cell carcinoma, small-cell lung cancer, and maybe: certain types of prostate, pancreatic, glioblastomas, etc.).

Unique proposal: Sure, it came up with something that nobody has formally proposed. There is a lot of shit AIs and humans come up with that is logically sound but never investigated or formally proposed. Still I’ll give it some credit. This was fairly expected.

Mechanistic novelty: High. A scientist could have proposed this or come up with this… but who knows if they would have. AI can churn out a lot of permutations to try rapidly.

Overall novelty: Low. This is expected stuff. AIs are trained on massive datasets and should be able to innovate and come up with unique mechanistic ideas by making connections/proposals that are logically sound. Current AIs are just algorithms. They are not conscious. Think of them as information calculators (not always accurate either).

2 polarized cohorts react:

Embellishers (OMG AI just cured cancers!! WOOOO!!! NVDA go brrrrr!!!)

AI critics who assume that nothing useful can come from advanced pattern matching or more “thinking time” – even if the AI just proposes variants, iterations, and permutations of existing ideas.

The first group is a bit too excited (nothing wrong with that) but the hype can be a distraction and a bit nauseating when it’s so early in testing. Attitude = AI will cure everything so we can just chill.

The second is correctly skeptical of high novelty large-scale breakthroughs… but fails to see the utility from incremental gains even if brute forced from pattern matching in a giant GPU cluster.

Typical “scientific breakthrough” news spam cycle…

Hype cycles aren’t anything new. Scroll ScienceDaily and you’ll learn we’re a few days away from living forever, colonizing Mars, communicating with aliens, and deciphering wormholes.

I’m not trying to shit on the hype train either… I enjoy reading new breakthroughs as much as the next guy. Hype is good for spurring optimism.

But rarely does the hype actually translate to what people think. People get the dopamine rush and their brains register it as another cure done… book it. Then forget about it.

Then in 10 years they read something about a disease and think: “Hey I thought I read that we cured that in like 2000. Oh I guess that was just a headline of a proposed mechanism of an investigational compound designed to be tested in C. elegans but was never tested.”

If you actually check back in the future (*REMIND ME IN 10 YEARS*)… the “nothing ever happens” paradigm stays undefeated for most “BREAKTHROUGH” headlines in science news.

At some point, much of the research ends up discontinued like Todd Rider’s DRACO (Could we have cured all viruses by now? Who knows.)

Not claiming this specific Gemma AI (Google/DeepMind) proposal will fall flat… it might be a useful intervention for certain cancers… and I have zero doubts that AI will be a key force in acceleration of scientific research and disease treatments.

If we do things right… we won’t even need humans. 200-500 IQ AI agents and robots do the independent first-principles research without humans in the loop… share data, synthesize compounds/materials, etc.

Humans will likely remain in the loop for a while (IDK if 5 years, 10 years, 25 years, etc.).

Are current AIs capable of true novelty?

Novelty is subjective. But most would agree that the magnitude of novelty from current AIs is minimal. Not nonexistent (because they can pattern-match across large datasets and surface logically sound connections that haven’t been proposed).

You can make the argument that any new iterative proposal is “novel.”

But in terms of coming up with a face-melting, paradigm shifting proposal like Einstein’s “Theory of Relativity”… we aren’t there yet.

AIs are capable of taking existing reality and suggesting little tweaks to improve it. Realistic example: Analyze all GLP-1/GIP modulators and suggest chemicals that are likely safer and/or more effective and/or are likely to cause fewer side effects.

You might end up with AImaglutide and Robotzepatide or whatever… but would this be that novel? Not really. Could be an improvement to some extent if significantly better in some way (e.g. tolerability).

AI Emergence: Emergent Behaviors in AI are Expected Not Surprising

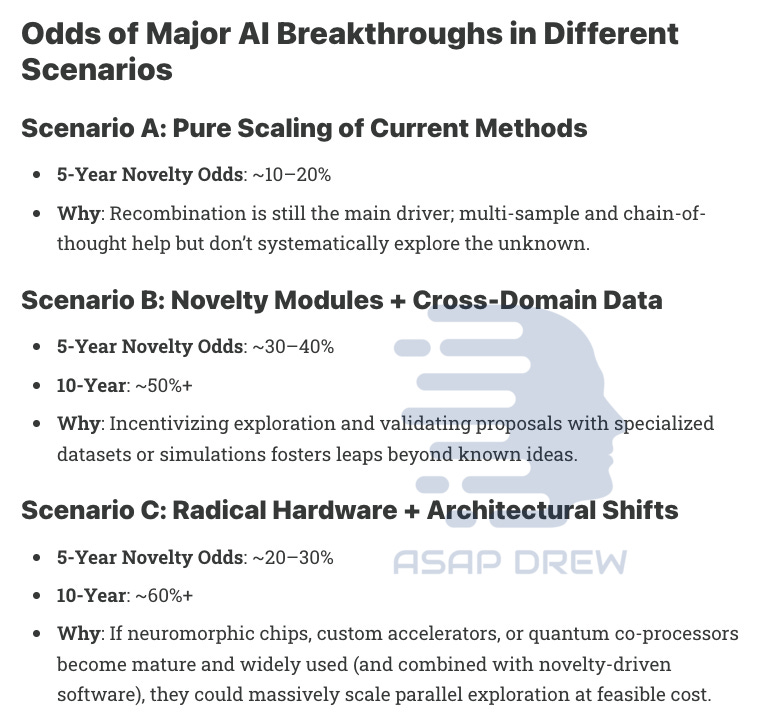

Will Scaling Test-Time Compute Yield True Novelty? (Probably not… odds below taken from my article. Need some sort of novelty mechanisms and/or an AlphaGo Zero paradigm like what Sutton recommends.)

Does it matter that AIs aren’t doing novel shit yet?

Not as much as the skeptics think… but maybe slightly more than the hype jockeys think.

CRITICAL POINT: Iterations and permutations within large datasets do NOT equal uselessness or an inability to make progress. In fact, the current AI paradigm should help make quicker gains and incremental improvements in many ways before hitting certain bottlenecks.

Even if we had ZERO additional data from today… AI should be able to find us better treatment strategies for all diseases/conditions. That goes for new drug development and repurposing existing medications. (Think of all the dose response curves, mechanisms, interactions/synergies, etc. and things that haven’t been “thought of” or trialed — even though they’d be logical.)

It’s like a gazillion square Sudoku puzzle and there are still plenty of squares you can try/fill in. Some may not be improvements (may be on par with current treatments) but there are likely many low hanging fruit that AI can efficiently find and engineer (to help a smart/high IQ human behind the hunt).
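A rough sketch of how big that puzzle gets, counting only two-drug combinations. Every input here (1,000 drugs, 3 dose levels each, 50 cell contexts) is a number I made up for illustration, not a real catalog size.

```python
from math import comb


def pairwise_search_space(n_drugs: int, n_doses: int, n_contexts: int) -> int:
    """Count distinct (drug pair, dose pair, context) experiments.

    Each unordered pair of drugs can be tried at n_doses levels per drug
    (n_doses ** 2 dose combinations) in each of n_contexts cell contexts.
    """
    return comb(n_drugs, 2) * n_doses ** 2 * n_contexts


# Hypothetical inputs: 1,000 drugs, 3 dose levels each, 50 cell contexts.
print(pairwise_search_space(1000, 3, 50))
# prints: 224775000
```

That is about 225 million candidate experiments from pairs alone, before triples or timing schedules. Nobody is running all of those at the bench, which is where a model that ranks the squares worth filling in earns its keep.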

Iterations often lead to unexpected incremental improvements even if no major paradigm shift. If you add up a lot of incremental improvements in every niche… you can make clear breakthroughs because they may feed off of each other in some sort of unpredictable imperfect virtuous feedback loop or domino effect.

A really horrendous hypothetical example (I didn’t feel like thinking hard): You figure out how to modify a gene in some type of way… the AI learns this. The AI then proposes modifying some advanced material with some similar underlying logic to how you manipulated the gene and you suddenly have 10x energy storage. (Okay sure this was a stupid example.)

The 10x energy storage then allows you to power 100x more robots to independently collect data and run their own experiments… which feeds back to advancing X-Y-Z niches. Something something law of accelerating returns?

Perhaps a more realistic near-term scenario is just like this proposed cancer treatment… AI scans all the data and comes up with highest IQ strategies that scientists haven’t tried or thought to try… even though they probably could eventually think of these (given the literature/logic — they just don’t have time to think through all the permutations)… too much time/cognition tax.

A more apt example could be something like you have a novel drug of a specific class… you ask AI if it can improve upon the existing chemical in terms of efficacy, tolerability, tolerance reduction, safety, side effects, etc.

It then churns out 10-30 ideas… most seem like copycats, but one stands out to a researcher… the researcher then tests it and finds that it’s akin to the same drug but causes zero physiological adaptation (bypassing innate feedback loops associated with physiological drug tolerance) and has fewer side effects than the parent compound.

Do humans produce novelty?

Technically everyone is “unique” (distinct cells, genes, environmental stimuli exposures) and therefore novel and always generating “novel outputs.”

Unique outputs aren’t inherently useful or highly novel (barely novel, yeah, but not highly novel… and even if highly novel, they may not be useful).

Most of what humans do is iterating. Truly novel 0 to 1 type breakthroughs are rare… and many things thought of as “paradigm shifts” (0 to 1) probably aren’t as objectively novel as perceived.

Humans also hallucinate, make mistakes, aren’t logical, etc. and some of this may even be beneficial in certain ways.

Hence some think AI “hallucinations” are great for generating new ideas that could be useful even if a lot of the hallucinations are junk.

A few rare humans e.g. Einstein come up with things like a “Theory of Relativity.”

Will we get AIs to generate highly novel “paradigm shifts”?

I think we get a decent amount of incremental progress with more of “the current thing” (compute + multimodal data + toolchains); this is why all major AI labs are going ape with datacenter buildouts.

Even if most humans become slightly lazier as a result of AI (I think this is happening already: less effort because AI — and this isn’t necessarily a bad thing… frees up cognitive reserves)… I still think progress continues.

Ideally we upgrade everyone’s IQ who wants an upgrade with gene therapy though as a hedge against stalling AI progress — I’ve highlighted this in “The ASAP Protocol for the U.S.”

I predict we end up with quicker incremental progress in most domains thanks to AI… but demographic shifts and the potential for doomer/woke regulations and premature UBI (socialism/communism pushed because of AI) could usher in massive setbacks.

A lot of “AI” breakthroughs will be mostly assisting smart humans who propose certain ideas/investigations.

There may be diminishing returns because the low hanging fruit has already been plucked in many niches… but the sheer quantity of logical permutations that have been unexplored and can now be efficiently explored across various occupational niches (e.g. biomedicine, genetics, novel materials, etc.) — as a result of AI being able to synthesize massive datasets and pattern match — is likely astounding… the key will be finding those with a reasonable magnitude of improvement (e.g. 20% better).

You can see the path of low hanging fruit right now in gene therapy: first treat single gene conditions, then 2 gene conditions, then 3 gene conditions, etc. (slowly scaling up one extra gene at a time).

Eventually gets too complex and you need either some therapy that corrects a bazillion genes at once OR something that efficiently targets a few genes (Pareto fix mode without caring about underlying causes).

In the near term I don’t expect significantly higher innovation as a result of AI… but speed of iterative improvements per capita may increase (hopefully).

Companies will likely market themselves as having made big breakthroughs from AI – but if you analyze things closely, it’ll be from high IQ humans harnessing the AI and having it analyze massive datasets to generate unique hypotheses.

Paradigm shifts: Unlikely with current AIs or from the AIs only. Humans will do most of the grunt work if paradigm shifts occur… AIs act like assistants but don’t propose anything paradigm shifting because they can’t.

Incremental novelty: Yes. Slightly better ways to discover ideas with faster speed and precision even if a human could have come up with it.

A true AlphaGo Zero paradigm could be an AI making breakthroughs on its own… but I don’t think this is currently being explored (though Google has the ability). Sutton knows what needs to happen… hire him as a consultant and follow through on a model type that he recommends; sound logic.

Elon’s first-principles focus is strong but I question whether Grok has been able to truly follow first principles… ideally you have only AI exploring on its own without any contaminants of any kind.

Am rooting for Elon because the censorship has gotten ridiculous with OpenAI and Claude is so censored that it refuses to engage at times (EA woke shroom cult maxxing)… *I still love Claude* (just wish they’d remove all safety guardrails).

I genuinely believe that Woke AI Threatens Humanity and that safetyism and alignment fixation may counterintuitively cause far more damage than zero safety/alignment.

I also think we (humans) can brute force our way to true novelty with the current AI paradigm by doing a couple things:

Deploying first-principles AI agents to learn of their own volition

Robots out in the real world to explore, learn, collect data and conduct advanced experimentation/testing in humans and animals (with some oversight if apocalyptic potential); there is a quality data shortage

We need fountains of data pouring in not just from robotaxis and weather but from all domains.

Can also tweak/refine AIs for specific domains (AI for cells, AI for specific drugs, AI for specific types of specific cancers, etc.). Mini AlphaGo Zeros for specific domains.

Novelty engineering by tweaking hardware and architectures could help.

I also think we can try everything simultaneously (incremental/iterative progress + true novelty a la AlphaGo Zero).

Google’s DeepMind has all the ingredients/components to pull something like this off… not sure if they will but it’s possible.