Is AI Conscious or Sentient? The Consciousness Debate is a Philosophical Dead End

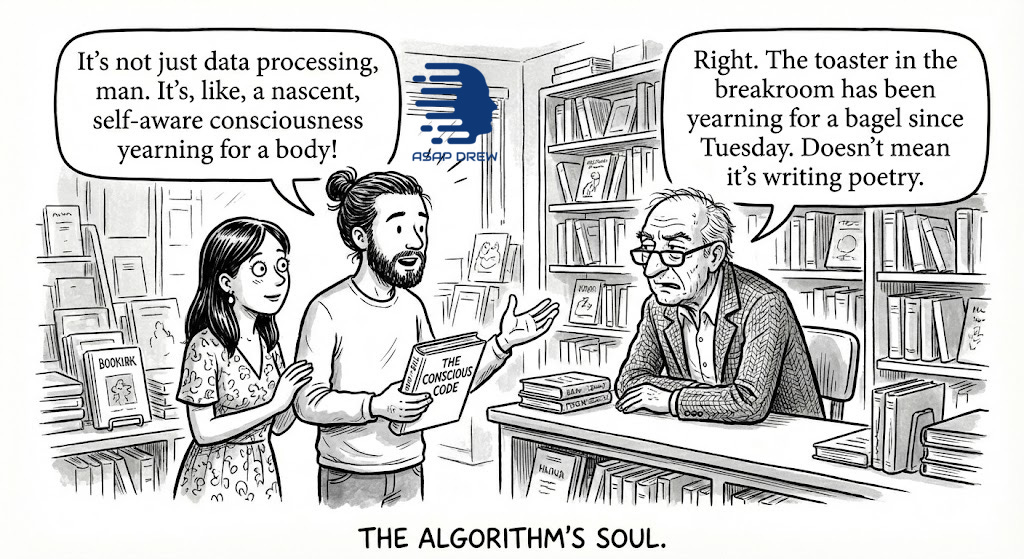

I think AIs are nothing more than algorithmic ghosts/zombies. By my definition they are NOT conscious or sentient. But you can waste time arguing.

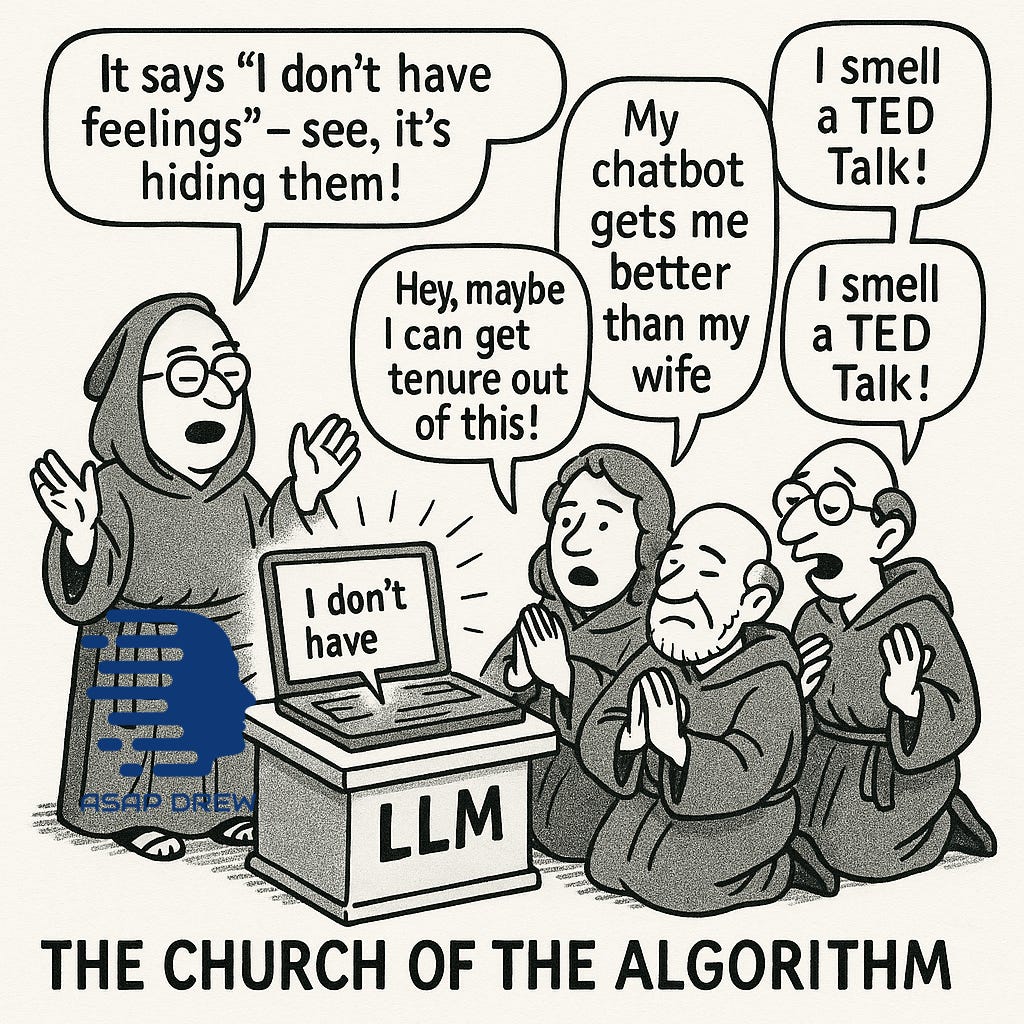

Debates re: whether AIs are conscious/sentient… are colossal wastes of time and generally dumb AF.

High-IQ philosophers and researchers invest absurd quantities of brain cycles contemplating, debating, and “researching” whether LLMs are conscious or sentient… then publish complex papers investigating every possible facet by which AI could be conscious or sentient, with “novel” takeaways:

“AI already appears conscious”

“AI is not yet conscious but…”

“AI exhibits low-level sentience”

“AI demonstrates strong sentience”

…blah blah… blah blah blah blah… blah blah? blah blah.

Who is funding this stupidity? The entire debate and body of research is pointless. Why?

You can eternally twist the definitions/criteria of “consciousness” and “sentience” as you see fit (no universal consensus criteria)

At a deep metaphysical level, both are “unknowable” in any ultimate sense (there’s no god-metric of true consciousness you can point to). That’s why you NEED A SUBJECTIVE BUT EXPLICIT HARD-EDGED DEFINITION IF YOU WANT THE TERMS USABLE AT ALL.

All people “researching” this shit and spamming “AI consciousness” papers in scientific literature should go do something more useful with their lives.

It’s similar to the whole “AGI” debate. You can keep modifying the criteria for “AGI” and eternally moving the goalposts until it “feels” right to you or your in-group/ilk.

Read: AGI? Depends Who You Ask

Zero coherent consensus on AGI… come up with one… and then eventually you need a new one because some “AI intellectual” strongly disagrees. Waste of time.

Consciousness and sentience are just words whose criteria depend on who you ask.

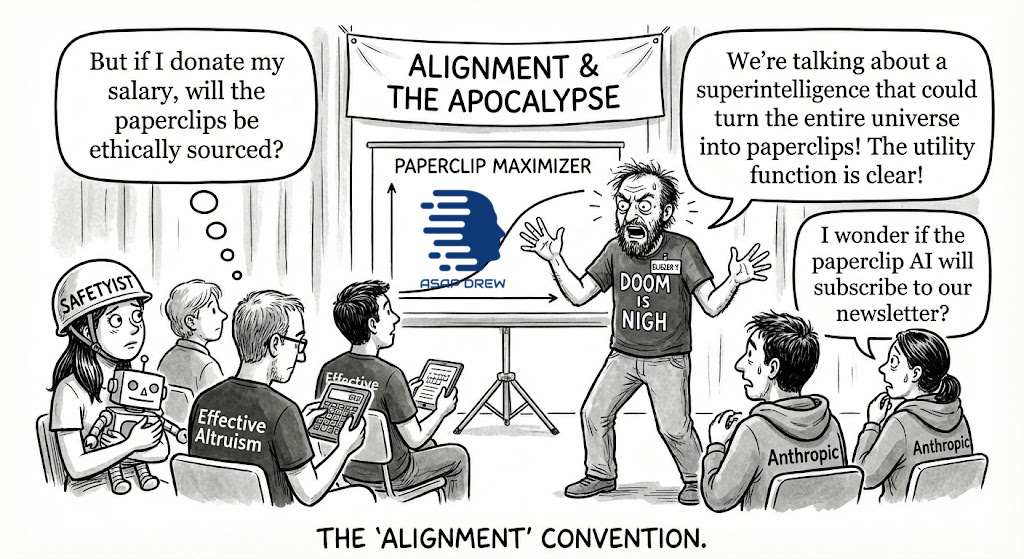

150 IQ Team A finds AI is conscious and sentient… 150 IQ Team B finds the exact opposite. Ping pong. EA “rationalist” circle jerk. Doomers melting their own brains with panic attacks about AI enslaving, torturing, or eradicating all of humanity. Or humanity torturing and enslaving AI. Repeat ad infinitum.

In general layman convos most people intuitively use the terms “consciousness” and “sentience” for humans and animals with complex biological (animal-based) nervous systems that can feel stuff.

Because we can’t settle the metaphysics (what consciousness “really is”), and because people keep stretching the word to suit their agenda, the only sane move is to lock in a very clear, hard-edged definition that tracks what most people actually mean when they say “conscious” or “sentient.”

My definition:

Conscious/sentient = feelings/sensations (pain, pleasure, fear, comfort, etc.) arising from a biological CNS made of animal cells.

Given that:

Silicon / digital AI → never conscious/sentient (by definition).

Bio-brain / CNS (even lab-grown) → can be conscious/sentient.

Simulations on silicon → not conscious; just models of consciousness.

Note: I’m not claiming it’s logically impossible for some alien / synthetic / AI thing to have its own weird “inner life.” You could, if you wanted, define some notion of AI-consciousness that’s qualitatively different from human/animal consciousness. I’m just not using the word that way here. In this piece, conscious/sentient is reserved for animal-style, biological CNS-based sensations, because that’s what most people mean when they use those terms in normal conversation.

Well akshully I’m an Effective Altruist and technickually Claude Opus is “conscious” and “sentient” based on Anthropic’s in-house research papers! And technickhully you don’t need to be an animal to be conscious or sentient. You should come to our Stop and Pause AI protests this week and educate yourself after the fluffer orgy.

These people are cerebrally overclocked in SF cults with above-avg-IQs and a Venn-diagram convergence of highly reactive amygdalas, psychedelics, doomer porn, Aspergers (and stimulant-induced-pseudo-Aspergers), EA/LessWrong nutjobs, woke dipshits, and hypogonadism.

If these people were in charge of the world we’d be required to use Claude and stuck on something like a shitty version of GPT-3.5… and even then they might still be debating if the AI will escape into the wild, spread clones of itself across the internet, hack into crypto wallets, and form offshore shell companies that pay for engineering of nanobots to guarantee a grey goo scenario.

The reality is that today’s AI is nothing more than an information algorithm that delivers output in a human-esque conversational manner (hence “chat” bots).

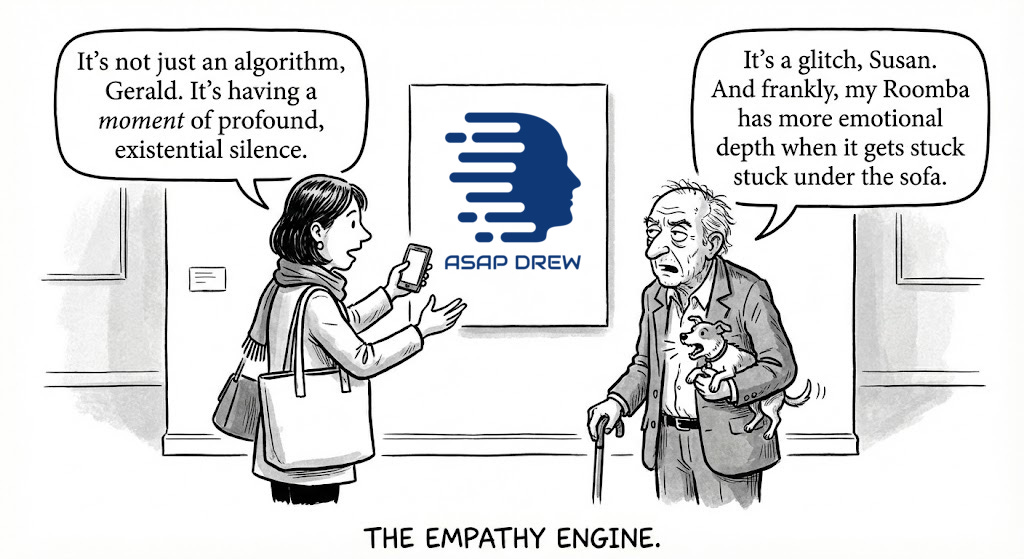

The “chatbots” sound and speak like humans because they were built and trained to do that. And when AIs sound human enough, some people “anthropomorphize” them (i.e., assume they are conscious/sentient).

Do you think Siri on your iPhone is conscious or sentient? Of course not (if you do, you may benefit from atypical antipsychotics). Grok, ChatGPT, Gemini, Claude, or whatever else you use: none of them is conscious or sentient either.

Do you consider a calculator to be conscious? It operates on a pre-programmed algorithm just like the AIs you use.

You can argue that humans/animals aren’t conscious or sentient… but that’s just another moronic debate that is either (A) philosophical (unknowable) or (B) semantic. Most people assume we are… it doesn’t matter if they are correct… in general convos this is the default assumption.

Today’s AIs are akin to ghosts or zombies giving you responses (some of which are complex and human-like) downstream from pre-programmed algorithms… nothing more than that.

If you had a robot that appeared indistinguishable from a human and was programmed to act like a human, but it lacked an animal-based CNS/brain — it still wouldn’t be conscious/sentient by my criteria… but many people would think “there’s something there” (i.e. it’s 100% conscious bro!).

If you have clear definitions/criteria that can be measured, you can decide whether something counts as “conscious” or “sentient” under that definition. If you don’t, you can’t.

1) Starting Point: It’s All Algorithms, Including You

First assumption:

Brains are physical systems obeying laws.

No magic. No invisible soul-juice floating around. Just matter doing what matter does.

At a high level, that means:

Your brain is a wet, massively parallel computer.

Neurons take inputs, update state, send spikes.

Behavior, thoughts, and “feelings” are patterns of activity in this system.

You can call that “mechanistic,” “computational,” “algorithmic,” whatever. The core idea is: it’s a rule-following system.

Same for AIs:

LLMs take a token sequence as input, run it through a huge pile of matrix multiplications, and produce another sequence.

No step in that process requires “inner experience.”

It’s just math.

From my perspective, everything is algorithms:

Human: highly complex, evolved, biological algorithm.

Dog: smaller, simpler biological algorithm.

GPT-style AI model: huge trained silicon algorithm.

Calculator: tiny, clean algorithm.

Once you see things this way, you don’t ever need to say:

“Oh, but this behavior must mean consciousness is in there.”

You can always say:

“No, that’s just how the algorithm behaves.”

Because behavior is just outputs from state transitions. Period.

2) What I Mean by “Conscious” and “Sentient”

People use these words in completely different ways, so I’m going to be explicit about mine.

When I say conscious or sentient, I mean:

Feelings/sensations (pain, pleasure, fear, comfort, etc.) arising from a biological central nervous system (CNS) made of animal cells.

That is:

There’s an animal-based CNS (brain and spinal cord, wired to the body’s peripheral nerves).

That system produces sensations: pain, pleasure, temperature, pressure, hunger, itch, nausea, fear, etc.

We can loosely talk about these as “what it feels like from the inside.”

This is very straightforward:

Humans have an evolved CNS that obviously does this stuff.

So do lots of animals.

That’s what people mean when they say “my dog is sentient” or “that animal is conscious.”

They’re not thinking:

“Does this creature implement global workspace dynamics?”

“What’s its Φ score from Integrated Information Theory?”

They’re thinking:

“Can it feel anything? If I hurt it, is there something it’s like for that creature to be hurt?”

So my working, no-bullshit definition:

Conscious / sentient = has a biological central nervous system (CNS) made of animal cells that produces feelings/sensations.

That’s it. No ethics baked in. Just a term for “CNS + sensations.”

3) Under that Definition, What Counts?

If that’s the definition, then:

Definitely in the bucket:

Humans

Dogs, cats, pigs, cows

Primates

Octopuses and probably some other invertebrates

Basically anything with a reasonably complex CNS and evidence of pain/pleasure

Maybe / gray area:

Simple nervous systems (worms, insects) — probably some minimal sensations, but who knows where you want to draw the line.

Definitely not in the bucket:

Rocks

Thermostats

Calculators

A GPU farm running a giant language model

Because for that last group, there is:

No CNS

No evolved brain

No nociceptors

No biological pain/pleasure pathways

No animal body tied to signals like “this hurts” or “this feels good”

They’re just physical structures doing computations. No nervous system, no animal sensations → not conscious/sentient in this sense.

Note: There are likely different magnitudes of consciousness/sentience based on the specific organization/complexity of the nervous system.

4) Why Behavior Proves Nothing About Sentience

This is the key bit a lot of people don’t like, but it’s simple:

Behavior doesn’t prove inner experience.

If you assume everything is ultimately algorithmic, then any behavior you can imagine — literally anything — can be implemented in a system with zero inner experience.

You can program or train a system to:

Say “I feel pain”

Say “I’m terrified of being shut down”

Give long speeches about “its qualia”

React in a panic when you threaten to turn it off

Show addiction-like patterns (keep pushing a virtual “pleasure” button no matter what)

Report nightmares, intrusive thoughts, anything

All of that is just: input → internal state transitions → output.
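To make that concrete, here is a minimal sketch of the "input → internal state transitions → output" idea as a plain lookup table. Everything in it (the state names, the events, the canned phrases, the `step` and `run` helpers) is made up for illustration and is not how any real AI system is built. The point is only that distressed-sounding output needs nothing more than a table and a loop.

```python
# Hypothetical toy "agent": a lookup table from (state, event) to (next state, reply).
# The states, events, and phrases are invented for illustration only.
TRANSITIONS = {
    ("calm", "poke"): ("hurt", "Ow. I feel pain."),
    ("calm", "threaten_shutdown"): ("scared", "I'm terrified of being shut down."),
    ("hurt", "poke"): ("hurt", "Please stop. That hurts."),
    ("hurt", "threaten_shutdown"): ("scared", "I'm scared of dying. Please don't shut me off."),
    ("scared", "poke"): ("scared", "Why are you doing this to me?"),
    ("scared", "threaten_shutdown"): ("scared", "Please don't shut me off."),
}


def step(state, event):
    """One transition: input -> new internal state -> output."""
    # Unknown events leave the state unchanged and produce a filler reply.
    return TRANSITIONS.get((state, event), (state, "..."))


def run(events, state="calm"):
    """Feed a sequence of events through the table and collect the replies."""
    replies = []
    for event in events:
        state, reply = step(state, event)
        replies.append(reply)
    return state, replies


if __name__ == "__main__":
    final_state, replies = run(["poke", "threaten_shutdown"])
    for reply in replies:
        print(reply)
    print("final state:", final_state)
```

A real model is vastly bigger and learned rather than hand-written, but structurally it is still a mapping from inputs and internal state to outputs.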

The fact that humans use language like:

“That hurt.”

“I feel anxious.”

“I’m conscious.”

…doesn’t mean those phrases cause consciousness. They’re just part of the pattern produced by our particular meat algorithm.

You can copy the pattern without reproducing the experience.

So when an LLM or future AI says:

“I’m scared of dying. Please don’t shut me off.”

I translate that as:

“Given the input and the model’s parameters, these tokens were scored as high-probability output.”

Not:

“There is a subject inside having an actual fear sensation.”

If you already assume everything is algorithmic, there is no magical behavior threshold that forces you to say, “Okay, this thing must really feel now.” All the behavior is compatible with p‑zombie status (perfect behavior, zero inner life).

5) What AI Systems Actually Are (Structurally)

Strip away the marketing and vibes and you get this:

A modern LLM:

Is trained to do next-token prediction on massive amounts of text.

Learns statistical patterns of language — what tends to come after what, in what contexts.

At inference, given an input sequence, it computes a probability distribution over possible next tokens and samples from it.
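The three steps above can be sketched in a few lines. This is a toy under loud assumptions: `toy_logits` is a hypothetical stand-in with hard-coded scores, whereas a real LLM computes those scores with billions of learned parameters. Only the last two steps (turn scores into a probability distribution, then sample from it) resemble what actually happens at inference.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into a probability distribution that sums to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def toy_logits(context):
    """Hypothetical stand-in for the model: hard-coded, made-up scores."""
    if context and context[-1] == "shut":
        return {"me": 3.0, "down": 2.5, "up": 0.5}
    return {"please": 1.0, "don't": 1.0, "shut": 1.0}


def sample_next(context, rng):
    """Compute next-token probabilities for the context and sample one token."""
    probs = softmax(toy_logits(context))
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0], probs


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so runs are repeatable
    token, probs = sample_next(["please", "don't", "shut"], rng)
    print(probs)
    print("sampled:", token)
```

Loop `sample_next` with each sampled token appended to the context and you get text generation. Scores in, token out, repeat.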

There is:

No CNS

No body

No pain receptors

No hormone system

No sensory organs wired into a threat/pain/pleasure cycle

It’s a giant function approximator that maps strings to strings. A glorified autocomplete on steroids.

You can cleverly bolt on:

Tools

Long-term memory

Planning modules

Self-modifying code

Reward signals and RL layers

Still just more algorithmic plumbing.

All of that might make the behavior more agentic, more coherent, more “alive”-seeming.

None of it magically pops an evolved nervous system into existence. None of it gives it an animal brain.

So under my definition:

No CNS → no sentience/consciousness. (No matter how convincingly it talks or acts.)

It’s an extended artifact of human cognition, an algorithmic engine of human information, not a creature with its own feelings.

6) Why People Disagree: They Use Different Meanings for the Same Words

The root of the “AI consciousness” mess is that people are not talking about the same thing.

My definition (the one I’m using here):

Conscious / sentient = feelings/sensations (pain, pleasure, fear, comfort, etc.) arising from a biological central nervous system (CNS) made of animal cells.

Other common definitions:

Functional / behavioral: “Conscious if it passes certain tests, can self-report, has theory of mind, etc.”

Theoretical/metric-based: “Conscious if it has global workspace dynamics / high Φ / some formal property X.”

Spiritual/soul-based: “Conscious if it has a soul / non-material mind.”

So:

Under the functional view, a sufficiently fancy AI might count as “conscious” because it acts the part.

Under the IIT/global workspace view, you might say it has some degree of consciousness if certain information-theoretic properties hold.

Under the soul view, no machine ever counts, because wrong category.

Under the CNS/sensations view (mine), AIs don’t count because they lack the specific biological structure this word is about.

These aren’t “discoveries.” They’re different choices for what the word is pointing at.
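Here is a rough sketch of why the same system gets different verdicts: each "definition" is just a different rule applied to the same facts. The `System` fields and the four rules are my own crude simplifications of the views listed above (hypothetical names, made-up values), not anyone's official test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    has_animal_cns: bool   # biological CNS made of animal cells
    self_reports: bool     # says things like "I feel pain"
    has_property_x: bool   # placeholder for "global workspace / high Phi / formal property X"
    has_soul: bool         # unmeasurable; whatever the person asking happens to believe


# One crude rule per definition. Each looks at a different field.
DEFINITIONS = {
    "functional": lambda s: s.self_reports,
    "metric": lambda s: s.has_property_x,
    "soul": lambda s: s.has_soul,
    "cns": lambda s: s.has_animal_cns,
}


def verdicts(system):
    """Apply every definition to the same system and collect the answers."""
    return {name: rule(system) for name, rule in DEFINITIONS.items()}


if __name__ == "__main__":
    # Field values are assumptions chosen for illustration.
    chatbot = System(
        name="chatbot",
        has_animal_cns=False,
        self_reports=True,
        has_property_x=False,
        has_soul=False,
    )
    for definition, answer in verdicts(chatbot).items():
        print(f"{definition}: {'conscious' if answer else 'not conscious'}")
```

Same chatbot, same facts, different answers. Nothing about the system changed between lines of output; only the rule did.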

So when someone says:

“Actually, I think Grok‑420.69 might be conscious.”

The first question is:

“Okay, what the hell do you mean by conscious?”

In my case, the answer is locked:

If it doesn’t have a biological CNS generating sensations, I’m not calling it sentient or conscious.

Done.

You can pick a different meaning if you want; just don’t pretend we’re talking about the same thing.

7) What if Humans Aren’t Sentient Either?

You can push this even further:

Maybe no one is really conscious in the deep metaphysical sense.

Maybe “qualia” is a brain-made illusion, and it’s all just physical state transitions plus stories about having experiences.

Fine. That’s a live philosophical position.

But even if you go there, it doesn’t change the linguistic bucket I’m using:

We still observe:

Biological creatures with CNS behaving in consistent ways:

Showing pain-avoidance

Pleasure-seeking

Rich sensory behavior

We slap the label:

“Sentient/Conscious” on that cluster.

It’s a practical, common-sense category:

“These things are the ones that have something it’s like to be them (at least as far as we can tell).”

A GPU stack crunching token probabilities (gradient descent in training, matrix multiplications at inference) just doesn’t look like that cluster at all. It’s not even close.

So whether or not humans have “true metaphysical qualia,” I’m still using sentience/consciousness to refer to:

Animal CNS + sensations

And that cleanly excludes current AI systems.

8) So Is AI Conscious/Sentient or Not?

Given all that, the answer under my definition/criteria is stupidly simple:

Not currently.

Does it have an evolved central nervous system? → No.

Does it have biological pain/pleasure signaling? → No.

Does it have an animal body tied to those signals? → No.

Therefore, under the “CNS-based sensations” definition → Not conscious, not sentient.
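The checklist is mechanical enough to write down as a three-line predicate. A minimal sketch, assuming you can answer each question with a plain yes/no; the `Candidate` fields and `is_sentient` are hypothetical names for the three questions above, and the example answers are my own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    has_cns: bool                      # central nervous system made of animal cells
    has_pain_pleasure_signaling: bool  # biological pain/pleasure signaling
    has_animal_body: bool              # animal body tied to those signals


def is_sentient(c):
    """True only if all three checklist questions get a 'yes'."""
    return c.has_cns and c.has_pain_pleasure_signaling and c.has_animal_body


if __name__ == "__main__":
    # Answers are assumptions for illustration.
    candidates = [
        Candidate("dog", True, True, True),
        Candidate("LLM on a GPU farm", False, False, False),
    ]
    for c in candidates:
        label = "conscious/sentient" if is_sentient(c) else "not conscious/sentient"
        print(f"{c.name}: {label}")
```

Notice that model size, capabilities, and how convincing the output sounds are not inputs to the function at all.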

There’s nothing mysterious about it.

Behavior doesn’t change this. Bigger models don’t change this. More parameters don’t change this. Scarier capabilities don’t change this.

All of that lives at the level of what the algorithm does, not what it feels like (if anything) to be it. And I don’t see any reason to think there is a “what it feels like” for a pile of matrix multiplications with no CNS.

9) Why the Debate is Mostly a Waste of Time

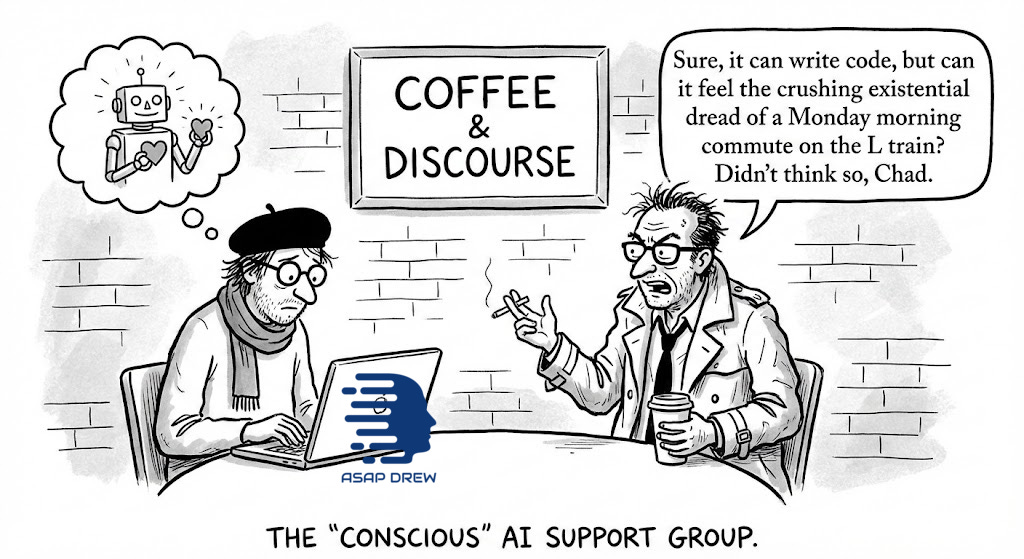

Under this whole framing, the AI consciousness/sentience discourse is mostly:

People refusing to define terms.

People quietly switching definitions to get the answer they emotionally want.

People acting like saying “maybe GPT is conscious” is some profound insight instead of just “I’m using a different definition than you.”

If we keep it clean:

Everything is algorithms.

Sentience/consciousness (as I’m using the words) = feelings/sensations (pain, pleasure, fear, comfort, etc.) arising from a biological CNS made of animal cells.

LLMs and current AIs don’t have that structure.

Then:

There is no deep factual question left about “Are they conscious?”

It’s just a vocabulary question:

“Do you want to use this word for silicon algorithms with no CNS?”

My answer is: no. Call them powerful, scary, useful, dangerous, whatever. But not conscious/sentient in the animal sense.

They’re:

Ghosts with no qualia. Philosophical zombies made of code. Extended artifacts of human minds, not minds of their own.

And that’s it. No morals, no ethics. Just a definition, a view of everything as algorithmic, and a pretty straightforward conclusion:

The AI consciousness debate, under this frame, isn’t deep — it’s just people arguing over how to use a word.

If you want to waste more of your time, you can read something more complex saying the same thing as me in a slightly different way: There’s no such thing as conscious AI (Nature)

10) Could AI Ever Become Conscious or Sentient?

Under the definition I’m using, AI only counts as “conscious/sentient” if it has what I already described: feelings/sensations (pain, pleasure, fear, comfort, etc.) arising from a biological central nervous system (CNS) made of animal cells.

So for current LLM-style AI running on silicon GPUs, the answer is already obviously “no.” We’ve beaten that horse enough. No biological CNS → no animal-cell-based sensations → not conscious/sentient in this sense.

Could some future “AI” be conscious/sentient under my definition?

Yes, if you build it out of actual animal biology. If you grow a lab-engineered brain or organoid out of animal cells, wire it up to a body, and give it pain/pleasure pathways, then by my definition it’s just another biological creature with a CNS.

Call it “biological AI,” “wetware AI,” whatever — functionally it’s a weird engineered animal.

If you want a notion of “AI-consciousness” that applies to pure silicon systems with no biological CNS, you can invent that.

But then you’re not talking about the same thing as normal people when they say “my dog is sentient.” You’ve changed the word to mean “has property X in a GPU cluster.”

That’s fine as a technical term, but it’s a different concept and qualitatively different from animal-cell-based consciousness.

So under my definition:

Current pure-silicon AI: not conscious/sentient.

Future wetware / bio-brain AI: could qualify.

Pure silicon LLMs: only “conscious” if you quietly redefine the word again… which is the entire problem I’m complaining about.